What is a Transistor?

Transistors are tiny switches that can be triggered by electric signals. They are the basic building blocks of microchips, and roughly define the difference between electric and electronic devices. They appear in everything from milk cartons to laptops, which illustrates just how useful they are.

How does a transistor work?

A traditional mechanical switch either enables or disables the flow of electricity by physically connecting (or disconnecting) two ends of wire. In a transistor, a signal tells the device to either conduct or insulate, thereby enabling or disabling the flow of electricity. This property of acting like an insulator in some circumstances and like a conductor in others is unique to a special class of materials known as “semiconductors.”

Before we delve into the secret of how this behavior works and how it is harnessed, let’s gain some understanding of why this triggering ability is so important.

The utility of a signal-triggered switch

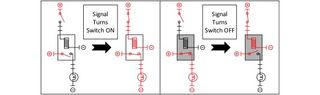

The first signal-triggered switches were relays. A relay uses an electromagnet to flip a magnetic switch. Here we see two styles of relay: one where a signal turns the switch on; the other where a signal turns the switch off:

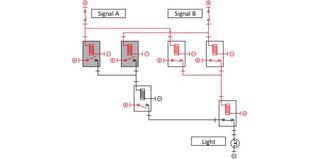

To understand how signal-triggered switches enable computation, first imagine a battery with two switches and a light. There are two ways we can hook these up. In series, both switches need to be on for the light to turn on. This is called “Boolean AND” behavior:

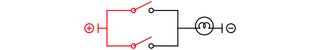

In parallel, the light turns on if either switch (or both) is on. This is called “Boolean OR” behavior:

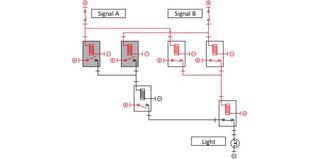
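The series and parallel arrangements above can be sketched in a few lines of Python. This is only an illustration: the function names `series` and `parallel` are made up for this example, and `True` stands for a closed (on) switch or a lit bulb.

```python
from itertools import product


def series(a: bool, b: bool) -> bool:
    """Two switches in series: current flows only if both are closed (AND)."""
    return a and b


def parallel(a: bool, b: bool) -> bool:
    """Two switches in parallel: current flows if at least one is closed (OR)."""
    return a or b


# Print the full truth table for both wirings.
print("A     B     series  parallel")
for a, b in product([False, True], repeat=2):
    print(f"{a!s:<5} {b!s:<5} {series(a, b)!s:<7} {parallel(a, b)!s}")
```

The table shows the series light on in only one of the four cases, and the parallel light on in three of them.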

What if we want the light to turn on if either switch is on, but off if both switches are on? Such behavior is called “Boolean XOR” for “eXclusive OR.” Unlike AND and OR, it is impossible to achieve XOR behavior using on/off switches … that is, unless we have some means of triggering a switch with a signal from another switch. Here’s a relay circuit that performs XOR behavior:
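One way to reason about XOR with relays is to model it in Python. The sketch below assumes one possible wiring (each switch signal drives one relay of each style, and the two resulting branches feed the light in parallel); it is not a transcription of any particular diagram, and all function names are hypothetical.

```python
def normally_open(signal: bool) -> bool:
    """Relay style 1: the contact closes while the signal is present."""
    return signal


def normally_closed(signal: bool) -> bool:
    """Relay style 2: the contact opens while the signal is present."""
    return not signal


def xor_light(switch_a: bool, switch_b: bool) -> bool:
    """Return True if the light is on for the given switch positions."""
    # Branch 1 conducts when A is on and B is off (two contacts in series).
    branch_1 = normally_open(switch_a) and normally_closed(switch_b)
    # Branch 2 conducts when B is on and A is off (two contacts in series).
    branch_2 = normally_closed(switch_a) and normally_open(switch_b)
    # The two branches are wired in parallel to the light.
    return branch_1 or branch_2


for a in (False, True):
    for b in (False, True):
        state = "on" if xor_light(a, b) else "off"
        print(f"A={a!s:<5} B={b!s:<5} light={state}")
```

The key point is that each contact is operated by a signal rather than by hand, which is what lets one switch's state open or close a path controlled by the other.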

Once we understand that XOR behavior, together with AND, is what enables us to add digits and "carry the 10," it becomes clear why signal-triggered switches are so vital to computation. Similar circuits can be constructed for all sorts of calculations, including addition, subtraction, multiplication, division, conversion between binary (base 2) and decimal (base 10), and so on. The only limit to our computing power is how many signal-triggered switches we can use. All calculators and computers achieve their mystical power through this method.
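To make the link to addition concrete, here is a minimal Python sketch of binary addition built only from XOR, AND and OR. In each column, XOR yields the sum digit and AND yields the carry into the next column. The function names and the 8-bit default width are assumptions chosen for illustration.

```python
def half_adder(a: int, b: int) -> tuple[int, int]:
    """Add two bits. Returns (sum_bit, carry_bit)."""
    return a ^ b, a & b  # XOR gives the sum digit, AND gives the carry


def full_adder(a: int, b: int, carry_in: int) -> tuple[int, int]:
    """Add two bits plus an incoming carry, using two half adders."""
    partial_sum, carry_1 = half_adder(a, b)
    sum_bit, carry_2 = half_adder(partial_sum, carry_in)
    return sum_bit, carry_1 | carry_2


def add(x: int, y: int, width: int = 8) -> int:
    """Ripple-carry addition of two non-negative integers, one bit at a time."""
    result, carry = 0, 0
    for i in range(width):
        bit, carry = full_adder((x >> i) & 1, (y >> i) & 1, carry)
        result |= bit << i
    # The final carry becomes the extra top bit of the result.
    return result | (carry << width)


print(add(5, 3))      # 8
print(add(200, 100))  # 300
```

Each `full_adder` call stands in for a small cluster of signal-triggered switches; chaining more of them handles wider numbers.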

By looping signals backward, signal-triggered switches also make certain kinds of memory possible. While this method of information storage has taken a back seat to magnetic and optical media, it is still important to some modern computer operations such as caching.
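The idea of looping a signal back can be sketched with a set/reset latch, one standard arrangement in which two NOR gates feed each other. The article does not name a specific memory circuit, so this choice and the names below are assumptions for illustration.

```python
def nor(a: bool, b: bool) -> bool:
    """NOR gate: output is on only when both inputs are off."""
    return not (a or b)


def sr_latch(set_: bool, reset: bool, q: bool) -> bool:
    """Return the newly stored bit, given the inputs and the old stored bit.

    Setting and resetting at the same time is not a meaningful request
    for this circuit and is not handled here.
    """
    q_bar = not q
    for _ in range(4):  # let the looped-back signals settle
        new_q = nor(reset, q_bar)      # each gate's output feeds the other gate
        new_q_bar = nor(set_, new_q)
        if (new_q, new_q_bar) == (q, q_bar):
            break
        q, q_bar = new_q, new_q_bar
    return q


q = False
q = sr_latch(True, False, q)   # pulse "set"
print(q)                       # True
q = sr_latch(False, False, q)  # inputs released: the bit is remembered
print(q)                       # True
q = sr_latch(False, True, q)   # pulse "reset"
print(q)                       # False
```

The second call is the interesting one: with both inputs off, the output depends only on what the loop was already holding.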

Relay computers

While relays have been used since the discovery of the electromagnet in 1824, particularly after the 1837 invention of the telegraph, they would not be used for computation until the 20th century. Notable relay computers included the Z1 through Z3 (1938-1941) and the Harvard Marks I and II (1944 and 1947). The problem with relays is that their electromagnets consume a lot of power, and all that wasted energy turns into heat. For this reason, relay computers need extensive cooling. On top of that, relays have moving parts, so they are prone to breaking.

Vacuum tubes

The successor to the relay was the vacuum tube. Rather than relying on a magnetic switch, these tubes relied on the “thermionic effect” and resembled dim light bulbs. Vacuum tubes were developed in parallel with light bulbs throughout the 19th century and were first used in an amplifying circuit in 1906. While free of moving parts, their filaments worked only so long before burning out, and their sealed-glass construction was prone to other means of failure.

Understanding how a vacuum tube amplifies is as simple as understanding that a speaker is no more than a piece of fabric that moves back and forth depending on whether the wires behind it are on or off. We can use a low-power signal to operate a very large speaker if we feed the signal into a signal-triggered switch. Because vacuum tubes work so much more quickly than relays, they can keep up with the on/off frequencies used in human speech and music.

The first programmable computer to use vacuum tubes was the 1943 Colossus, built to crack codes during World War II. Its first version had roughly 1,600 tubes. Later, the 1946 ENIAC became the first electronic computer capable of solving a large class of numerical problems, with around 17,000 tubes. On average, one of ENIAC’s tubes failed every two days and took 15 minutes to find and replace.

Finally, transistors!

Transistors (a portmanteau of “transfer” and “resistor”) rely on a quirk of quantum mechanics known as an “electron hole.” A hole is the lack of an electron at a spot where one could exist in semiconducting material. Introducing an electric signal to a transistor creates electric fields that force holes and electrons to swap places. This allows regions of the transistor that normally insulate to conduct (or vice versa). All transistors rely on this property, but different types of transistor harness it through different means.

The first “point-contact” transistor appeared in 1947 thanks to the work of John Bardeen, Walter Brattain and William Shockley. Keep in mind, the electron was only discovered in 1897 and Max Planck’s first quantum hypothesis was only made in 1900. On top of this, high-quality semiconductor materials only became available in the 1940s.

Point-contact transistors were soon replaced by “bipolar junction” transistors (BJTs) and “field effect” transistors (FETs). Both BJTs and FETs rely on a practice known as “doping.” Doping silicon with boron creates a material that has an abundance of electron holes, known as “P-type” silicon. Likewise, doping silicon with phosphorus creates a material with an abundance of electrons, known as “N-type” silicon. A BJT is made from three alternating layers of silicon types, and thus has either a “PNP” or “NPN” configuration. An FET is made by etching two wells of one type of silicon into a channel of the other, and thus has either an “n-channel” or “p-channel” configuration. NPN transistors and n-channel transistors function similarly to “signal turns switch on” relays and tubes; likewise, PNP transistors and p-channel transistors function similarly to “signal turns switch off” relays and tubes.

Transistors were far sturdier than vacuum tubes, so much so that no technology has yet surpassed them; they are still used today.

Integrated circuits and Moore’s Law

The first transistor computer was built in 1953 by the University of Manchester using 200 point-contact transistors, much in the style of earlier relay and vacuum-tube computers. This style of wiring individual transistors soon fell out of practice, thanks to the fact that BJTs and FETs can be manufactured in integrated circuits (ICs). This means a single block of crystalline silicon can be treated in special ways to grow multiple transistors with the wiring already in place.

The first IC was constructed in 1958, and the first commercial microprocessor followed in 1971. Since then, transistors have gotten smaller and smaller such that the number that fit into an IC has doubled roughly every two years, a trend dubbed “Moore’s Law.” In the time between then and now, computers have permeated virtually every aspect of modern life. ICs manufactured in 2013 (specifically central processors for computers) contain roughly 2 billion transistors that are each 22 nanometers in size. Moore’s Law will finally come to an end once transistors cannot be made any smaller. It is projected that this point will be reached once transistors reach a size of approximately 5 nm, around the year 2020.
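The doubling rule is easy to check with a little arithmetic. The Python sketch below starts the count in 1971 and assumes a starting figure of 2,300 transistors, a number commonly cited for an early-1970s microprocessor and not one taken from this article. The function name is hypothetical.

```python
def projected_transistors(start_count: float, start_year: int, year: int,
                          doubling_period_years: float = 2.0) -> float:
    """Project a transistor count assuming a fixed doubling period."""
    doublings = (year - start_year) / doubling_period_years
    return start_count * 2 ** doublings


# 42 years at one doubling every two years is 21 doublings.
estimate = projected_transistors(2_300, 1971, 2013)
print(f"{estimate:,.0f}")  # 4,823,449,600
```

Under these assumptions the projection for 2013 comes out near 4.8 billion, the same order of magnitude as the roughly 2 billion quoted above, which is about as close as a rule of thumb like this can be expected to get.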


Robert Coolman, PhD, is a teacher and a freelance science writer and is based in Madison, Wisconsin. He has written for Vice, Discover, Nautilus, Live Science and The Daily Beast. Robert spent his doctorate turning sawdust into gasoline-range fuels and chemicals for materials, medicine, electronics and agriculture. He is made of chemicals.