Two New TV Breakthroughs That Will Blow Your Mind (Op-Ed)


David Pedigo is the senior director of learning & emerging trends at CEDIA. Pedigo oversees CEDIA's training and certification department as well as the Technology Council, whose mission is to inform members and industry partners on emerging trends, threats and opportunities within the custom electronics sector. Pedigo contributed this article to Live Science's Expert Voices: Op-Ed & Insights.

I often get the question, "Should I buy a new TV?" My answer is typically more complicated than people would like, but that's because we are on the verge of seeing significant changes in television, perhaps only 6 to 12 months away.

So if you can wait just a bit, my answer is typically no: hold off. Here's why.

For many people, 4K TV has delivered more hype than value. The jump from analog TV to HDTV was dramatic, but unless you sit very close to an Ultra HD/4K TV, or the screen is very large, its improvement over HDTV is much more subtle.

A closer relationship with your TV

For the most part, TV has gone through an evolutionary process of increasing display pixel density, and for the TV in the living room or home theater, that process is now complete (there are major caveats, but they are beyond the scope of this article).

To understand why, think about how humans see images. In 1886, neo-impressionist painters Georges Seurat and Paul Signac developed a new way of painting called pointillism, in which the painter uses thousands of small dots that, viewed from a distance, form an image. Television images are created in much the same way today.


In the analog days, TV broadcasts consisted of vertical and horizontal lines, and where they intersected, a dot was formed, called a pixel (short for "picture element"). A standard-definition 480i image has a potential 307,200 pixels on the screen ("potential" because of interlaced vs. progressive scanning).

When analog TVs were in their heyday, the image was transmitted using interlacing (hence the "i" in 480i). In interlacing, half of the scan lines are displayed at one time, alternating between the odd and even lines. This was an efficient way to transmit an image, because the alternation happened so quickly, typically 29.97 frames (each made of two half-frame fields) per second. However, interlacing, particularly in analog, degraded image quality. As televisions moved toward digital, transmissions more often arrived in progressive scan, in which the scan lines are drawn sequentially rather than alternately.
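To make the numbers concrete, here is a minimal sketch of the pixel count and the odd/even field split described above. It assumes a 640 x 480 grid, which is simply the grid that yields the 307,200 figure; the function names are hypothetical and are not part of any broadcast standard.

```python
# Sketch only: the 640 x 480 grid is an assumption that reproduces the
# 307,200-pixel figure; analog SD broadcasts were not literally sampled this way.
SD_WIDTH, SD_HEIGHT = 640, 480
FRAME_RATE = 30000 / 1001  # NTSC frame rate, about 29.97 frames per second


def pixel_count(width: int, height: int) -> int:
    """Number of picture elements in a width x height grid."""
    return width * height


def interlaced_fields(height: int) -> tuple[list[int], list[int]]:
    """Split scan lines numbered 1..height into the odd field and the even field."""
    lines = range(1, height + 1)
    odd_field = [n for n in lines if n % 2 == 1]
    even_field = [n for n in lines if n % 2 == 0]
    return odd_field, even_field


odd, even = interlaced_fields(SD_HEIGHT)
print(pixel_count(SD_WIDTH, SD_HEIGHT))  # 307200
print(len(odd), len(even))               # 240 240: each field carries half the lines
print(round(2 * FRAME_RATE, 2))          # 59.94 fields per second (two per frame)
```

Each field carries only half the picture, which is why interlacing could move images efficiently while sacrificing some quality.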

While 300,000 pixels sounds like a lot, once manufacturers started making televisions bigger, picture quality suffered. Just as with a pointillist painting, the bigger the picture, the farther away you needed to sit, because increasing the screen size (or painting size) proportionately increases the size of the dots.
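The proportional growth of the dots can be checked with a little geometry. This sketch assumes a 4:3 screen with 480 visible rows and two hypothetical screen sizes (25 and 50 inches); the helper names are illustrative only.

```python
import math


def screen_height(diagonal: float, aspect_w: float, aspect_h: float) -> float:
    """Height of a screen with the given diagonal and aspect ratio (same units)."""
    return diagonal * aspect_h / math.hypot(aspect_w, aspect_h)


def pixel_pitch(diagonal: float, rows: int, aspect_w: float = 4, aspect_h: float = 3) -> float:
    """Height of one row of dots: screen height divided by the number of rows."""
    return screen_height(diagonal, aspect_w, aspect_h) / rows


small = pixel_pitch(25, 480)  # hypothetical 25-inch 4:3 set, 480 rows
big = pixel_pitch(50, 480)    # hypothetical 50-inch 4:3 set, same 480 rows
print(big / small)            # 2.0: twice the diagonal means twice the dot size
```

Because the row count stays fixed, every dot grows in step with the screen, so the viewer has to back away to keep the dots from becoming visible.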

In the 1980s, large-screen TVs became a fad. For those of us old enough to remember them, the thought of moving one was dreadful: they were as heavy as they were big. The problem was that the recommended minimum seating distance for optimal viewing was six times the screen size. Thus, if you bought a primo 50-inch big-screen TV, you needed to sit 300 inches (25 feet) away.

When HDTV came out, manufacturers were able to add enough pixels (roughly 1 million to 2 million) to bring the seating distance down to three times the screen size. Even though the shape of the screen widened, with the same 50-inch TV you needed to sit only about 12 feet away to avoid sacrificing image quality.

In the last few years, however, Ultra HD/4K TVs have hit the market. Ultra HD comes in at a whopping 8.3 million pixels, and the seating distance for that same 50-inch TV drops to about 6 feet.
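The article's figures for a 50-inch set (25, 12 and 6 feet) work out to 6, 3 and 1.5 times the screen diagonal. The sketch below reproduces that rule of thumb alongside the pixel count of each format. The 1.5x factor for Ultra HD is inferred from the 6-foot figure, and applying 3x to 720-line HD as well as 1080-line HD is an assumption.

```python
# Sketch of the article's seating-distance rule of thumb, expressed as a
# multiple of the screen diagonal. 6x and 3x come from the text; 1.5x for
# Ultra HD is inferred from "6 feet for a 50-inch TV". Treating 720-line HD
# with the same 3x factor as 1080-line HD is an assumption.
FORMATS = {
    # name: (width in pixels, height in pixels, distance multiplier)
    "SD 480": (640, 480, 6.0),
    "HD 720": (1280, 720, 3.0),
    "HD 1080": (1920, 1080, 3.0),
    "Ultra HD 2160": (3840, 2160, 1.5),
}


def seating_distance_feet(diagonal_in: float, multiplier: float) -> float:
    """Minimum viewing distance in feet for a screen diagonal given in inches."""
    return diagonal_in * multiplier / 12


for name, (width, height, mult) in FORMATS.items():
    print(f"{name}: {width * height:,} pixels, "
          f"sit about {seating_distance_feet(50, mult):.2f} ft from a 50-inch TV")
```

The HD entries bracket the "1 million to 2 million" range (921,600 and 2,073,600 pixels), and Ultra HD's 8,294,400 pixels is the 8.3 million quoted above.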

Now, we have reached the threshold of human vision regarding image resolution.

With pixel resolution mostly solved, the industry has moved on to the next problem: how to make better pixels. Over the last few years, manufacturers, content creators and standards bodies have been working on improving the other two main parts of an image: contrast ratio (dynamic range) and color space (gamut). Combined, these two innovations produce a significantly better picture, one that viewers will readily notice.

The resulting two technologies that manufacturers will promote during the next few years are high dynamic range (HDR) and wide color gamut (WCG).

HDR: Killer contrast

Dynamic range, in this context, is what most people refer to as contrast ratio: in layman's terms, the ratio between the brightest whites and the darkest blacks a display can reproduce. Despite the marketing hype, a 100:1 contrast ratio, particularly on a projection system, produces a very good image by today's video standards.

However, humans can see far beyond a 10,000:1 contrast ratio, and HDR will get many displays much closer to that level. This is a very promising development because, owing to its anatomical structure, the human eye is very sensitive to changes in contrast.

The biggest driving force behind HDR is the increasing light output of displays. The average TV of the 2000s and earlier had a maximum white level of 100 nits (100 cd/m²). (The nit, equal to one candela per square meter, is a unit of luminance; a display's nit rating is the maximum light output it produces.) Combining typical black levels with that 100-nit white, the dynamic range of most televisions equated to roughly 7 f-stops on a camera, where each f-stop represents a doubling of light. Humans, by contrast, can see between 14 and 24 f-stops, depending on conditions.
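Because each f-stop doubles the light, converting between stops and contrast ratios is just a power of two and a base-2 logarithm. A minimal sketch (the function names are illustrative):

```python
import math


def stops_to_ratio(stops: float) -> float:
    """Each f-stop doubles the light, so n stops span a 2**n : 1 contrast ratio."""
    return 2.0 ** stops


def ratio_to_stops(ratio: float) -> float:
    """Inverse: how many doublings fit inside a given contrast ratio."""
    return math.log2(ratio)


print(stops_to_ratio(7))                 # 128.0: a typical pre-HDR TV, per the article
print(stops_to_ratio(14))                # 16384.0: low end of human vision, per the article
print(round(ratio_to_stops(10_000), 1))  # 13.3: stops in a 10,000:1 ratio
```

Seven stops is only about 128:1, which shows how far a conventional TV falls short of even the low end of what the eye can handle.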

Once HDR displays truly hit the market, they will be able to reach 1,600 nits (or more) for brief scenes, such as the sun reflecting off a metal surface or an explosion. Going from 100 to 1,600 nits is a 16-fold increase, or 4 additional f-stops of brightness. At the same time, manufacturers are able to reduce black levels to a quarter of their previous level, which adds another 2 f-stops at the dark end. Ultimately, this means high dynamic range will deliver a significantly better image from a contrast-ratio perspective, getting us much closer to the desired, true 10,000:1 contrast ratio.
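The HDR gains can be added up in stops. This sketch uses only figures from the article: 100 nits before HDR, 1,600 nits peak with HDR, black levels cut by a factor of four, and a 7-stop starting point.

```python
import math

SDR_PEAK_NITS = 100     # typical pre-HDR white level, per the article
HDR_PEAK_NITS = 1_600   # HDR peak for brief highlights, per the article
BLACK_LEVEL_FACTOR = 4  # black level reduced to one quarter
SDR_STOPS = 7           # article's estimate for a typical pre-HDR TV

brightness_stops = math.log2(HDR_PEAK_NITS / SDR_PEAK_NITS)  # log2(16) = 4
black_stops = math.log2(BLACK_LEVEL_FACTOR)                  # log2(4) = 2
gain_stops = brightness_stops + black_stops                  # 6 stops in total
gain_factor = 2 ** gain_stops                                # 64-fold contrast gain
total_ratio = 2 ** (SDR_STOPS + gain_stops)                  # 13 stops

print(brightness_stops, black_stops, gain_stops)  # 4.0 2.0 6.0
print(gain_factor, total_ratio)                   # 64.0 8192.0
```

Thirteen stops works out to about 8,192:1, which is indeed close to the 10,000:1 target.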

Wide Color Gamut: 50 percent more hues

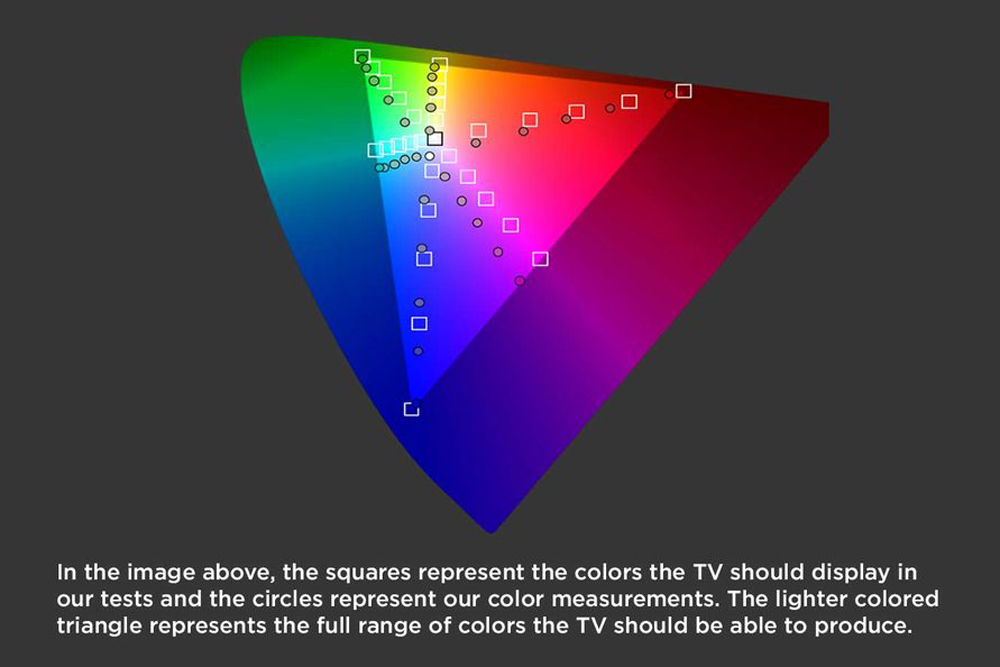

High dynamic range alone is enough to make anyone extremely bullish about the new wave of displays hitting the market. However, it's only half of the equation. The other half is the range of colors a display can reproduce. Most displays today use an ITU (International Telecommunication Union) standard called Rec. 709, which standardizes the maximum values of the primary colors (red, green and blue) and therefore of every color in between. (This gets a little complicated, but each primary color has specific x and y coordinates on a related chart called the CIE 1931 chromaticity diagram.)
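Because a display can only mix its three primaries, the colors it can show form a triangle on the CIE 1931 chart, and checking whether a color is reproducible is a point-in-triangle test. The coordinates below are the published Rec. 709 and BT.2020 primaries and the D65 white point; the helper functions are hypothetical and not from any particular color library.

```python
# Sketch: is a CIE 1931 (x, y) chromaticity inside a display's gamut triangle?
# Coordinates are the published Rec. 709 / BT.2020 primaries; helpers are illustrative.
Point = tuple[float, float]

REC709: tuple[Point, Point, Point] = ((0.640, 0.330), (0.300, 0.600), (0.150, 0.060))
BT2020: tuple[Point, Point, Point] = ((0.708, 0.292), (0.170, 0.797), (0.131, 0.046))
D65_WHITE: Point = (0.3127, 0.3290)  # white point shared by both standards


def _cross(o: Point, a: Point, b: Point) -> float:
    """z-component of (a - o) x (b - o); its sign tells which side of line o->a b is on."""
    return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])


def in_gamut(p: Point, primaries: tuple[Point, Point, Point]) -> bool:
    """True if p lies inside (or on an edge of) the triangle of R, G, B primaries."""
    r, g, b = primaries
    signs = [_cross(r, g, p), _cross(g, b, p), _cross(b, r, p)]
    has_neg = any(s < 0 for s in signs)
    has_pos = any(s > 0 for s in signs)
    return not (has_neg and has_pos)  # inside only if all signs agree


print(in_gamut(D65_WHITE, REC709))  # True
print(in_gamut(BT2020[0], REC709))  # False: BT.2020 red lies outside Rec. 709
print(in_gamut(REC709[0], BT2020))  # True: Rec. 709 red fits inside BT.2020
```

The second result is the "deeper reds" point in geometric form: BT.2020's red primary sits beyond anything a Rec. 709 display can mix.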

Rec. 709 video typically uses 8 bits for each primary color, which allows a television to reproduce approximately 16 million colors. Digital video stores the intensity of each primary color as a binary number, where each bit is either 0 or 1. With 8 bits, there are 2^8 = 256 possible shades of each primary color (red, green and blue). Since each pixel combines 256 shades of red, 256 of green and 256 of blue, a display can reproduce 256 × 256 × 256, or roughly 16.7 million, colors. While this may sound like a lot, it really is nowhere near the range of colors the human eye can see.
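The color count follows directly from the bit depth: shades per primary is 2 raised to the number of bits, and total colors is that number cubed. A minimal sketch (the function name is illustrative):

```python
def colors_for_bit_depth(bits_per_channel: int, channels: int = 3) -> tuple[int, int]:
    """Return (shades per channel, total colors) for a given bit depth."""
    shades = 2 ** bits_per_channel     # each extra bit doubles the number of shades
    return shades, shades ** channels  # every R, G, B combination is a distinct color


shades, total = colors_for_bit_depth(8)
print(shades)        # 256
print(f"{total:,}")  # 16,777,216
```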

The new color space is called ITU-R BT.2020, and it will offer 50 percent more colors than current televisions. Its wider primaries allow much deeper reds and more vibrant yellows, and by using 10 bits for each primary color, which gives 1,024 variations instead of 256, it allows much smoother gradients.
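Smoother gradients come from finer steps between adjacent shades. This sketch quantizes a smooth 0-to-1 ramp at 8 and 10 bits and reports how many distinct shades it lands on, the size of each step, and the resulting total color count. The 4,096-sample ramp and the function names are arbitrary illustrative choices.

```python
def quantize(value: float, bits: int) -> int:
    """Map a 0.0-1.0 intensity to the nearest of 2**bits integer codes."""
    return round(value * (2 ** bits - 1))


def distinct_steps(bits: int, samples: int = 4096) -> int:
    """Count how many distinct codes a smooth 0-to-1 ramp lands on."""
    ramp = (i / (samples - 1) for i in range(samples))
    return len({quantize(v, bits) for v in ramp})


for bits in (8, 10):
    step = 1 / (2 ** bits - 1)  # size of one shade step on a 0-to-1 scale
    print(bits, distinct_steps(bits), f"{step:.5f}", f"{(2 ** bits) ** 3:,}")
# 8 256 0.00392 16,777,216
# 10 1024 0.00098 1,073,741,824
```

Each 10-bit step is about a quarter the size of an 8-bit step, which is what reduces visible banding in skies and shadows.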

So what does this mean?

Put all these enhancements together and we are in for an amazing improvement in the home video experience: HDR, which enables roughly a 64-fold (about 6,400 percent) increase in contrast ratio; wide color gamut, which allows 1,024 shades of each primary color through 10-bit color; and increased pixel resolution.

Follow all of the Expert Voices issues and debates — and become part of the discussion — on Facebook, Twitter and Google+. The views expressed are those of the author and do not necessarily reflect the views of the publisher. This version of the article was originally published on Live Science.
