Computer Sees Your Hipster Haircut, Sells You a Plaid Shirt (Op-Ed)

This article was originally published at The Conversation. The publication contributed the article to LiveScience's Expert Voices: Op-Ed & Insights.

Researchers at the University of California, San Diego, are developing an algorithm that aims to identify whether you’re a hipster, a goth or a punk, just from the cut of your social media jib.

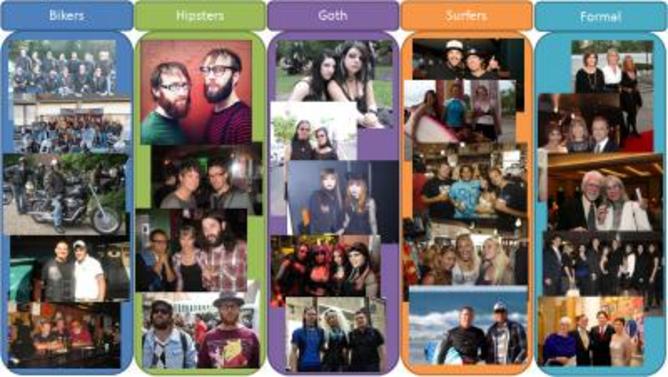

The team has been analysing pictures of groups of people in an attempt to place them within one of eight sub-cultures according to their appearance. These included hipsters, goths, surfers and bikers.

By looking out for trendy haircuts, telltale tattoos and jewellery, the algorithm is being trained to make assumptions about you based, for example, on your social media pictures.

Websites can then offer you a more tailored experience. A surfer might be given recommendations about holidays and a punk updated on gigs for their favourite band. And what better way for a hipster to make sure they stay ahead of the curve than to be updated on the very latest in organic, fairtrade coffee products, as and when they come on the market?
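To make the idea concrete, here is a minimal Python sketch of that last step: turning a predicted sub-culture into tailored content. The entries simply reuse the examples above. The `RECOMMENDATIONS` dictionary, the `recommend` function and the generic fallback are hypothetical, not anything the researchers or any website have described.

```python
# Hypothetical mapping from a predicted sub-culture to content a site might promote.
# The entries echo the article's examples; the fallback is an illustrative assumption.
RECOMMENDATIONS = {
    "surfer": "holiday recommendations",
    "punk": "gig updates for favourite bands",
    "hipster": "the latest organic, fairtrade coffee products",
}
DEFAULT_CONTENT = "general-interest content"


def recommend(predicted_tribe: str) -> str:
    """Return tailored content for a predicted tribe, or generic content if it is unknown."""
    return RECOMMENDATIONS.get(predicted_tribe, DEFAULT_CONTENT)


print(recommend("surfer"))  # holiday recommendations
print(recommend("goth"))    # general-interest content
```

The fallback is there because a classifier's label can be missing or simply wrong, so some default has to be chosen.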

How it works

The researchers are using what is known as a multi-label classification algorithm. Such algorithms are widely used in computer vision to draw conclusions from clues found in images. The algorithm takes a set of photos, each with its own label, such as “cat”, “car” or “emo”, and then finds the features in the photos that best predict the label of a new photo. It relies on the assumption that pictures with similar feature values are likely to have similar labels.
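That similarity assumption can be illustrated with a k-nearest-neighbour classifier, one of the simplest methods built directly on it: a new photo gets the label most common among the training photos whose feature values are closest to its own. This is a sketch, not the researchers' algorithm, and unlike a true multi-label classifier it predicts a single label per photo. The feature names and training data are invented for illustration.

```python
import math
from collections import Counter

# Toy training data (invented): each photo reduced to feature values plus a label.
# Hypothetical features: [horn_rimmed_glasses, moustache, plaid_shirt, black_clothing, wetsuit]
TRAINING = [
    ([1, 1, 1, 0, 0], "hipster"),
    ([1, 0, 1, 0, 0], "hipster"),
    ([0, 0, 0, 1, 0], "goth"),
    ([0, 1, 0, 1, 0], "goth"),
    ([0, 0, 0, 0, 1], "surfer"),
    ([0, 0, 1, 0, 1], "surfer"),
]


def predict(features, k=3):
    """Label a new photo by majority vote among its k most similar training photos."""
    # Sort training photos by Euclidean distance to the new photo's features.
    nearest = sorted(TRAINING, key=lambda item: math.dist(item[0], features))[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]


print(predict([1, 1, 0, 0, 0]))  # hipster
```

Glasses plus a moustache, with no plaid shirt, still lands closest to the hipster examples, which is the "similar features, similar labels" idea in miniature.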

So if it looks at a picture, sees a pair of horn-rimmed glasses, a waxed moustache and a lumberjack shirt, and is told that it is looking at a hipster, it can move on to a new photo and identify a quinoa lover just from their look.

The researchers say the algorithm is 48% accurate on average, whereas chance would get answers right only 9% of the time: if you were to guess the content of a picture without seeing it, you would be right about once in every 11 attempts on average. The machine does better than that, but not as well as a human using the full power of their street savvy.
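The chance figure follows from simple arithmetic: guessing uniformly among n equally likely categories is right with probability 1/n, so "once every 11 times" works out at about 9%. The short calculation below also compares that baseline with the reported 48%. It assumes the categories are equally likely, which real data may not be.

```python
n_categories = 11          # "once every 11 times", as stated in the article
chance = 1 / n_categories  # uniform guessing among equally likely categories
reported = 0.48            # researchers' reported average accuracy

print(f"chance accuracy: {chance:.1%}")                      # chance accuracy: 9.1%
print(f"improvement over chance: {reported / chance:.1f}x")  # improvement over chance: 5.3x
```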

The algorithm uses a “parts and attributes” approach, breaking each picture down into a set of feature values. In this case, the head, neck, torso and arms of each subject were scanned for attributes such as tattoos, colours, haircuts and jewellery.
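Here is a minimal sketch of how a "parts and attributes" description might be flattened into feature values. The parts and attributes listed, and the `to_feature_vector` helper, are hypothetical choices loosely based on the examples above; the researchers' actual parts, attributes and detectors are not described here.

```python
# Hypothetical body parts and attributes, loosely following the article's examples.
PARTS = ["head", "neck", "torso", "arms"]
ATTRIBUTES = ["tattoo", "dark_colours", "trendy_haircut", "jewellery"]


def to_feature_vector(detections):
    """Flatten per-part attribute detections into one fixed-length 0/1 vector.

    `detections` maps a part name to the set of attributes found on it.
    The vector has one slot per (part, attribute) pair, in a fixed order.
    """
    return [
        1 if attr in detections.get(part, set()) else 0
        for part in PARTS
        for attr in ATTRIBUTES
    ]


photo = {"head": {"trendy_haircut"}, "neck": {"jewellery"}, "arms": {"tattoo"}}
vector = to_feature_vector(photo)
print(len(vector))  # 16
print(vector)       # [0, 0, 1, 0, 0, 0, 0, 1, 0, 0, 0, 0, 1, 0, 0, 0]
```

Fixing the order of the (part, attribute) slots matters: every photo must produce a vector of the same length with the same meaning in each position, or the pictures cannot be compared.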

The algorithm then uses the labelled pictures to learn a classifier. This type of learning problem would be well suited to Google's large-scale machine-learning machinery, which might be able to find the features indicative of particular social groups automatically, without anyone having to specify by hand which parts to examine, such as the face, head, top of the head (where a hat would be), neck, torso and arms.
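Learning a classifier from labelled pictures can be sketched with a multiclass perceptron, one of the simplest learning algorithms. It keeps one weight vector per label and, whenever it mislabels a training photo, raises the true label's weights and lowers those of the wrong guess. This is an illustrative stand-in, not the method used by the researchers; the hand-named features and toy data are invented, and it does not attempt automatic feature discovery.

```python
# Hypothetical features per photo: [glasses, moustache, black_clothes, wetsuit]
EXAMPLES = [
    ([1, 1, 0, 0], "hipster"),
    ([0, 0, 1, 0], "goth"),
    ([0, 0, 0, 1], "surfer"),
    ([1, 0, 0, 0], "hipster"),
]
LABELS = ["goth", "surfer", "hipster"]


def score(weights, features):
    """Dot product of one label's weight vector with a photo's features."""
    return sum(w * x for w, x in zip(weights, features))


def predict(model, features):
    """Pick the label whose weights score highest (ties go to the earliest label)."""
    return max(model, key=lambda label: score(model[label], features))


def train(examples, labels, epochs=10):
    """Multiclass perceptron: on each mistake, reward the true label and penalise the guess."""
    n = len(examples[0][0])
    model = {label: [0] * n for label in labels}
    for _ in range(epochs):
        for features, true_label in examples:
            guess = predict(model, features)
            if guess != true_label:
                for i, x in enumerate(features):
                    model[true_label][i] += x
                    model[guess][i] -= x
    return model


model = train(EXAMPLES, LABELS)
print(model["hipster"])              # [1, 1, 0, 0]
print(predict(model, [0, 1, 0, 0]))  # hipster (moustache alone)
```

After training, the positive weights for each label point to the hand-named features most indicative of that group. A system that learned its features directly from pixels would not need those features named in advance.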

What it’s for

The idea is that if an algorithm can identify the kind of person you are from how you look, sites can offer you a more personally tailored experience.

There are some problems with this approach, though. For a start, 48% accuracy means that a Facebooking goth would be fairly likely to see ads for fixed-wheel bike repairs cropping up in their feed by mistake if the technology were deployed in its current state. While 48% is better than chance, the researchers want their algorithm to perform as well as a human would and plan to keep working to improve its accuracy.
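The arithmetic behind "fairly likely" is short. If the 48% average held for every goth (an assumption, since accuracy can differ between groups), about 52% of goths would be misclassified. Assuming further, purely for illustration, that three of one person's photos were classified independently, the chance of all three being labelled correctly drops to about 11%.

```python
accuracy = 0.48  # reported average accuracy, assumed here to apply to goths too
error_rate = 1 - accuracy

users = 1000  # hypothetical number of goths being classified
expected_wrong = round(users * error_rate)
print(expected_wrong)  # 520

# Assumption: three photos of the same person, each classified independently.
all_three_correct = accuracy ** 3
print(f"{all_three_correct:.1%}")  # 11.1%
```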

But then comes the deeper question of whether you can really make assumptions about what a person is interested in based on how they look. A goth may like to dress in black and still have hobbies more closely aligned with those of a surfer.

We have to ask ourselves whether we want our internet experience to be tailored in this way. Ads and search results tailored according to our gender can already be irritating. It often seems that Facebook assumes that, just because a user is a woman, she will automatically be interested in news about celebrity diets.

While it may be useful to identify a user’s tribe to understand them better, how that information is used depends on certain assumptions about what that tribe likes. As any hipster will tell you, that can change in an instant.

Matthew Higgs is affiliated with University College London.

This article was originally published at The Conversation. The views expressed are those of the author and do not necessarily reflect the views of the publisher. This version of the article was originally published on LiveScience.