Brain-Reading Devices Could Kill the Keyboard

The QWERTY keyboard has dominated computer typing for more than 40 years, but a new breakthrough that translates human thought into digital text may spell the beginning of the end for manual word processing. A first step toward such mind-reading has come from using brain scans to link certain thoughts with certain words.

The fMRI brain scans showed certain patterns of human brain activity sparked by thinking about physical objects, such as a horse or a house. Researchers also used the brain scans to identify brain activity shared by words related to certain topics — thinking about "eye" or "foot" showed patterns similar to those of other words related to body parts.

"The basic idea is that whatever subject is on someone's mind — not just topics or concepts, but also emotions, plans or socially oriented thoughts — is ultimately reflected in the pattern of activity across all areas of his or her brain," said Matthew Botvinick, a psychologist at Princeton University's Neuroscience Institute.

Brain-reading devices would likely first help paralyzed people such as physicist Stephen Hawking, but they remain years away, Botvinick cautioned. Brain-scan technology would also have to become far more portable before ordinary people could hope to free their hands from typing.

Yet Botvinick envisioned a future where such technology could translate any mental content about not just objects, but also people, actions, abstract concepts and relationships.

One existing technology allows patients suffering from complete paralysis — known as locked-in syndrome — to use their eyes to select one letter at a time to form words. Another lab prototype allows patients to make synthesized voices by using their thoughts to create certain vowel sounds, even if they can't yet form coherent words. But truly direct thought-to-word translation remains out of reach.

That's where the current work comes into play. Botvinick had first worked with Francisco Pereira, a Princeton postdoctoral researcher, and Greg Detre, a researcher who obtained his Ph.D. from Princeton, on using brain-activity patterns to reconstruct images that volunteers viewed during a brain scan. But the research soon inspired them to try expressing certain elements in words rather than pictures.

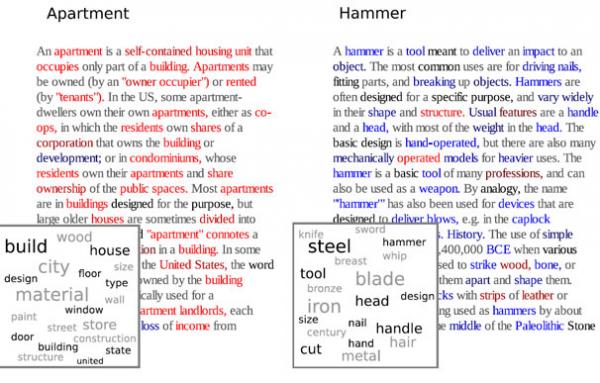

First, they used a Princeton-developed computer program to generate 40 possible topics from Wikipedia articles that contained words associated with those topics. They then created a color-coded system to show the probability that a given word was related to the object a volunteer thought about while reading a Wikipedia article during a brain scan.

In one case, a redder word showed that a person was more likely to associate it with "cow." A bright blue word suggested a strong connection to "carrot," and black or grey words had no specific association.
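For readers curious how such a color code might work, here is a minimal sketch in Python. It is not the researchers' software: the function name `word_color`, the two-score input and the linear mapping to red and blue are all assumptions made for illustration, and the example scores are invented rather than taken from the study.

```python
def word_color(p_cow: float, p_carrot: float) -> tuple[int, int, int]:
    """Map two association scores in [0, 1] to an (R, G, B) color.

    Hypothetical scheme: the red channel grows with the "cow" score,
    the blue channel grows with the "carrot" score, and a word with
    no association to either stays black.
    """
    for p in (p_cow, p_carrot):
        if not 0.0 <= p <= 1.0:
            raise ValueError("scores must lie in [0, 1]")
    red = round(255 * p_cow)
    blue = round(255 * p_carrot)
    return (red, 0, blue)


# Invented scores for illustration only: (cow score, carrot score).
example_scores = {
    "milk": (1.0, 0.0),
    "orange": (0.0, 1.0),
    "the": (0.0, 0.0),
}

for word, (p_cow, p_carrot) in example_scores.items():
    print(word, word_color(p_cow, p_carrot))
```

In this toy version a word tied only to "cow" comes out pure red, one tied only to "carrot" pure blue, and a word tied to neither stays black, mirroring the scheme described above.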

There are still limits. The researchers can tell whether participants were thinking of vegetables, but can't distinguish between "carrot" and "celery." They hope to make their method more sensitive to such details in the future.

This story was provided by InnovationNewsDaily, sister site to LiveScience. Follow InnovationNewsDaily on Twitter @News_Innovation, or on Facebook.
