Milestone: Transistor patented

Date: Oct. 3, 1950

Where: Bell Labs; Murray Hill, New Jersey

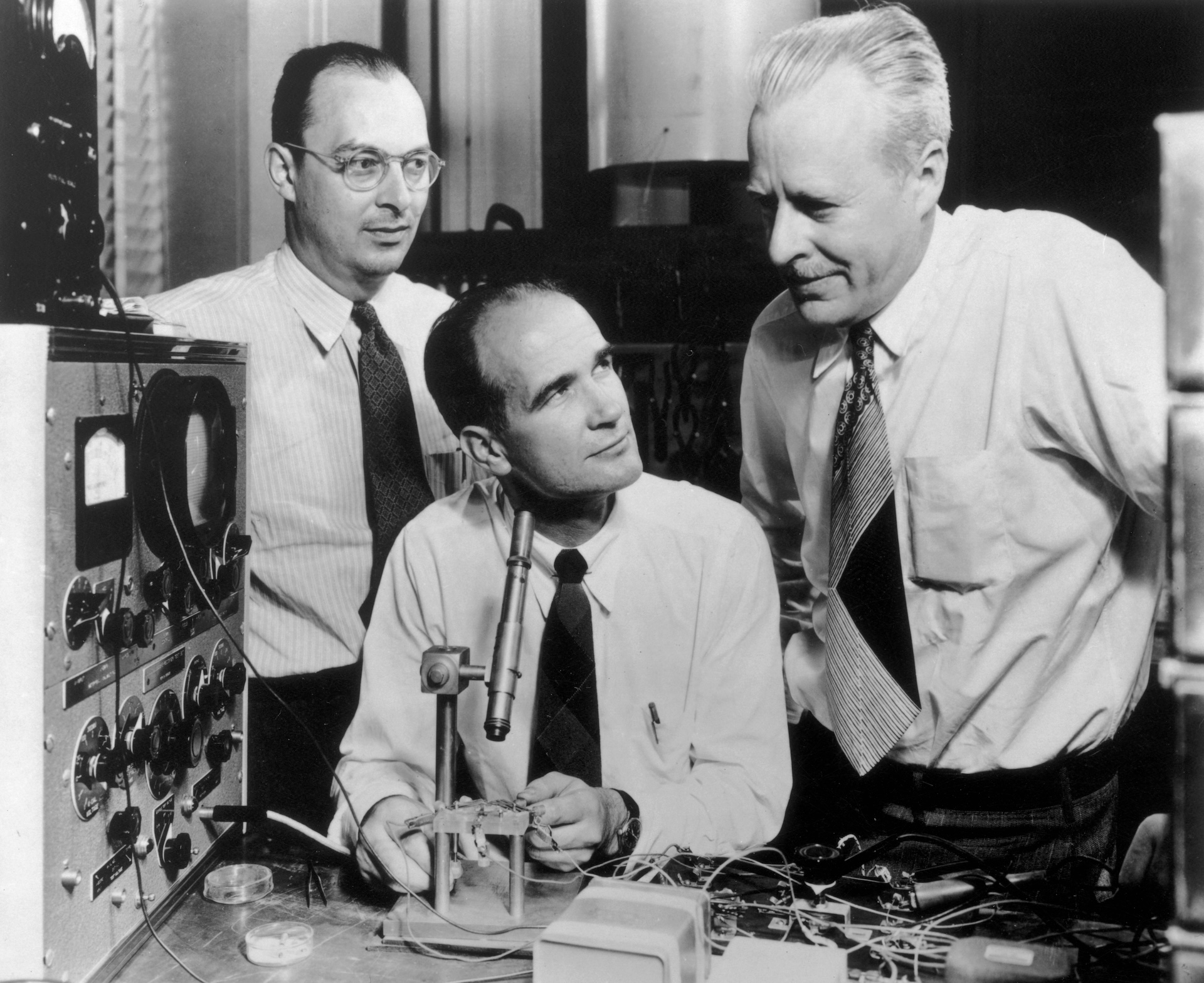

Who: John Bardeen, Walter Brattain and William Shockley

On Oct. 3, 1950, three scientists at Bell Labs in New Jersey received a U.S. patent for what would become one of the most important inventions of the 20th century — the transistor.

John Bardeen, Walter Brattain and William Shockley had submitted the patent application for a "three-electrode circuit element utilizing semiconductive materials" two years earlier, and it would be another few years before the full significance of this technology became clear.

The transistor was initially designed because AT&T wanted to improve its telephone network. At the time, AT&T amplified and transmitted phone signals using triodes. These devices encased a positive and negative terminal and a wire mesh in a vacuum tube, which ensured electrons could flow without bumping into air molecules.

But triodes were power hogs that often overheated, so in the 1930s, Mervin Kelly, then Bell Labs' director of research and later its president, began to look for alternatives. He was intrigued by the potential of semiconductors, which have electrical properties between those of insulators and conductors. In 1925, Julius Lilienfeld had filed a patent for a semiconductor precursor to the transistor, but it used copper sulfide, which was unreliable, and the underlying physics was poorly understood.

At the end of World War II, as the lab shifted its focus from war technology, Kelly recruited a team, led by Shockley, to find a replacement for vacuum-tube triodes. The team conducted a number of experiments, including plunging silicon into a hot thermos, with limited success. The problem was that they didn't get much amplification.

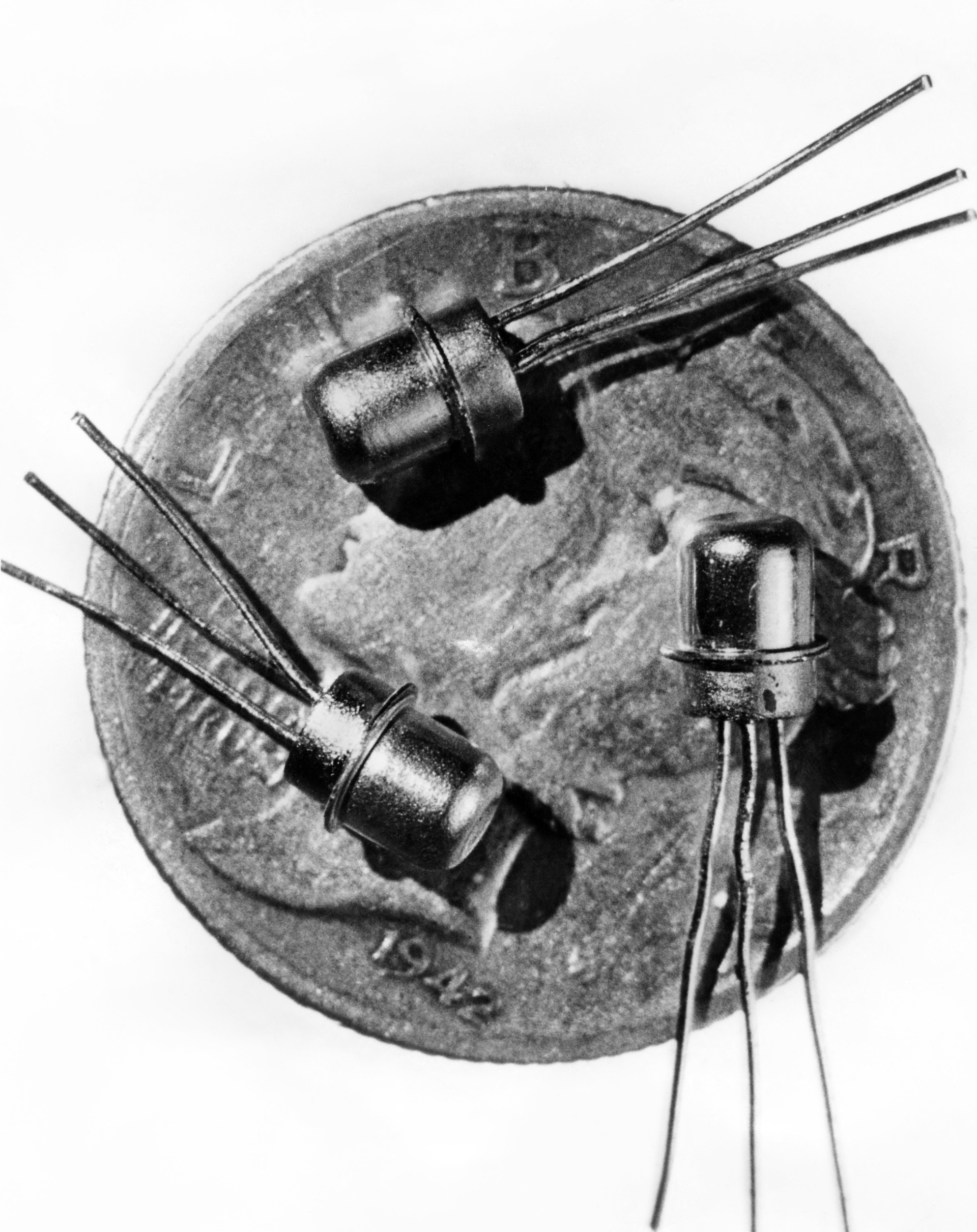

Then, in 1947, Brattain and Bardeen switched from silicon to germanium and helped clarify the physics at play in the semiconductor. Their work led to a "point-contact" transistor that used a little spring to press two thin slips of gold foil into a germanium slab. Notably, this early transistor took some finessing to work, requiring Brattain to wiggle things "just right" to get the impressive 100-fold amplification in signal.

In 1948, Shockley iterated on that design with what would later be termed the junction transistor, the subject of the patent that would go on to form the basis of most modern transistors.

The key to the technology is that when a voltage is applied to a semiconductor, electrons migrate within the material, leaving positively charged "holes" behind, according to the patent.

This makes it possible to create "N-type" or "P-type" semiconductors, regions that carry an excess of either negative or positive charge carriers. When a metal electrode contacts a semiconductor, current flows one way if the material is N-type and the opposite way if it is P-type, the patent noted.

The junction transistor takes advantage of this property with a semiconductor with three attached electrodes. By modifying the voltage applied and the properties of the electrodes and the semiconductor, it's possible to reliably amplify the current. This amplification would soon prove invaluable in radios, televisions and telephone networks.

But amplification isn't what ushered in the era of modern computing. Rather, the junction transistor was a tiny, reliable, low-power, "on-off" switch that didn't heat up much. Vacuum tubes were the switches in the first computers, and the transistor was just a much better on-off switch.
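To see why a reliable on-off switch is enough to build a computer, here is a minimal Python sketch that treats each transistor as an idealized switch. Two switches in series make a NAND gate, and NAND gates alone can be combined into the adder at the heart of binary arithmetic. The function names (`nand`, `xor`, `and_gate`, `half_adder`) are illustrative assumptions, not anything taken from the patent, and real transistors are analog devices with far messier behavior than this model suggests.

```python
def nand(a: bool, b: bool) -> bool:
    """Idealized NAND gate: two switches in series.

    The series path conducts only when both switches are on,
    which pulls the output low; otherwise the output stays high.
    """
    path_conducts = a and b
    return not path_conducts


def xor(a: bool, b: bool) -> bool:
    """Exclusive OR built from four NAND gates."""
    n = nand(a, b)
    return nand(nand(a, n), nand(b, n))


def and_gate(a: bool, b: bool) -> bool:
    """AND built from two NAND gates (a NAND followed by an inverter)."""
    n = nand(a, b)
    return nand(n, n)


def half_adder(a: bool, b: bool) -> tuple[bool, bool]:
    """Add two one-bit numbers; returns (sum_bit, carry_bit)."""
    return xor(a, b), and_gate(a, b)


if __name__ == "__main__":
    for a in (False, True):
        for b in (False, True):
            s, c = half_adder(a, b)
            print(f"{int(a)} + {int(b)} -> sum={int(s)} carry={int(c)}")
```

Every gate here reduces to the same `nand` primitive, which is the point: once a switch is small, cool and dependable, more complex logic is a matter of wiring many copies together.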

Shockley was a notoriously bad boss (and a eugenicist and racist). The key researchers went their separate ways, with Bardeen moving to the University of Illinois and Shockley helping to found the modern Silicon Valley semiconductor industry. The trio would win the 1956 Nobel Prize in physics for their work on the "transistor effect."

A few years later, physical chemist Morris Tanenbaum, who worked briefly under Shockley at Bell Labs, would invent the first silicon transistor. In 1959, Jack Kilby of Texas Instruments filed a patent for the first integrated circuit, which would form the basis for the modern computer chip. And by the early 1960s, the vacuum-tube computer was functionally extinct.

In 1965, Gordon Moore, who would later co-found Intel, observed in a magazine article that transistors were being miniaturized and the number of components on a chip was doubling at a predictable rate, ushering in the era of Moore's law, which would continue for decades.
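Steady doubling compounds faster than intuition suggests. The short Python sketch below works through the arithmetic, assuming a fixed two-year doubling period; that period is a commonly quoted figure used here for illustration, not one stated above, and the function name `growth_factor` is likewise hypothetical.

```python
def growth_factor(years: float, doubling_period_years: float = 2.0) -> float:
    """Return how many times larger a quantity is after `years` of steady doubling.

    The two-year default is an assumption for illustration only.
    """
    if doubling_period_years <= 0:
        raise ValueError("doubling_period_years must be positive")
    return 2.0 ** (years / doubling_period_years)


if __name__ == "__main__":
    for years in (10, 20, 40):
        print(f"{years} years -> about {growth_factor(years):,.0f}x")
```

Under that assumption, 10 years gives a 32-fold increase, 20 years gives 1,024-fold, and 40 years gives more than a million-fold (1,048,576).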

But with Moore's law now obsolete and AI demanding ever-more-powerful computing, scientists are betting that quantum computers, which can encode a combination of quantum states in a single qubit, or "quantum bit," will usher in the next era of computing.

Tia is the editor-in-chief (premium) and was formerly managing editor and senior writer for Live Science. Her work has appeared in Scientific American, Wired.com, Science News and other outlets. She holds a master's degree in bioengineering from the University of Washington, a graduate certificate in science writing from UC Santa Cruz and a bachelor's degree in mechanical engineering from the University of Texas at Austin. Tia was part of a team at the Milwaukee Journal Sentinel that published the Empty Cradles series on preterm births, which won multiple awards, including the 2012 Casey Medal for Meritorious Journalism.