Quantum record smashed as scientists build mammoth 6,000-qubit system — and it works at room temperature

A new system, made by splitting a laser beam into 12,000 tweezers and trapping 6,100 neutral atom qubits, hit new heights for coherence times.

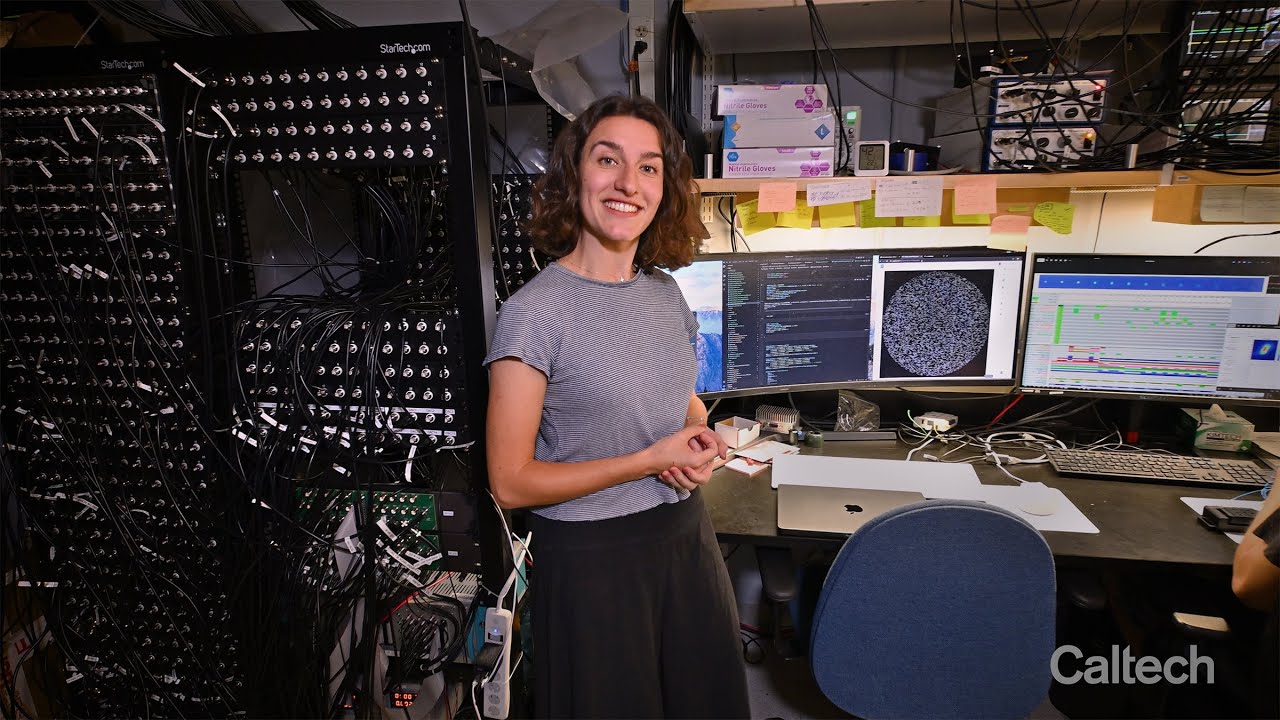

Scientists at Caltech have conducted a record-breaking experiment in which they synchronized 6,100 atoms in a quantum array. This research could lead to more robust, fault-tolerant quantum computers.

In the experiment, they used paired neutral atoms as the quantum bits (qubits) in a system and held them in a state of “superposition” to conduct quantum computations. To achieve this, the scientists split a laser beam into 12,000 "laser tweezers" which together held the 6,100 qubits.

As described in a new study published Sept. 24 in the journal Nature, the scientists not only set a new record for the number of atomic qubits placed in a single array, they also extended the "superposition" coherence time. This is the amount of time an atom remains available for computations or error-checking in a quantum computer, and they boosted that duration from just a few seconds to 12.6 seconds.

The study represents a significant step towards large-scale quantum computers capable of technological feats well beyond those of today’s fastest supercomputers, the scientists said in the study. They added that this research represents a key milestone in developing quantum computers that use neutral-atom architecture.

Neutral-atom qubits are advantageous because the system can operate at room temperature. By contrast, the most common type of qubit, made from superconducting metals, needs expensive and cumbersome equipment to cool the system down to temperatures close to absolute zero.

The road to quantum advantage

It’s widely believed that the development of useful quantum computers will demand systems with millions of qubits. This is because each functional, error-corrected qubit must be built from many physical qubits to provide fault tolerance.

Qubits are inherently "noisy," and tend to decohere easily when faced with external factors. As data is transferred through a quantum circuit, this decoherence distorts it, making the data potentially unusable. To counteract this noise, scientists must develop fault-tolerance techniques in tandem with methods for qubit expansion. It's the reason a huge amount of research has so far gone into quantum error correction (QEC).
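To illustrate why redundancy helps, here is a minimal sketch using a classical repetition code with majority voting. This is only an analogy: real QEC schemes are considerably more involved and this is not the method used in the study. The function name `majority_vote_error` and the 5% error rate are hypothetical choices made for illustration.

```python
from math import comb


def majority_vote_error(p: float, n: int) -> float:
    """Probability that a majority vote over n independent copies is wrong.

    p is the chance that any single copy is flipped (an assumed,
    illustrative figure, not a number from the study).
    n is the number of redundant copies and should be odd.
    """
    # The vote fails when more than half of the copies are flipped.
    return sum(
        comb(n, k) * p**k * (1 - p) ** (n - k)
        for k in range(n // 2 + 1, n + 1)
    )


if __name__ == "__main__":
    for copies in (1, 3, 5, 7):
        print(copies, majority_vote_error(0.05, copies))
```

Under these assumptions, each added pair of copies lowers the chance that the vote comes out wrong, which is the basic reason fault tolerance multiplies the number of qubits a useful machine needs.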

Many of today’s systems are considered functional, but most wouldn’t meet a minimum threshold for usefulness relative to a supercomputer. Quantum computers built by IBM, Google and Microsoft, for example, have successfully outperformed classical computers and demonstrated what’s often referred to as "quantum advantage."

But this advantage has been largely limited to bespoke computational problems designed to showcase the capabilities of a specific architecture — not practical problems. Scientists hope that quantum computers will become more useful as they scale in size and as the errors that occur in qubits are managed better.

"This is an exciting moment for neutral-atom quantum computing," said lead author Manuel Endres, professor of physics at Caltech and principal investigator on the research, in a statement. "We can now see a pathway to large error-corrected quantum computers. The building blocks are in place."

More notable than the sheer size of the qubit array are the techniques used to make the system scalable, the researchers said in the study. They fine-tuned previous efforts to make approximately 10-fold improvements in key areas such as coherence, superposition and the size of the array. Compared to previous efforts, they scaled from hundreds of qubits in a single array to more than 6,000 while maintaining 99.98% accuracy.

They also showed off a new technique for "shuttling" the array by moving the atoms hundreds of micrometers across the array without losing superposition. It’s possible that, with further development, the use of shuttling could provide a new dimension of instant error-correction, they said.

The team's next steps involve linking the atoms together within the array through a quantum-mechanical phenomenon called entanglement, which would enable full quantum computations. Scientists hope to exploit entanglement to develop stronger fault-tolerance methods with even more accurate error correction, they added. These techniques could prove crucial to achieving the next milestone on the road to useful, fault-tolerant quantum computers.

Tristan is a U.S.-based science and technology journalist. He covers artificial intelligence (AI), theoretical physics, and cutting-edge technology stories.

His work has been published in numerous outlets including Mother Jones, The Stack, The Next Web, and Undark Magazine.

Prior to journalism, Tristan served in the US Navy for 10 years as a programmer and engineer. When he isn’t writing, he enjoys gaming with his wife and studying military history.
