What is Moore’s Law and does this decades-old computing prophecy still hold true?

Moore's Law was an off-hand prediction that came to be one of the prevailing laws of modern computing — but what did it predict, and can we still rely on it?

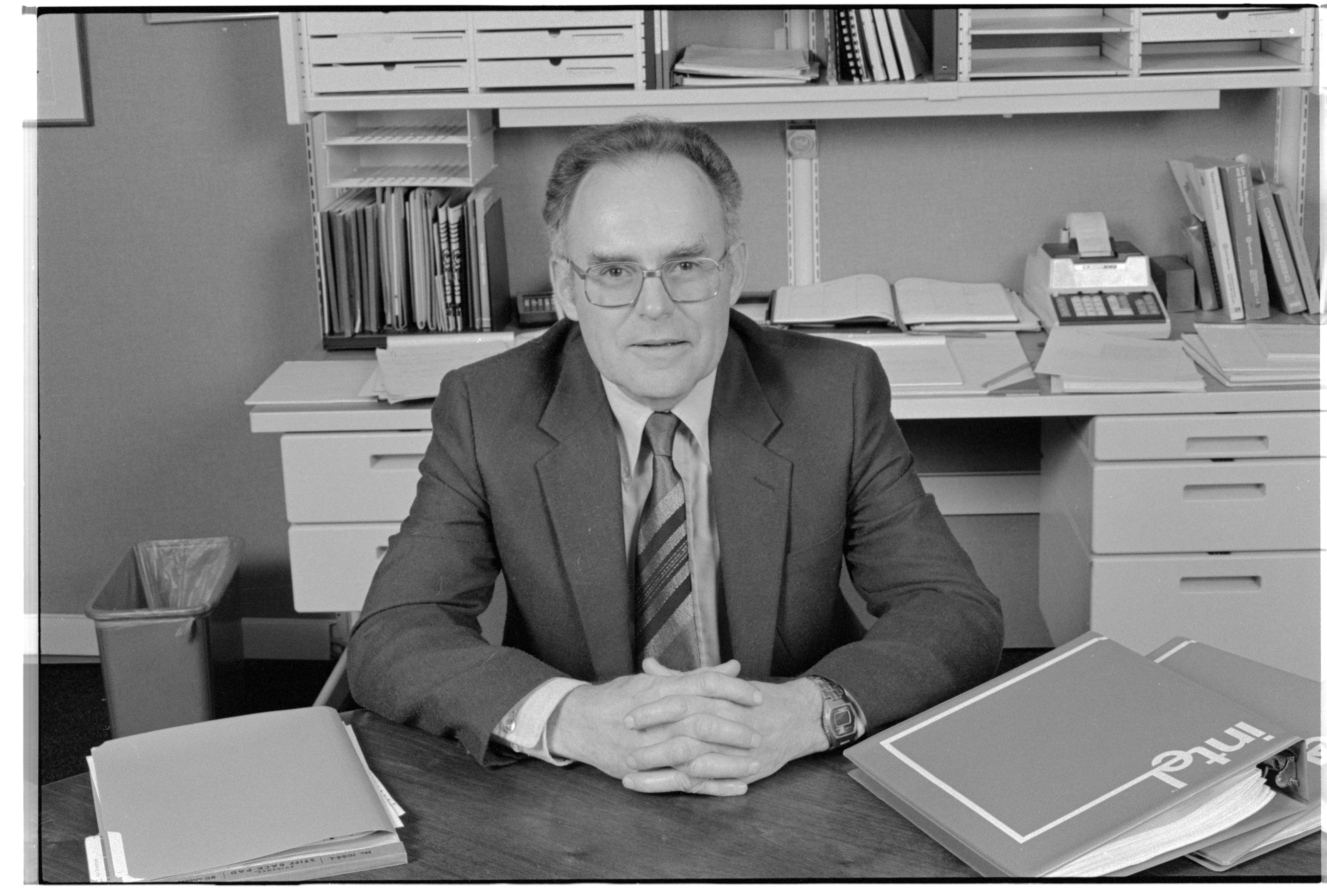

Gordon Moore wasn’t overly enthused when he was asked to write an article for the 35th-anniversary issue of Electronics magazine in 1965. "I was given the chore of predicting what would happen in silicon components in the next ten years," he recalled 40 years later. But as Director of R&D at Fairchild Semiconductor, which had developed the breakthrough planar transistor in 1959, he was perfectly placed to assess the progress that had been made in six short years.

In particular, Moore noticed that Fairchild had doubled the number of transistors it could place on a chip every year, squeezing 60 where there had once been two. He then "blindly extrapolated for about ten years and said, okay, in 1975 we’ll have about 60 thousand components on a chip." In other words, the number had doubled every year, and Moore thought it would keep doing so. His prediction was neat and easy to understand, but above all, it worked.

The idea was quickly dubbed Moore’s Law, and it mostly held true until 1975. (Strictly speaking, the number doubled nine times over those ten years rather than ten.) Seeing complications further down the line, Moore revised his prediction to a doubling every two years, and remarkably, it once again proved (roughly) accurate for the next 40 years.

His only mistake was that the real pace turned out to be faster: on average, the count doubled every 21 months.
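The doubling arithmetic in the last few paragraphs is easy to check for yourself. The short Python sketch below is purely illustrative: it assumes a 1965 starting point of 64 components (the nearest power of two to "about 60"), and `project` is a hypothetical helper rather than anything from Moore's paper.

```python
# Back-of-the-envelope check of Moore's doubling arithmetic.
# Assumption: the 1965 starting point is 64 components, the nearest
# power of two to "about 60". `project` is a hypothetical helper.

def project(count: float, years: float, doubling_period_years: float) -> float:
    """Grow `count` by one doubling every `doubling_period_years` for `years` years."""
    return count * 2 ** (years / doubling_period_years)


START_1965 = 64

# Moore's 1965 extrapolation: one doubling a year for ten years.
ten_doublings = project(START_1965, years=10, doubling_period_years=1)
# What the article says actually happened by 1975: nine doublings.
nine_doublings = START_1965 * 2 ** 9

# The revised law over 40 years: doubling every 24 months vs. every 21 months.
factor_24_months = project(1, years=40, doubling_period_years=24 / 12)
factor_21_months = project(1, years=40, doubling_period_years=21 / 12)

print(f"1975, ten doublings:  {ten_doublings:,.0f}")       # 65,536
print(f"1975, nine doublings: {nine_doublings:,.0f}")      # 32,768
print(f"40 years at 24 months: x{factor_24_months:,.0f}")  # x1,048,576
print(f"40 years at 21 months: x{factor_21_months:,.0f}")
```

Ten doublings from 64 lands at 65,536, close to Moore's "about 60 thousand", while nine doublings gives half that. Over 40 years, shaving three months off each doubling compounds to roughly a sevenfold difference.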

Moore’s self-fulfilling prophecy

One reason for the success of Moore’s prediction is that it became a guide, almost a target, for chip designers. This was especially true at Intel, the company Gordon Moore co-founded with Robert Noyce in 1968. Noyce had been one of the engineers behind the planar process, and together the two men saw a potential in integrated circuits that the cautious, recession-hit Fairchild did not.

In 1971, Intel had its first big hit: the 4004 microprocessor. It contained 2,300 transistors with features 10 microns across, five times slimmer than a strand of human hair. A little over ten years later, Intel introduced the 80286 processor, with 134,000 transistors whose features measured 1.5 microns (hence the term "1.5-micron process"). Both chips arrived very much in line with the revised Moore’s Law.
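One way to see how closely these two chips tracked the revised law is to work backwards from their transistor counts to the doubling period they imply. This is a sketch only: the launch years of 1971 and 1982 are approximations consistent with "a little over ten years later", and `implied_doubling_period` is a hypothetical helper.

```python
import math

# Assumption: launch years of 1971 (4004) and 1982 (80286); transistor
# counts are the figures quoted in the article.
INTEL_4004 = (1971, 2_300)
INTEL_80286 = (1982, 134_000)


def implied_doubling_period(start: tuple[int, int], end: tuple[int, int]) -> float:
    """Years per doubling needed to grow from `start` to `end`, given as (year, count)."""
    (year0, count0), (year1, count1) = start, end
    doublings = math.log2(count1 / count0)
    return (year1 - year0) / doublings


period = implied_doubling_period(INTEL_4004, INTEL_80286)
print(f"Implied doubling period: {period:.2f} years ({period * 12:.0f} months)")
```

Under these assumptions the result comes out at a little under two years, close to the two-year doubling of the revised law.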

When looking back over the years that followed — the 1980s, 1990s and early 2000s — it may seem like the path of progress was smooth. Moore’s Law kept holding, after all. But that was only possible due to a series of major breakthroughs, each solving a problem that at one time seemed impossible.

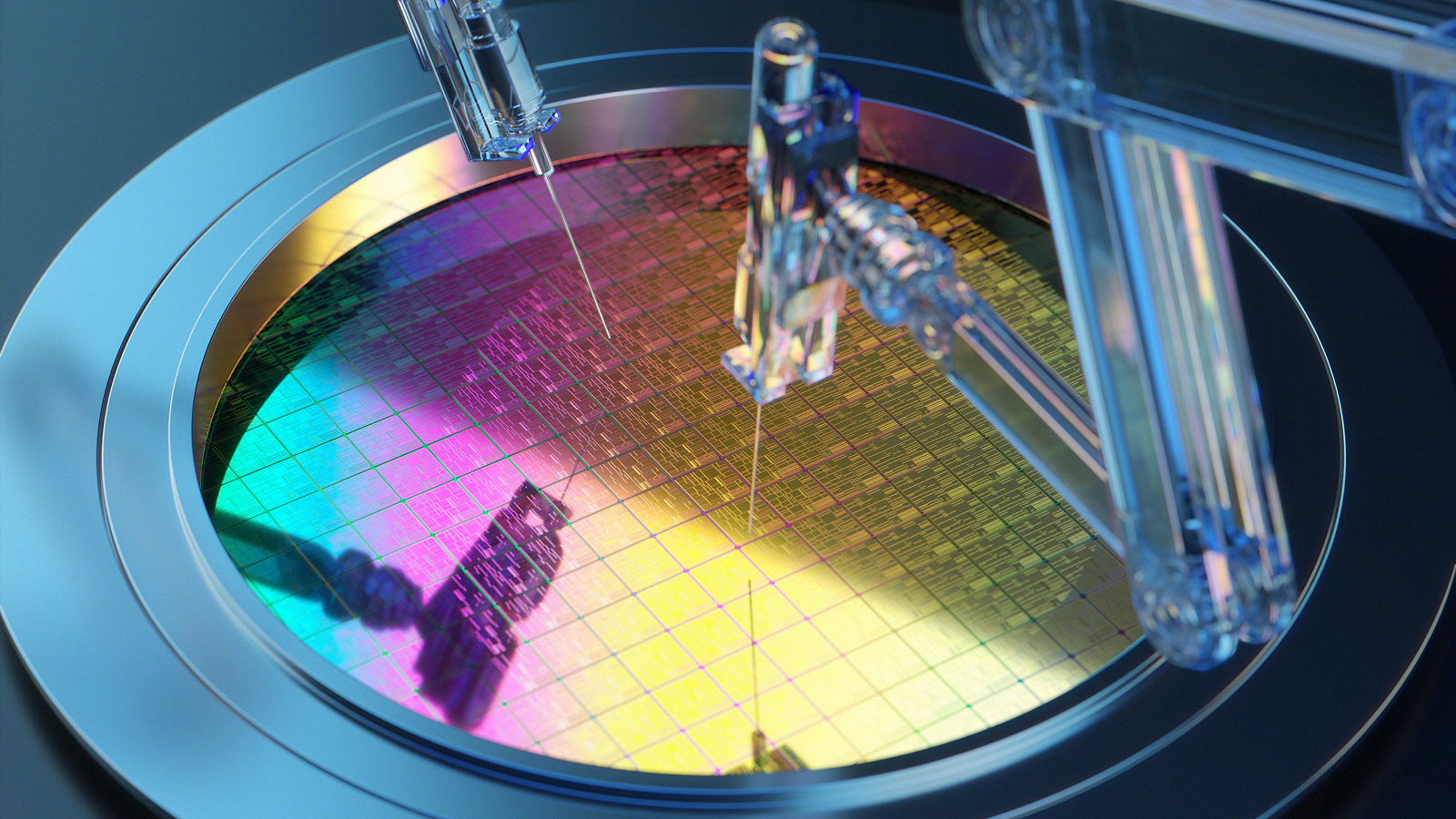

Some were based on materials science, such as improved "doping" methods, which insert impurities into a semiconductor to better control its conductivity, or the rise of complementary metal oxide semiconductor (CMOS) technology, which came to dominate chipmaking in the 1980s and brought lower power consumption and thus less heat. Other breakthroughs came in the manufacturing process, such as the development of extreme ultraviolet (EUV) lithography to print ever smaller features onto silicon wafers.

And the innovations didn’t stop. At the start of this article we mentioned the planar transistor, in which transistors sit on a level plane. Vertical designs took years of research and development (four Japanese researchers at Hitachi built the first, a transistor called DELTA, in 1989), but when the vertical FinFET transistor finally arrived, it gave Moore’s Law fresh life in 2012 in the form of Intel’s third-generation Core i3, i5 and i7 processors. These were made on a 22nm process and packed up to 1.4 billion transistors.

These are just a handful of the innovations that Gordon Moore could never have foreseen but that enabled his law to hold true. But one seemingly impossible problem was looming on the horizon: physics.

Why smaller isn't always better

A strand of hair is around 50 microns thick. A mote of dust around five microns. A bacterial cell, such as Mycoplasma, measures 0.5 microns. Now, consider that modern transistors are often 0.005 microns thick, or 5 nanometers (5nm) and you’ll realise we’re approaching atomic levels. We mean that literally: the space between the centre of two adjacent silicon atoms is around 0.235 nanometers, so you can squeeze around 21 into a 5nm space.

Then consider that the latest CPU manufacturing processes have shrunk further still, from 5nm to 2nm, leaving room for about eight silicon atoms. At this scale, quantum mechanical effects start to dominate, such as quantum tunneling, which causes electrons to leak through barriers that should block them. This is not a property you want in a transistor.
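The atom-counting in the two paragraphs above comes down to a single division. The sketch below follows the article in treating a node name such as "5nm" as a literal physical width, which is a simplification (modern node names are widely regarded as labels rather than measurements of any one feature), and counts whole 0.235 nm silicon-silicon spacings, as the text does. `atoms_across` is a hypothetical helper.

```python
# Assumption: node names are treated as literal widths, as in the article.
SILICON_ATOM_SPACING_NM = 0.235  # centre-to-centre distance quoted in the article


def atoms_across(width_nm: float, spacing_nm: float = SILICON_ATOM_SPACING_NM) -> int:
    """Whole number of atomic spacings that fit across `width_nm`."""
    return int(width_nm // spacing_nm)


for node_nm in (5, 2):
    print(f"{node_nm}nm -> about {atoms_across(node_nm)} silicon atoms")
# 5nm -> about 21 silicon atoms
# 2nm -> about 8 silicon atoms
```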

All of this means that the straightforward approach of "make things smaller" no longer works on its own. That’s why we have seen a shift away from pure miniaturization towards more sophisticated processors: the chips in almost every device you own now include multiple cores, so that tasks can be split between them.

Is Moore’s Law still relevant?

In the simplest sense, no. The days when we could double the number of transistors on a chip every two years are far behind us. However, Moore’s Law has acted as a pacesetter in a decades-long race to create chips that perform more complicated tasks more quickly, and our expectation of continual progress remains as strong as ever.

To measure its success, consider that if Moore’s Law had suggested a doubling every 10 years instead of every two, then we would be stuck with 1980s-era computers. Steve Jobs would never have been able to announce the iPhone in 2007, establishing the smartphone era.
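The counterfactual above comes down to compound growth. Assuming the clock starts with Moore's 1965 article and stops at the iPhone's 2007 announcement (both dates from the text), this sketch compares the total growth factor under a two-year and a ten-year doubling period. `growth_factor` is a hypothetical helper.

```python
def growth_factor(years: float, doubling_period_years: float) -> float:
    """Total multiplication after `years` years at one doubling per period."""
    return 2 ** (years / doubling_period_years)


YEARS = 2007 - 1965  # Moore's 1965 article to the iPhone announcement

every_two_years = growth_factor(YEARS, 2)   # 2**21
every_ten_years = growth_factor(YEARS, 10)  # 2**4.2

print(f"Doubling every 2 years:  x{every_two_years:,.0f}")  # x2,097,152
print(f"Doubling every 10 years: x{every_ten_years:,.1f}")
```

The two-year schedule multiplies transistor counts by about two million over that span; the ten-year schedule manages a factor of under 20.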

This pace-setting is now demanded not only by consumers but also by the boards of technology companies. It’s one of the drivers behind the neural processing units (NPUs) inside recent processors, which can run artificial intelligence (AI) tasks locally far more efficiently than conventional CPUs. For now, this technology handles simple tasks such as removing unwanted people from the background of our photos, but this is just the beginning.

NPUs are big news today, as are the incredible Nvidia-powered equivalents in data centers that drive ChatGPT, Midjourney and the other AI services we are gradually coming to rely on. Meanwhile, it seems that personal AI assistants are a heartbeat away, with even bigger leaps likely to come in the next decade.

We can’t yet be sure what those leaps will entail. What we can say is that the work is happening now in university research labs and in the R&D divisions of megacorporations such as Intel. One of those labs might yet find a way to cram more transistors into even smaller areas, or perhaps move away from transistors altogether, but that seems unlikely.

Instead, Moore’s Law lives on as an expectation of the pace of progress: one that every tech company, from DeepSeek to Meta to OpenAI, will continue to use as its guide.

Tim Danton is a journalist and editor who has been covering technology and innovation since 1999. He is currently the editor-in-chief of PC Pro, one of the U.K.'s leading technology magazines, and is the author of a computing history book called The Computers That Made Britain. He is currently working on a follow-up book that covers the very earliest computers, including The ENIAC. His work has also appeared in The Guardian, Which? and The Sunday Times. He lives in Buckinghamshire, U.K.
