What are neural processing units (NPUs) and why are they so important to modern computing?

Neural processing units (NPUs) are the latest chips you might find in smartphones and laptops, but what are they and why are they so important?


Ever since the dawn of computing, people have compared machines to brains. This includes two founding fathers of computing — John von Neumann wrote a book called "The Computer and the Brain" while Alan Turing was quoted in 1949 saying: "Eventually I do not see why [a computer] may not compete on equal terms with the human intellect in most fields."

The only problem with this comparison is that the traditional processor — the central processing unit (CPU) — doesn’t mimic the brain at all. CPUs are far too mathematical and logical. The neural processing unit (NPU), on the other hand, takes an entirely different approach: simulating the structure of the human brain in its very circuitry.

Yet mimicking the workings of the human brain electronically is far from a new idea.

The birth of NPUs

Literal electronic brains date back to the birth of modern-day computing in the mid-1940s, specifically to a "neural network" of circuitry created by neurophysiologist Warren McCulloch and logician Walter Pitts. McCulloch’s pioneering work spurred further research during the 1950s and 1960s, only for the idea to fall out of fashion — perhaps due to a lack of progress compared to the rising number-crunching power of classical computers.
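To make the idea concrete, here is a minimal Python sketch of a simplified McCulloch-Pitts unit, the kind of artificial neuron described in their 1943 paper. Each unit takes binary inputs, fires when enough excitatory inputs are active to reach a fixed threshold, and is blocked outright by any active inhibitory input. The function name `mp_neuron` is a hypothetical illustration, not code from any library.

```python
def mp_neuron(excitatory, inhibitory, threshold):
    """Simplified McCulloch-Pitts unit: binary inputs, binary output.

    Any active inhibitory input blocks firing; otherwise the unit fires (1)
    when the number of active excitatory inputs reaches the threshold.
    """
    if any(inhibitory):
        return 0
    return 1 if sum(excitatory) >= threshold else 0


# With two excitatory inputs, threshold 2 behaves like logical AND
# and threshold 1 behaves like logical OR.
for a in (0, 1):
    for b in (0, 1):
        print(a, b, "AND:", mp_neuron([a, b], [], 2), "OR:", mp_neuron([a, b], [], 1))
```

Wiring many such threshold units together is what made it possible to describe a "neural network" that computes logical functions.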

“There were a few isolated people in Japan and Germany [working on neural networks] but it was not a field,” Yann LeCun, a French-American computer scientist widely considered one of the ‘godfathers’ of AI, said of his time working with Geoffrey Hinton, another of the field’s pioneers, on neural networks in the early 1980s. “It started being a field again in 1986.”

Yet it took the success of speech recognition in the early 2000s for neural networks to regain their foothold as a respected part of computer science. Even then, LeCun said: “We didn’t want to use the word neuron nets because it had a bad reputation, so we changed the name to deep learning.”


The term NPU emerged in the late 1990s, but it has taken the deep pockets of Apple, IBM and Google to move the idea from university labs into the mainstream. These tech companies have invested billions of dollars in developing the silicon, crystallizing all the past work into a product that fits inside our phones and laptops: a processor that takes inspiration from the human brain. LeCun’s fortunes have also improved: he is now chief AI scientist at Meta.

How do NPUs work?

In some ways, the NPUs of today aren’t that different from the networks created by McCulloch and Pitts: their structure mimics the brain through a parallel architecture. This means that rather than tackling a problem in sequence, an NPU runs millions of small computations at the same time, adding up to trillions of operations every second. That throughput is what the term "tera operations per second," or TOPS, measures.
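As a rough illustration of where a TOPS figure comes from, a common back-of-the-envelope rule multiplies the number of multiply-accumulate (MAC) units by the clock speed and counts each MAC as two operations (one multiply, one add). The sketch below assumes that rule. The 16,384 units and 1.4 GHz clock are hypothetical numbers, not the specification of any real chip, and real workloads rarely reach this theoretical peak.

```python
def peak_tops(mac_units: int, clock_hz: float, ops_per_mac: int = 2) -> float:
    """Theoretical peak throughput in TOPS (trillions of operations per second).

    Assumes every MAC unit completes one multiply-accumulate per clock cycle,
    counted as two operations (one multiply plus one add).
    """
    ops_per_second = mac_units * ops_per_mac * clock_hz
    return ops_per_second / 1e12


# Hypothetical NPU: 16,384 MAC units clocked at 1.4 GHz (illustrative numbers only).
print(round(peak_tops(16_384, 1.4e9), 1))  # 45.9
```

The point of the arithmetic is that TOPS grows with the number of units working in parallel, not just with clock speed, which is why NPU designers pack in so many simple arithmetic units.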

But here’s where things get complicated. NPUs run deep learning models, which have already been trained on vast amounts of existing data. Take the example of edge detection in photos, which usually relies on convolutional neural networks (CNNs).

In a CNN, the convolution layer slides a filter (called a "kernel") over every area of the image, hunting for patterns that, thanks to its training, it recognizes as edges. At each position, the NPU multiplies the kernel's values by the pixels beneath it and adds up the results. This operation, repeated across the whole image, is called a convolution, and its output is a feature map showing where the pattern appears. The network repeats this process through successive layers until it is confident it has found the edges.
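The sliding multiply-and-add step described above can be sketched in a few lines of plain Python. One assumption to flag: instead of a kernel learned during training, this example uses the hand-designed Sobel kernel, a classic vertical-edge detector, so the result is easy to check by eye. As in most deep learning frameworks, the kernel is not flipped (strictly speaking, this is cross-correlation).

```python
# A tiny grayscale "image": dark on the left, bright on the right,
# so there is a vertical edge between columns 2 and 3.
image = [
    [0, 0, 0, 9, 9, 9],
    [0, 0, 0, 9, 9, 9],
    [0, 0, 0, 9, 9, 9],
    [0, 0, 0, 9, 9, 9],
]

# Sobel kernel for vertical edges (hand-designed; a CNN would learn its own).
kernel = [
    [-1, 0, 1],
    [-2, 0, 2],
    [-1, 0, 1],
]


def convolve2d(img, k):
    """Slide kernel k over img (no padding, stride 1) and return the feature map."""
    kh, kw = len(k), len(k[0])
    out_h = len(img) - kh + 1
    out_w = len(img[0]) - kw + 1
    feature_map = []
    for r in range(out_h):
        row = []
        for c in range(out_w):
            # One output value = sum of element-wise products (multiply-accumulates).
            acc = 0
            for i in range(kh):
                for j in range(kw):
                    acc += img[r + i][c + j] * k[i][j]
            row.append(acc)
        feature_map.append(row)
    return feature_map


for row in convolve2d(image, kernel):
    print(row)  # [0, 36, 36, 0] on each row
```

The large values in the middle of the feature map mark the columns where brightness jumps, while flat regions score zero. Every output value is computed independently of the others, which is why this work maps so well onto parallel hardware.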

NPUs are outstanding at performing convolution operations, executing them at great speed and with low power demands, especially when compared with CPUs. Graphics processing units (GPUs) also use parallel processing, but they are less optimized for this task and therefore less efficient. That drop in efficiency makes a big difference when it comes to the battery life of our devices.
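To get a feel for how much arithmetic a single convolution layer demands, the sketch below counts its multiply-accumulates with the standard formula (output height × output width × output channels × kernel height × kernel width × input channels). The layer dimensions are a hypothetical example of an early image-model layer, and the timing assumes a 45 TOPS NPU running at its theoretical peak while ignoring memory traffic, so treat it as a lower bound rather than a benchmark.

```python
def conv_layer_macs(out_h, out_w, out_channels, kernel_h, kernel_w, in_channels):
    """Multiply-accumulates needed by one standard 2D convolution layer.

    Every output value needs kernel_h * kernel_w * in_channels MACs, and every
    output value can be computed independently of the others.
    """
    outputs = out_h * out_w * out_channels
    return outputs * kernel_h * kernel_w * in_channels


# Hypothetical layer: 224x224 output, 64 filters, 3x3 kernels over an RGB input.
macs = conv_layer_macs(224, 224, 64, 3, 3, 3)
ops = 2 * macs                 # one multiply + one add per MAC
seconds_at_peak = ops / 45e12  # a 45 TOPS NPU running flat out (idealized)

print(macs)                                        # 86704128
print(f"{seconds_at_peak * 1e6:.2f} microseconds")  # 3.85 microseconds
```

Tens of millions of independent multiply-adds for a single layer is exactly the kind of workload where dedicated parallel hardware pays off, both in speed and in energy per operation.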

What are NPUs now used for?

Perhaps surprisingly, the first phones to include an NPU date back to 2017. That’s when Huawei released the Mate 10 and Apple debuted its A11 Bionic chipset in the iPhone X. But neither of these NPUs was very powerful — each having less than 1 TOPS compared to the 45 TOPS NPUs in a modern-day Qualcomm Snapdragon X chipset, fitted into our best laptops. It has also taken several years for applications to appear that can take advantage of the chips’ unique structure.

Yet just eight years later, AI applications are everywhere. For example, if you own a recent phone that offers to remove people from photos, that feature almost certainly uses an NPU. Likewise, NPUs help power Google’s "Circle to Search" and "Add Me" features; the latter uses a form of augmented reality (AR) to place you in a photo after you’ve already taken the original shot.

NPUs have now spread to laptops too. Last year, Microsoft announced "a new category of Windows PCs designed for AI, Copilot+ PCs." These required NPUs with at least 40 TOPS, which, unfortunately for AMD and Intel (whose early NPUs ran at only 15 TOPS), ruled them out of the race. But their loss was Qualcomm’s gain, as all of its Snapdragon X processors exceeded that threshold with NPUs rated at 45 TOPS. Models that take advantage of these new chips include the Microsoft Surface Laptop and Snapdragon versions of the Acer Swift AI series.

Both AMD and Intel have now released chips that meet Microsoft’s minimum requirements, meaning far more laptops are on the market with the "Copilot+ PC" branding. Yet there’s a sting in the tail: more affordable laptops (those costing less than $800) are still likely to use lesser processors that don’t meet the Copilot+ PC criteria.

What are the best Copilot+ PC features?

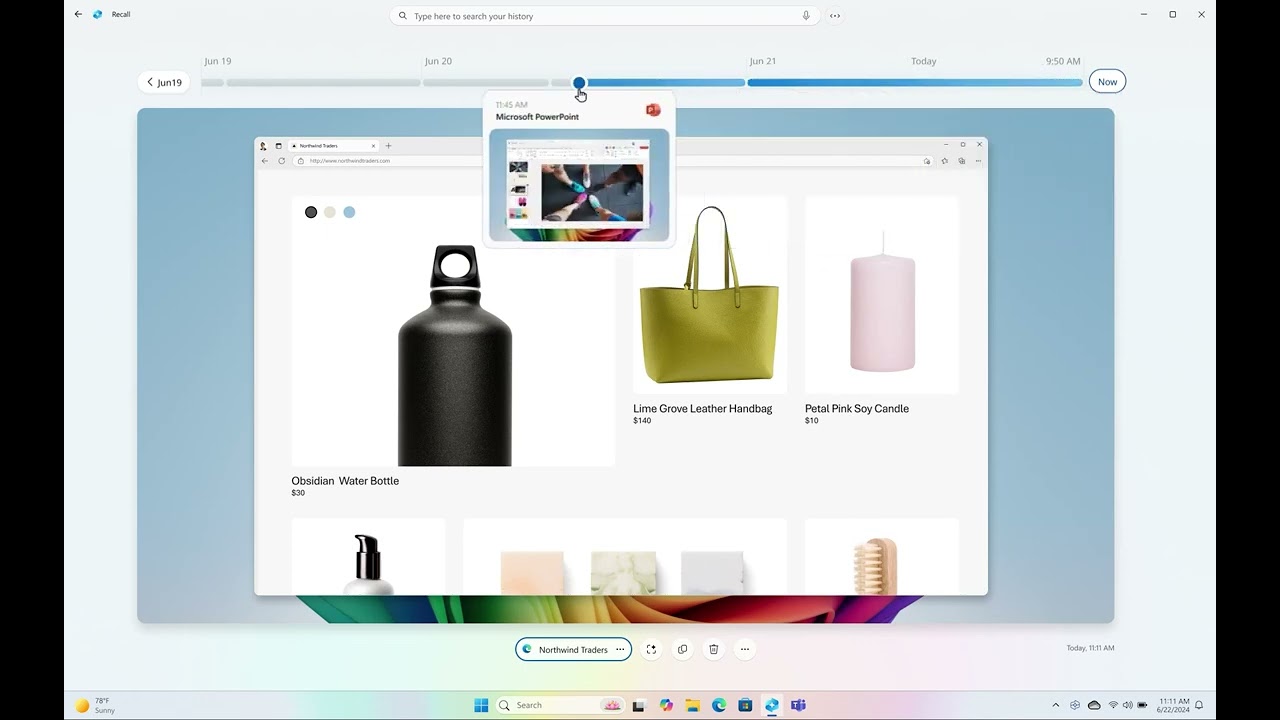

But why should you pay more for a Copilot+ PC? Microsoft hopes to tempt you with a number of exclusive features, and frankly, the most impressive one is also the most controversial. Called Recall, this promises a "photographic memory" that enables you to rediscover something you’ve previously seen in Windows 11.

Each snapshot taken by Recall is analysed by the NPU, which uses context, optical character recognition (OCR) and sentiment analysis to create an index that you can then search; Recall then takes you back in time through a visual timeline. The feature had a shaky launch, when it was attacked for its lack of security and of user control over which snapshots were stored, and Microsoft said it spent more time reworking it to be more secure.
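The "searchable index" idea can be illustrated with a toy keyword index. This is a hypothetical sketch, not how Recall is actually built: it assumes the OCR step has already turned each snapshot into text, uses made-up timestamps and screen text, performs no OCR or sentiment analysis, and does not run on an NPU. It simply maps each word to the snapshots that contain it and returns matches in time order, like a timeline.

```python
from collections import defaultdict

# Hypothetical OCR output: snapshot timestamp -> text read from the screen.
snapshots = {
    "2025-03-01 09:15": "Quarterly budget spreadsheet draft",
    "2025-03-01 11:40": "Flight booking to Lisbon confirmation",
    "2025-03-02 14:05": "Budget review meeting notes",
}


def build_index(snaps):
    """Map each lower-cased word to the set of snapshot timestamps containing it."""
    index = defaultdict(set)
    for timestamp, text in snaps.items():
        for word in text.lower().split():
            index[word].add(timestamp)
    return index


def search(index, query):
    """Return snapshots containing every query word, oldest first (timeline order)."""
    words = query.lower().split()
    if not words:
        return []
    hits = set.intersection(*(index.get(w, set()) for w in words))
    return sorted(hits)


index = build_index(snapshots)
print(search(index, "budget"))  # ['2025-03-01 09:15', '2025-03-02 14:05']
```

A real system would need far richer matching than exact keywords; the sketch only shows why building the index up front makes later searches fast.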

Other features build upon what has come before. Image Creator uses the NPU to turn text into images, an enhanced version of Windows Studio Effects adds creative filters to your video calls and Live Captions deploys the NPU to translate any video you’re watching.

Companies like Acer, HP and Lenovo have released their own local AI tools that can analyse documents stored on your PC and provide summaries and sentiment analysis. Such tools are only likely to improve over time.

What’s likely to happen next with NPUs?

For the next few years, some AI experts contend that NPUs will follow a similar path to CPUs in their early days — something close to Moore’s Law, with a doubling of TOPS every year or two. With that power will come far greater abilities, to the point where you can create realistic AI artwork locally on your computer rather than resorting to programs such as Midjourney.
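The doubling scenario is simple compound-growth arithmetic. The sketch below applies it to the 45 TOPS NPUs in today's Snapdragon X chips, assuming a doubling every one or two years as the experts suggest. It is a worked calculation under that assumption, not a forecast for any real product line.

```python
def projected_tops(start_tops: float, years: float, doubling_period_years: float) -> float:
    """TOPS after `years` if performance doubles every `doubling_period_years`."""
    return start_tops * 2 ** (years / doubling_period_years)


# Starting point: 45 TOPS. Pure arithmetic under the doubling assumption.
for period in (1, 2):
    print(f"doubling every {period} yr: {projected_tops(45, 6, period):.0f} TOPS after 6 years")
# doubling every 1 yr: 2880 TOPS after 6 years
# doubling every 2 yr: 360 TOPS after 6 years
```

The gap between the two lines shows how sensitive such projections are to the assumed doubling period.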

Over time, as software matures along with the hardware and more developers take advantage of it, we expect to see the emergence of personal AI agents that understand us because they have been "living" inside our computers as we work. They won’t just serve as memory joggers; they will also perform actions on our behalf.

NPUs will also likely find a home in more devices than our phones and laptops. TVs will produce personalized news services using your favourite avatar presenter; your fitness tracker will recommend workouts based on your mood and the time until your next meeting. Who knows, your best friend may one day be a humanoid robot who understands you better than any human.

Tim Danton is a journalist and editor who has been covering technology and innovation since 1999. He is currently the editor-in-chief of PC Pro, one of the U.K.'s leading technology magazines, and is the author of a computing history book called The Computers That Made Britain. He is currently working on a follow-up book that covers the very earliest computers, including The ENIAC. His work has also appeared in The Guardian, Which? and The Sunday Times. He lives in Buckinghamshire, U.K.
