Deepfake AI: Our Dystopian Present

Reference Article: Facts about deepfake AI.

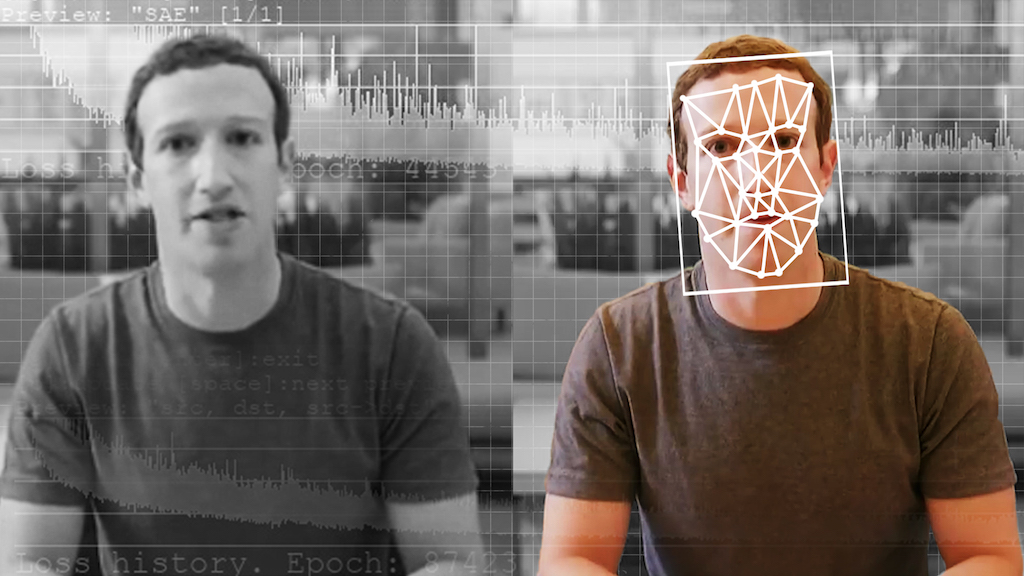

Of all the scary powers of the internet, its ability to trick the unsuspecting might be the most frightening. Clickbait, photoshopped pictures and false news are some of the worst offenders, but recent years have also seen the rise of a new, potentially dangerous tool known as deepfake artificial intelligence (AI).

The term deepfake refers to counterfeit, computer-generated video and audio that is hard to distinguish from genuine, unaltered content. Deepfakes are to video what Photoshop is to images.

How does deepfake AI work?

The tool relies on what are known as generative adversarial networks (GANs), a technique invented in 2014 by Ian Goodfellow, then a Ph.D. student, who now works at Apple, Popular Mechanics reported.

The GAN algorithm involves two separate AIs: one that generates content (say, photos of people) and an adversary that tries to guess whether the images are real or fake, according to Vox. The generating AI starts off with almost no idea how people look, meaning its partner can easily distinguish true photos from false ones. But over time, each AI gets progressively better, and eventually the generating AI begins producing content that looks convincingly lifelike.
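To make the back-and-forth between the two AIs concrete, here is a minimal sketch of an adversarial training loop. It is a toy, not how real deepfake systems are built: instead of photos, the "real" data are single numbers drawn from a bell curve, the generator is a straight-line function, and the discriminator is a one-variable logistic classifier. The function name train_toy_gan, its parameters and all default values are assumptions chosen for illustration.

```python
import math
import random


def sigmoid(t):
    # Numerically stable logistic function, returns a value in (0, 1).
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    e = math.exp(t)
    return e / (1.0 + e)


def train_toy_gan(steps=2000, batch=32, lr=0.01,
                  real_mean=4.0, real_std=1.0, seed=0):
    """Train a toy one-dimensional GAN and return its four parameters."""
    rng = random.Random(seed)
    # Generator: G(z) = a*z + b turns random noise z into a fake sample.
    a, b = 1.0, 0.0
    # Discriminator: D(x) = sigmoid(w*x + c) is its estimate that x is real.
    w, c = 0.0, 0.0

    for _ in range(steps):
        real = [rng.gauss(real_mean, real_std) for _ in range(batch)]
        noise = [rng.gauss(0.0, 1.0) for _ in range(batch)]
        fake = [a * z + b for z in noise]

        # Discriminator step: gradient ascent on
        # log D(real) + log(1 - D(fake)), i.e. get better at telling them apart.
        grad_w = grad_c = 0.0
        for x in real:
            d = sigmoid(w * x + c)
            grad_w += (1.0 - d) * x
            grad_c += (1.0 - d)
        for x in fake:
            d = sigmoid(w * x + c)
            grad_w -= d * x
            grad_c -= d
        w += lr * grad_w / batch
        c += lr * grad_c / batch

        # Generator step: gradient ascent on log D(fake),
        # i.e. adjust the fakes so the discriminator rates them as more real.
        grad_a = grad_b = 0.0
        for z in noise:
            d = sigmoid(w * (a * z + b) + c)
            grad_a += (1.0 - d) * w * z
            grad_b += (1.0 - d) * w
        a += lr * grad_a / batch
        b += lr * grad_b / batch

    return {"a": a, "b": b, "w": w, "c": c}


if __name__ == "__main__":
    params = train_toy_gan()
    print("generator offset b:", params["b"])
```

The two update steps alternate, which mirrors the description above: the discriminator is rewarded for separating real from fake, and the generator is rewarded for fooling it. Real systems replace both straight-line functions with deep neural networks and train on images or audio, and their training is often far less stable than this sketch suggests.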

Deepfake examples

GANs are impressive tools and aren't always used for malicious purposes. In 2018, a GAN-generated painting imitating the Dutch "Old Master" style of artists like the 17th-century painter Rembrandt van Rijn sold at Christie's auction house for an incredible $432,500.

But when GANs are trained on video and audio files, they can produce some truly disturbing material. In 2017, researchers from the University of Washington in Seattle trained an AI to alter a video of former President Barack Obama so that his lips moved in sync with words from a totally different speech. The work was published in the journal ACM Transactions on Graphics (TOG).

That same year, deepfakes rose to widespread prominence, mainly through a Reddit user who went by the name "deepfakes," Vice reported. The GAN technique was often used to place the faces of celebrities, including Gal Gadot, Maisie Williams and Taylor Swift, onto the bodies of pornographic-film actresses.

Other GANs have learned to take a single image of a person and create fairly realistic alternative photos or videos of that person. In 2019, one such system generated creepy but realistic videos of the Mona Lisa talking, moving and smiling in different positions.

Deepfakes can also alter audio content. As reported by The Verge earlier this year, the technique can splice new words into a video of a person talking, making it appear that they said something they never actually said.

The ease with which the new tool can be deployed has potentially frightening ramifications. If anyone, anywhere can make realistic films showing a celebrity or politician speaking, moving and saying words they never said, viewers are forced to become more wary of content on the internet. As an example, just listen to President Obama warning against an "effed-up" dystopian future in this video from BuzzFeed, created using deepfake technology by filmmaker Jordan Peele.

Additional resources:

- Learn how you can help Google with its deepfake detection research.

- Watch: "Deepfake Videos Are Getting Terrifyingly Real," from NOVA PBS.

- Read more about deepfake AI and possible legal solutions from The Brookings Institution.

Adam Mann is a freelance journalist with over a decade of experience, specializing in astronomy and physics stories. He has a bachelor's degree in astrophysics from UC Berkeley. His work has appeared in the New Yorker, New York Times, National Geographic, Wall Street Journal, Wired, Nature, Science, and many other places. He lives in Oakland, California, where he enjoys riding his bike.
