Freaky Robot Is a Real Einstein

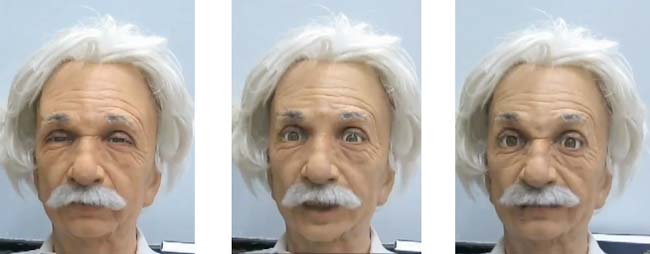

Albert Einstein is practically alive and smiling in the guise of a new robot that looks eerily like the great scientist and generates facial expressions that take robotics to a new level.

"As far as we know, no other research group has used machine learning to teach a robot to make realistic facial expressions," said Tingfan Wu, a computer science graduate student from the University of California, San Diego (UCSD).

To teach the robot, the team connected tiny motors via strings to about 30 of its facial muscles. Programmers then directed the Albert Einstein head to twist and turn its face in numerous directions, a process called "body babbling," which is similar to the random movements that infants use when learning to control their bodies and reach for objects.

The researchers made a video of the robot's expressions, which are disturbingly lifelike.

{{ video="LS_090710_einstein" title="Hey, Einstein! Is That You?" caption="A hyper-realistic Einstein robot at the University of California, San Diego has learned to smile and make facial expressions through a process of self-guided learning. [Story] Credit: UCSD" }}

During body babbling, the Einstein robot could see itself in a mirror and analyze its movements using facial expression detection software created at UCSD and called CERT (Computer Expression Recognition Toolbox). The robot used this data as an input for its machine learning algorithms and created a map between its facial expressions and the movements of its muscle motors.
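The idea of learning a map from babbled movements can be illustrated with a toy sketch. This is not the UCSD team's code or algorithm: the two-motor "face," its linear response, the coefficients, and every function name below are hypothetical, and `observe_expression` merely stands in for the mirror plus the expression detection software. The sketch babbles random motor commands, fits a least-squares map from motors to observed expression features, then inverts that map to find motor commands for a target expression.

```python
import random

# Hypothetical stand-in for the physical face plus the expression detector:
# maps two motor commands to two observed expression features.
# The linear response and its coefficients are invented for illustration.
def observe_expression(motors):
    brow_motor, lip_motor = motors
    brow_lowering = 0.8 * brow_motor + 0.1 * lip_motor
    smile = -0.2 * brow_motor + 0.9 * lip_motor
    return (brow_lowering, smile)

def babble(n_samples, rng):
    """Issue random motor commands and record what the 'mirror' shows."""
    samples = []
    for _ in range(n_samples):
        motors = (rng.uniform(-1, 1), rng.uniform(-1, 1))
        samples.append((motors, observe_expression(motors)))
    return samples

def fit_feature(samples, index):
    """Least-squares fit of one feature as w1*m1 + w2*m2 (normal equations)."""
    s11 = s12 = s22 = b1 = b2 = 0.0
    for (m1, m2), features in samples:
        y = features[index]
        s11 += m1 * m1
        s12 += m1 * m2
        s22 += m2 * m2
        b1 += m1 * y
        b2 += m2 * y
    det = s11 * s22 - s12 * s12
    return ((b1 * s22 - b2 * s12) / det, (s11 * b2 - s12 * b1) / det)

def motors_for(target, model):
    """Invert the learned 2x2 map to get motor commands for a target expression."""
    (a, b), (c, d) = model
    det = a * d - b * c
    t1, t2 = target
    return ((d * t1 - b * t2) / det, (a * t2 - c * t1) / det)

rng = random.Random(0)
samples = babble(200, rng)
model = (fit_feature(samples, 0), fit_feature(samples, 1))

target = (0.5, -0.3)  # a target chosen independently of the babbled samples
achieved = observe_expression(motors_for(target, model))
error = max(abs(a - t) for a, t in zip(achieved, target))
```

Because the toy face is exactly linear and noise-free, the fitted map reproduces the target almost perfectly. A real face with about 30 motors, skin that deforms nonlinearly, and a noisy detector would need a richer model and far more babbling.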

After creating the basic facial expression map, the robot learned to make new facial expressions, ranging from angry to surprised to sad, that it had never encountered before. For instance, the Einstein robot learned to narrow its eyebrows, a movement in which the inner eyebrows move together and the upper eyelids close slightly.

The robot head probably isn't realistic enough to pass for a real-life Einstein, and the researchers noted that some of the robot's facial expressions are still awkward.

The interactions between facial muscles and skin in humans are almost certainly more complex than their robotic model, Wu said. Currently, a trained person must manually configure the motors to pull the facial muscles in the right combinations so that the body babbling can occur. In the future, the team hopes to automate more of the process.

Still, the researchers hope their work will lead to insight into how humans learn facial expressions. The researchers admit that the body babbling method may not be the most efficient way for a robot to learn to smile and frown, so they are exploring other approaches too.

"Currently, we are working on a more accurate facial expression generation model, as well as a systematic way to explore the model space efficiently," Wu said.

Wu's findings were presented on June 6 at the IEEE International Conference on Development and Learning in Shanghai, China. Javier Movellan, director of UCSD's Machine Perception Laboratory, was the senior author on the presented research.
