'Killer Robot' Lab Faces Boycott from Artificial Intelligence Experts

The artificial intelligence (AI) community has a clear message for researchers in South Korea: Don't make killer robots.

Nearly 60 AI and robotics experts from almost 30 countries have signed an open letter calling for a boycott against KAIST, a public university in Daejeon, South Korea, that has been reported to be "develop[ing] artificial intelligence technologies to be applied to military weapons, joining the global competition to develop autonomous arms," the open letter said.

In other words, KAIST might be researching how to make military-grade AI weapons.

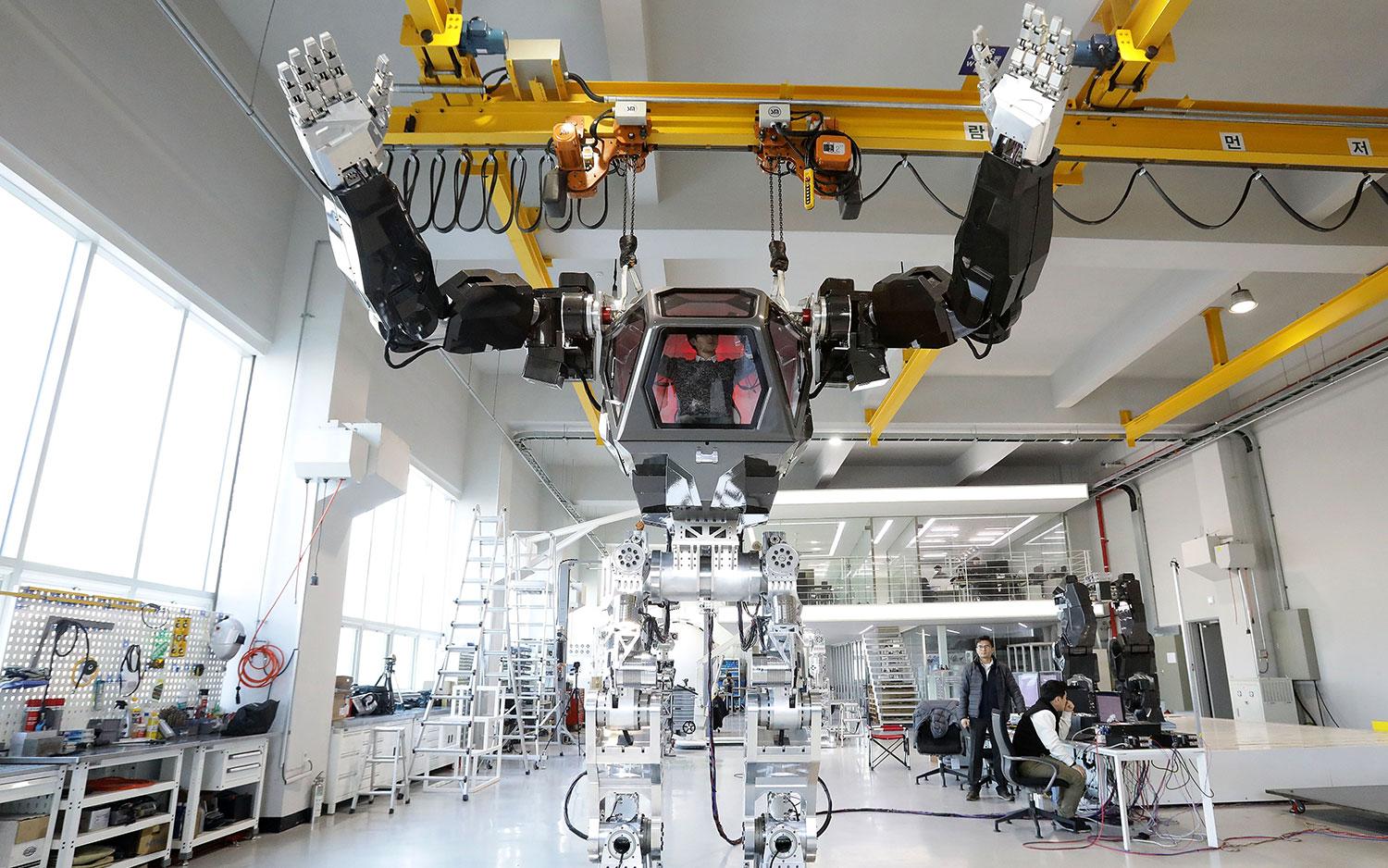

According to the open letter, AI experts the world over became concerned when they learned that KAIST — in collaboration with Hanwha Systems, South Korea's leading arms company — opened a new facility on Feb. 20 called the Research Center for the Convergence of National Defense and Artificial Intelligence.

Given that the United Nations (U.N.) is already discussing how to safeguard the international community against killer AI robots, "it is regrettable that a prestigious institution like KAIST looks to accelerate the arms race to develop such weapons," the researchers wrote in the letter.

To strongly discourage KAIST's new mission, the researchers are boycotting the university until its president makes clear that the center will not develop "autonomous weapons lacking meaningful human control," the letter writers said.

This boycott will be all-encompassing. "We will, for example, not visit KAIST, host visitors from KAIST or contribute to any research project involving KAIST," the researchers said.

If KAIST continues to pursue the development of autonomous weapons, it could lead to a third revolution in warfare, the researchers said. These weapons "have the potential to be weapons of terror," and their development could encourage war to be fought faster and on a greater scale, they said.

Despots and terrorists who acquire these weapons could use them against innocent populations, removing any ethical constraints that regular fighters might face, the researchers added.

Bans on deadly technologies aren't new. For instance, the Geneva Conventions prohibit armed forces from using blinding laser weapons directly against people, Live Science previously reported. In addition, nerve agents such as sarin and VX are banned by the Chemical Weapons Convention, in which more than 190 nations participate.

However, not every country agrees to blanket protections such as these. Hanwha, the company partnering with KAIST, helps produce cluster munitions. Such munitions are prohibited under the U.N. Convention on Cluster Munitions, and more than 100 nations (although not South Korea) have signed the convention against them, the researchers said.

Hanwha has faced repercussions for its actions; on ethical grounds, Norway's publicly distributed $380 billion pension fund does not invest in Hanwha's stock, the researchers said.

Rather than working on autonomous killing technologies, KAIST should work on AI devices that improve, not harm, human lives, the researchers said.

Meanwhile, others, including Elon Musk and the late Stephen Hawking, have warned for years against killer AI robots.

Original article on Live Science.

Laura is the managing editor at Live Science. She also runs the archaeology section and the Life's Little Mysteries series. Her work has appeared in The New York Times, Scholastic, Popular Science and Spectrum, a site on autism research. She has won multiple awards from the Society of Professional Journalists and the Washington Newspaper Publishers Association for her reporting at a weekly newspaper near Seattle. Laura holds a bachelor's degree in English literature and psychology from Washington University in St. Louis and a master's degree in science writing from NYU.
