Stephen Hawking Thinks These 3 Things Could Destroy Humanity

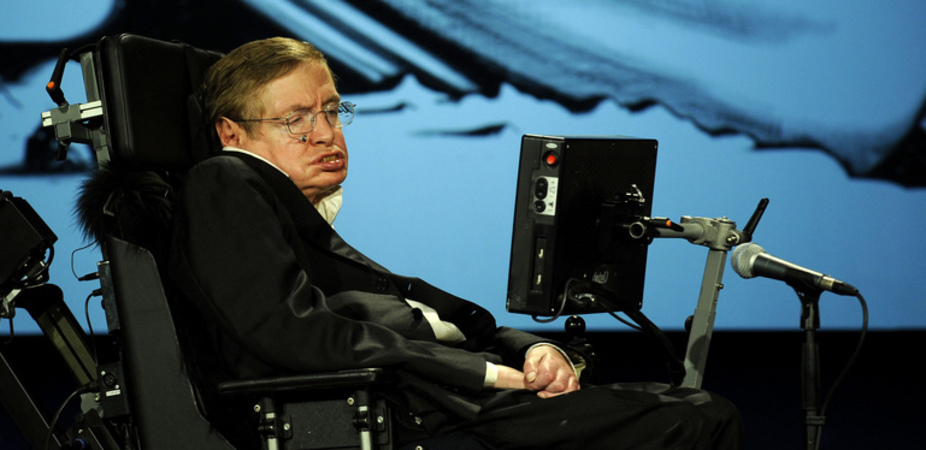

Stephen Hawking may be most famous for his work on black holes and gravitational singularities, but the world-renowned physicist has also become known for his outspoken ideas about things that could destroy human civilization.

Hawking suffers from a motor neuron disease similar to amyotrophic lateral sclerosis, or ALS, which left him paralyzed and unable to speak without a voice synthesizer. But that hasn't stopped the University of Cambridge professor from making proclamations about the wide range of dangers humanity faces — including ourselves.

Here are a few things Hawking has said could bring about the demise of human civilization.

Artificial intelligence

Hawking is part of a small but growing group of scientists who have expressed concerns about "strong" artificial intelligence (AI) — intelligence that could equal or exceed that of a human.

"The development of full artificial intelligence could spell the end of the human race," Hawking told the BBC in December 2014. The statement was in response to a question about a new AI voice-synthesizing system that Hawking has been using.

Hawking's warnings echo those of billionaire entrepreneur Elon Musk, CEO of SpaceX and Tesla Motors, who has called AI humanity's "biggest existential threat." Last month, Hawking, Musk and dozens of other scientific bigwigs signed an open letter describing the risks, as well as the benefits, of AI.

"Because of the great potential of AI, it is important to research how to reap its benefits while avoiding potential pitfalls," the scientists wrote in the letter, which was published online Jan. 11 by the Future of Life Institute, a volunteer organization that aims to mitigate existential threats to humanity.

But many AI researchers say humanity is nowhere near being able to develop strong AI.

"We are decades away from any technology we need to worry about," Demis Hassabis, an artificial intelligence researcher at Google DeepMind, told reporters this week at a news conference about a new AI program he developed that can teach itself to play computer games. Still, "It's good to start the conversation now," he added.

Human aggression

If our machines don't kill us, we might kill ourselves. Hawking now believes that human aggression might destroy civilization.

The physicist was giving a tour of the London Science Museum to Adaeze Uyanwah, a 24-year-old teacher from California who won a contest from VisitLondon.com. When Uyanwah asked, "What human shortcomings would you most like to alter?" Hawking responded:

"The human failing I would most like to correct is aggression. It may have had survival advantage in caveman days, to get more food, territory or partner with whom to reproduce, but now it threatens to destroy us all," The Independent reported.

For example, a major nuclear war would likely end civilization and could wipe out the human race, Hawking added. When asked which human quality he would most like to magnify, Hawking chose empathy, because "it brings us together in a peaceful, loving state."

Hawking thinks space exploration will be important to ensuring the survival of humanity. "I believe that the long-term future of the human race must be space, and that it represents an important life insurance for our future survival, as it could prevent the disappearance of humanity by colonizing other planets," Cambridge News reported.

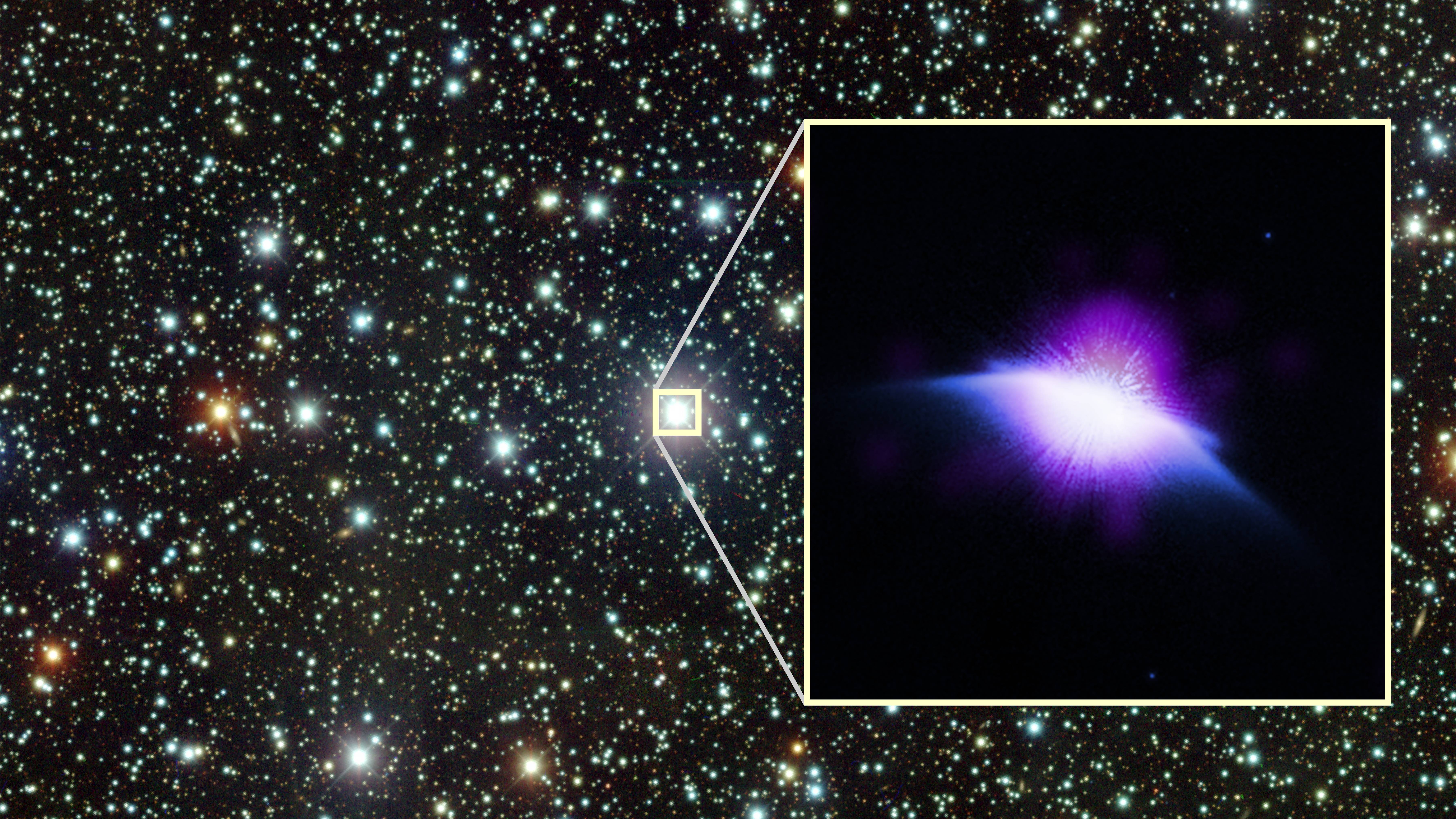

Alien life

But Hawking had made ominous warnings even before these recent ones. Back in 2010, Hawking said that, if intelligent alien life exists, it may not be that friendly toward humans.

"If aliens ever visit us, I think the outcome would be much as when Christopher Columbus first landed in America, which didn't turn out very well for the Native Americans," Hawking said during an episode of "Into the Universe with Stephen Hawking," a show that aired on the Discovery Channel, The Times, a U.K.-based newspaper, reported.

Advanced alien civilizations might become nomads, looking to conquer and colonize whatever planets they could reach, Hawking said. "If so, it makes sense for them to exploit each new planet for material to build more spaceships so they could move on. Who knows what the limits would be?"

From the threat of nefarious AI, to advanced aliens, to hostile humans, Hawking's outlook for humanity is looking pretty grim.

Follow Tanya Lewis on Twitter. Follow us @livescience, Facebook & Google+. Original article on Live Science.