Machine That Feels May Usher in 'Jedi' Prosthetics

A new method of feeling without touching may allow people with paralyzed or lost limbs to interact with the world using sophisticated prosthetic devices that send sensations directly to the brain.

The method, tested only in monkeys so far, is "a major milestone" for neural prosthetics, according to study researcher Miguel Nicolelis, a physician and neurobiologist at Duke University Medical Center. Neural prosthetics are robotic limbs or exoskeleton-like devices controlled only by nerve signals. Nicolelis and other researchers plan to test these devices in humans within the next one to three years.

"I like to say that we actually liberated the brain from the physical limits of the monkey's body," Nicolelis told LiveScience. "He can move and feel using the brain only."

Movement and sensation

Researchers worldwide are hard at work developing devices that would work a bit like Luke Skywalker's prosthetic hand in the 1980 film "The Empire Strikes Back." After losing his hand in a light-saber duel, the fictional Jedi gets a new limb with all the functionality of his original hand.

"He gets his arm chopped off, and an hour later, they put a prosthetic limb on him and start poking the arm, and he experiences those pokes as if it were a real limb," said Sliman Bensmaia, a sensory researcher at the University of Chicago who was not involved in Nicolelis' study.

The closest thing to Skywalker's hand today is the Defense Advanced Research Projects Agency's (DARPA) brain-controlled robotic arm, which is scheduled for human testing in about a year. The arm can bend and twist much like a natural limb and is controlled by electrodes implanted in the brain. The electrodes pick up electrical activity from brain cells, and that activity is translated into commands for the arm and transmitted wirelessly.


But the trick to getting devices like the DARPA arm to work, Bensmaia said, is getting the artificial limb to talk back to the brain. An arm, for example, can move in so many directions and assume so many postures that it's simply not possible to control its movements efficiently by sight alone. You need to be able to feel what the arm is doing. But while scientists have made great strides in hooking brain signals up to robotics to produce movement, the sensory side has lagged behind.

"For every one of us working on it, there are 10 people working on the motor side," Bensmaia said.

A big challenge, Nicolelis said, is that these devices must use electrodes in the brain both to read motor signals and to stimulate neurons. Electricity is a rather blunt way to stimulate the brain compared with the intricacy of our sensory receptors, and sending electrical sensory signals to the brain while trying to extract electrical motor signals can scramble both, leaving a big mess.

Nicolelis and his colleagues get around this problem by interweaving the sensory and motor signals. In a red-light, green-light pattern, the new brain-machine interface reads brain commands, and then switches over to sending tactile signals back to the brain for milliseconds at a time.

The technique "allows us to deliver these signals during a window of time in which we don't lose much or almost anything in terms of recording the motor signals the brain is generating," Nicolelis said. He and his colleagues reported their method online Wednesday (Oct. 5) in the journal Nature.
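The read-then-stimulate cycle Nicolelis describes works like time-division multiplexing: the interface alternates between a window for listening to the motor cortex and a window for sending tactile signals. The Python sketch below illustrates that idea under stated assumptions. The window lengths used in the usage example are hypothetical values chosen for illustration, not the timing reported in the Nature paper, and the names `build_schedule`, `mode_at`, `usable_samples` and `recording_fraction` are invented here. It also assumes, for simplicity, that any neural sample captured during a stimulation window is corrupted and must be discarded.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Window:
    start_ms: int
    end_ms: int
    mode: str  # "record" (read motor signals) or "stimulate" (send tactile signals)


def build_schedule(total_ms: int, record_ms: int, stim_ms: int) -> list[Window]:
    """Alternate recording and stimulation windows until total_ms is covered.

    record_ms and stim_ms are hypothetical, illustrative durations.
    """
    if record_ms <= 0 or stim_ms <= 0:
        raise ValueError("window lengths must be positive")
    schedule: list[Window] = []
    t = 0
    while t < total_ms:
        end = min(t + record_ms, total_ms)
        schedule.append(Window(t, end, "record"))
        t = end
        if t >= total_ms:
            break
        end = min(t + stim_ms, total_ms)
        schedule.append(Window(t, end, "stimulate"))
        t = end
    return schedule


def mode_at(schedule: list[Window], t_ms: int) -> str:
    """Return which mode the interface is in at time t_ms."""
    for w in schedule:
        if w.start_ms <= t_ms < w.end_ms:
            return w.mode
    raise ValueError("time outside schedule")


def usable_samples(schedule: list[Window], samples: list[tuple[int, float]]) -> list[tuple[int, float]]:
    """Keep only (time_ms, value) samples taken outside stimulation windows.

    Assumption: samples recorded while stimulating are treated as artifacts.
    """
    return [(t, v) for t, v in samples if mode_at(schedule, t) == "record"]


def recording_fraction(schedule: list[Window]) -> float:
    """Share of total time spent listening to the motor cortex."""
    total = sum(w.end_ms - w.start_ms for w in schedule)
    rec = sum(w.end_ms - w.start_ms for w in schedule if w.mode == "record")
    return rec / total


if __name__ == "__main__":
    # Hypothetical 50 ms / 50 ms cycle over one second.
    sched = build_schedule(1000, record_ms=50, stim_ms=50)
    print(recording_fraction(sched))  # 0.5
    print(mode_at(sched, 75))         # stimulate
```

With equal windows, half of each second goes to listening; shortening the stimulation window raises that share. That is the trade-off the interleaving has to balance: deliver enough feedback while losing as little of the motor signal as possible.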

Monkeying around

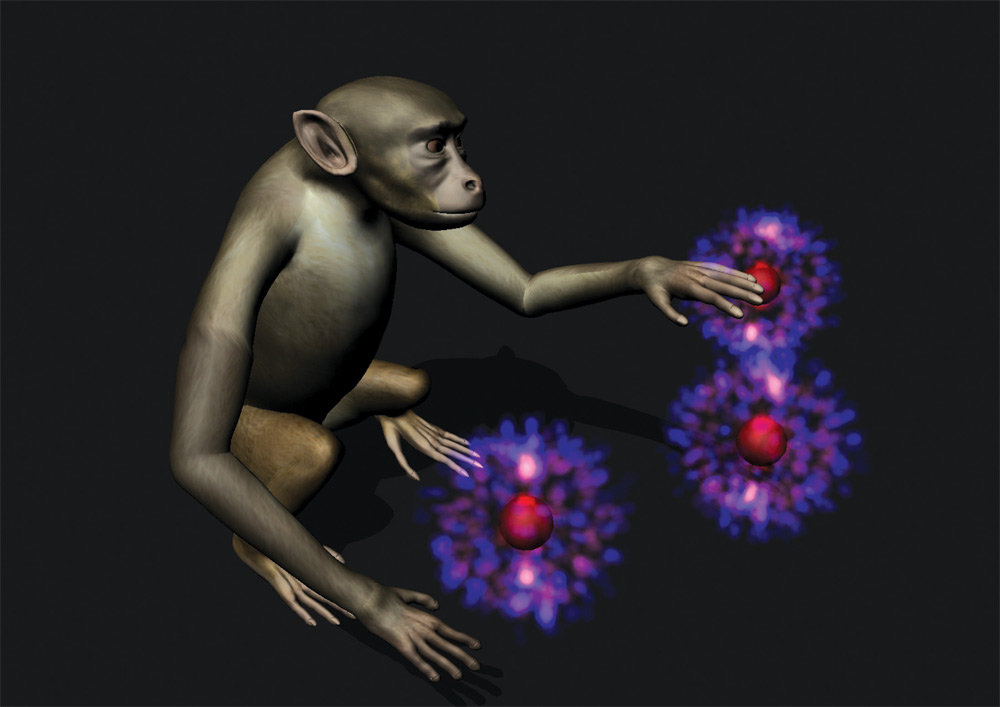

To test the method, Nicolelis and his colleagues implanted two rhesus monkeys with brain electrodes. One batch of electrodes went into each monkey's motor cortex, the part of the brain that controls movement. Another batch went into the sensory areas of the monkeys' brains.

The researchers then trained the monkeys to look at three identical-looking objects on a computer screen. The only difference among the three was that one object had a "virtual texture." The motor electrodes allowed the monkeys to move a virtual arm over the objects using only brain signals. If a monkey "touched" the textured object with the virtual arm, it received a signal in the sensory part of its brain.

The animals had to choose the correct textured object with the virtual arm; if they succeeded, they were rewarded with a squirt of fruit juice.

The monkeys were aces at the test, Nicolelis said, providing what he called "proof of principle" that electrodes can indeed send information to the sensory brain regions in near-real time. One monkey learned how to find the textured object within four trials, while the other took nine. As the trials went on, the monkeys got better and better, eventually getting almost as good at the brain-only task as they would have if they'd been using their real hands and arms.

"It was pretty quick," Nicolelis said. "Since we cannot talk to the monkeys, I assume with human patients, it's going to be much easier."

Intuitive feeling

Adding sensory feedback to motor action is a "key innovation," Bensmaia said. But more needs to be done to make sure the sensory signals actually make sense. In the monkey study, the signals were delivered to the brain region representing one monkey's hand and the other monkey's leg, but there is no way to know how the animals experienced the sensation. To move a complex limb, Bensmaia said, the signals have to be as close as possible to what the original limb would have produced.

"There's this barrage of signals coming from the arm that can actually serve to confuse rather than assist in the control of the arm unless these signals are intuitive in some way," Bensmaia said. "That's the next major challenge."

Another challenge, Nicolelis said, is to record the activity of more neurons at once. The more neuron signals, the more control, he said. He and his colleagues are part of the international Walk Again Project, which aims to develop a full "exoskeleton" for paralyzed patients. The idea is that the brain-controlled exoskeleton would replace a person's lost muscle control, allowing them to sit, stand and walk.

The goal, Nicolelis said, is to have the exoskeleton ready in three years, in time for the 2014 World Cup in his home country of Brazil.

"We think we can do this in the next three years or so," Nicolelis said. "We are hoping that a teenager who was quadriplegic until then will be able to walk into the opening game and kick the opening ball of the World Cup."


Stephanie Pappas is a contributing writer for Live Science, covering topics ranging from geoscience to archaeology to the human brain and behavior. She was previously a senior writer for Live Science but is now a freelancer based in Denver, Colorado, and regularly contributes to Scientific American and The Monitor, the monthly magazine of the American Psychological Association. Stephanie received a bachelor's degree in psychology from the University of South Carolina and a graduate certificate in science communication from the University of California, Santa Cruz.