MIT's new AI can teach itself to control robots by watching the world through their eyes — it only needs a single camera

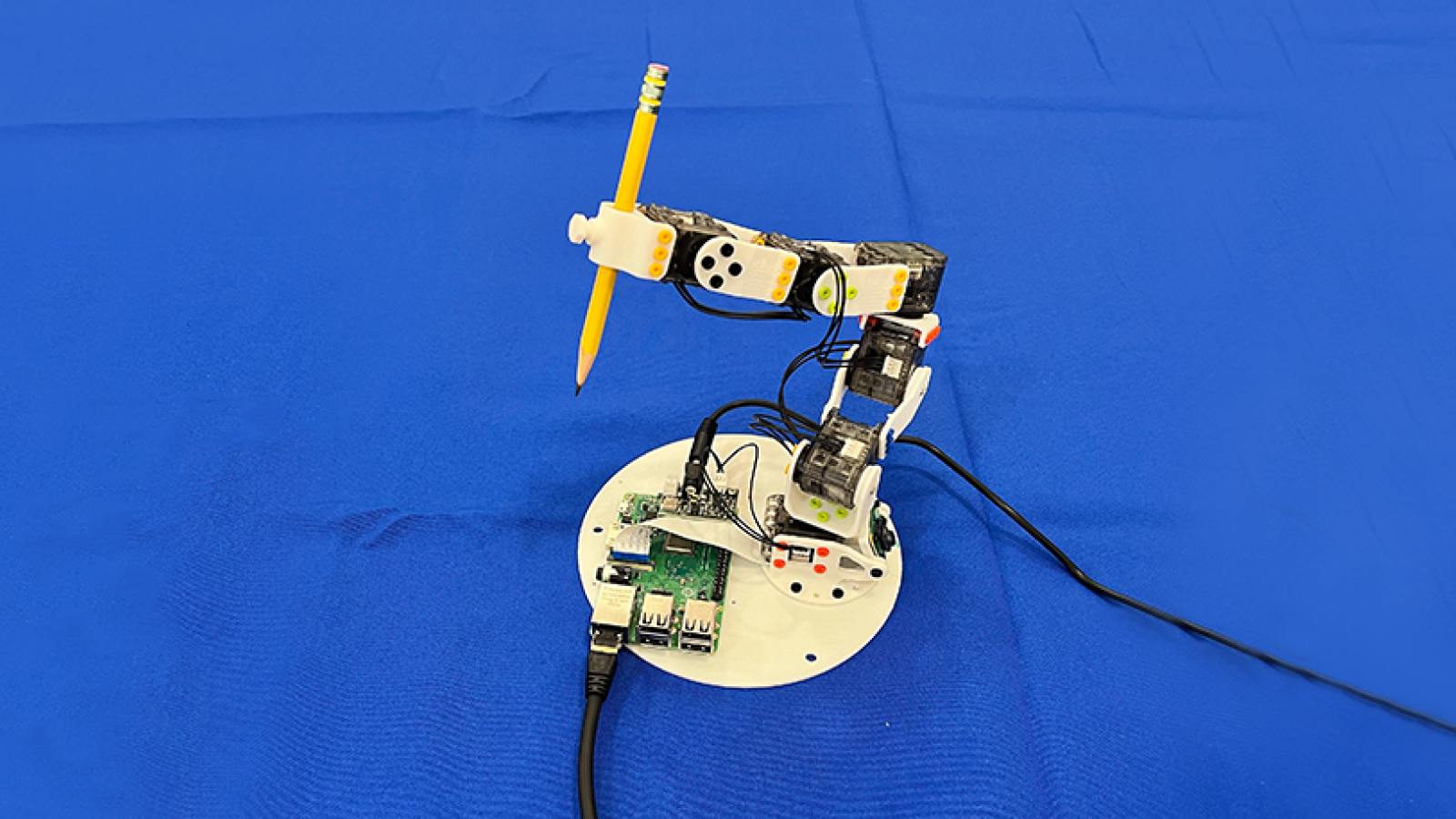

Rather than sensors or onboard control tweaks, the new training method relies on visual data from a single camera that watches the robot's movements.

Scientists at MIT have developed a novel vision-based artificial intelligence (AI) system that can teach itself how to control virtually any robot without onboard sensors or a pre-built model of the robot.

The system gathers data about a given robot’s architecture using cameras, in much the same way that humans use their eyes to learn about themselves as they move.

This allows the AI controller to develop a self-learning model for operating any robot — essentially giving machines a humanlike sense of physical self-awareness.

The researchers achieved this by creating a new control paradigm that uses video of a robot to infer its "visuomotor Jacobian field," a representation that links each visible 3D point on the machine to the robot’s actuators by predicting how that point will move in response to a given command.
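In simplified form (the notation here is illustrative, not necessarily the paper's), the field assigns every 3D point x on the robot a 3 × m matrix J(x), where m is the number of actuator commands. Multiplying that matrix by a small change in the command vector u predicts how the point moves. In the MIT system, the field is inferred from camera images by a deep network.

```latex
\Delta\mathbf{x} \;\approx\; \mathbf{J}(\mathbf{x})\,\Delta\mathbf{u},
\qquad \mathbf{J}(\mathbf{x}) \in \mathbb{R}^{3 \times m},
\qquad \Delta\mathbf{u} \in \mathbb{R}^{m}
```

Because this relation is linear in Δu for small motions, it can be read in both directions: forward, to predict where points go under a command, or backward, to solve for the command that moves points toward a goal.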

The AI model can then predict precise motor movements. This makes it possible to turn non-traditional robot architectures, such as soft robots and machines built from flexible materials, into autonomous units with only a few hours of training.

“Think about how you learn to control your fingers: you wiggle, you observe, you adapt,” explained Sizhe Lester Li, a PhD student at MIT CSAIL and lead researcher on the project, in a press release. “That’s what our system does. It experiments with random actions and figures out which controls move which parts of the robot.”

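The "wiggle, observe, adapt" loop Li describes can be illustrated with ordinary least squares. The sketch below is a simplified stand-in, not the team's method: it assumes a single tracked 3D point whose motion is linear in a two-channel command, uses hypothetical numbers, issues random commands, records the point's displacement, and fits the 3 × 2 matrix that best explains the trials. The function names are illustrative; the MIT system instead trains a deep network on multi-view video to predict such matrices for every visible point.

```python
import random


def solve(a, b):
    """Solve the square system a x = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(a)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]  # augmented copy [a | b]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(n):
            if r != col:
                f = m[r][col] / m[col][col]
                m[r] = [x - f * y for x, y in zip(m[r], m[col])]
    return [m[i][n] / m[i][i] for i in range(n)]


def predict_displacement(jac, u):
    """Predicted 3D displacement J u of the tracked point for command u."""
    return [sum(row[i] * u[i] for i in range(len(u))) for row in jac]


def estimate_jacobian(commands, displacements):
    """Fit a 3 x k matrix J minimizing the sum of ||d - J u||^2 over all (u, d) trials."""
    k = len(commands[0])
    # Normal-equation matrix sum(u u^T), shared by all three rows of J.
    gram = [[sum(u[i] * u[j] for u in commands) for j in range(k)] for i in range(k)]
    jac = []
    for axis in range(3):  # the x, y, z rows of J are independent least-squares problems
        rhs = [sum(u[i] * d[axis] for u, d in zip(commands, displacements)) for i in range(k)]
        jac.append(solve(gram, rhs))
    return jac


if __name__ == "__main__":
    random.seed(0)
    # Hypothetical ground truth: how one point moves per unit of each of two commands.
    true_jac = [[0.5, -0.2], [0.0, 1.0], [0.3, 0.3]]
    # "Wiggle": random commands. "Observe": noisy displacement of the tracked point.
    commands = [[random.uniform(-1, 1) for _ in range(2)] for _ in range(50)]
    displacements = [
        [v + random.gauss(0, 0.01) for v in predict_displacement(true_jac, u)]
        for u in commands
    ]
    # "Adapt": recover the command-to-motion mapping from the trials alone.
    for row in estimate_jacobian(commands, displacements):
        print([round(v, 3) for v in row])
```

Least squares fits here because each random trial contributes a few noisy equations; pooling many trials averages out the observation noise.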
Conventional robotic systems rely on precision engineering: machines are built to exact specifications so they can be controlled by pre-trained systems. These can require expensive sensors and AI models fine-tuned over hundreds or thousands of hours to anticipate every possible permutation of movement. Gripping objects with handlike appendages, for example, remains a difficult challenge in both mechanical engineering and AI control.

Understanding the world around you

In contrast, the camera-based "Jacobian field" approach offers a low-cost, high-fidelity way to automate robot systems.

The team published its findings June 25 in the journal Nature. In the paper, the researchers said the work was designed to imitate how the human brain learns to control machines.

The researchers argue that our ability to learn and reconstruct 3D configurations, and to predict motion as a function of control, comes from vision alone. According to the paper, “people can learn to pick and place objects within minutes” when controlling robots with a video game controller, and “the only sensors we require are our eyes.”

The system’s framework was developed using two to three hours of multi-view video, captured by 12 consumer-grade RGB-D cameras, of a robot executing randomly generated commands.

This framework is made up of two key components. The first is a deep-learning model that essentially allows the robot to determine where it and its appendages are in 3D space, and to predict how that position will change as specific movement commands are executed. The second is a machine-learning program that translates generic movement commands into code a robot can understand and execute.
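Once a model predicts how points move under a command, turning a desired motion into an actual command can be framed as an inverse problem. The sketch below shows one standard way to do that, damped least squares, under stated assumptions: the per-point 3 × k matrices are simply given (in the real system they would come from the learned model), and the points, targets, numbers, and function names are hypothetical. It is not presented as the paper's controller.

```python
def solve(a, b):
    """Solve the square system a x = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(a)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]  # augmented copy [a | b]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(n):
            if r != col:
                f = m[r][col] / m[col][col]
                m[r] = [x - f * y for x, y in zip(m[r], m[col])]
    return [m[i][n] / m[i][i] for i in range(n)]


def command_for_targets(jacobians, points, targets, damping=1e-6):
    """Return the command change du minimizing
    sum_i ||(target_i - point_i) - J_i du||^2 + damping * ||du||^2.
    """
    k = len(jacobians[0][0])
    # Start from damping * I; the damping keeps the system solvable if J is rank-deficient.
    lhs = [[damping if i == j else 0.0 for j in range(k)] for i in range(k)]
    rhs = [0.0] * k
    for jac, p, t in zip(jacobians, points, targets):
        err = [ti - pi for ti, pi in zip(t, p)]  # desired motion of this point
        for i in range(k):
            for j in range(k):
                lhs[i][j] += sum(jac[r][i] * jac[r][j] for r in range(3))  # accumulate J^T J
            rhs[i] += sum(jac[r][i] * err[r] for r in range(3))  # accumulate J^T err
    return solve(lhs, rhs)


if __name__ == "__main__":
    # Hypothetical per-point matrices for two tracked points on a 2-channel robot.
    jacobians = [
        [[0.5, -0.2], [0.0, 1.0], [0.3, 0.3]],
        [[1.0, 0.0], [0.2, 0.4], [0.0, -0.5]],
    ]
    points = [[0.0, 0.0, 0.0], [1.0, 1.0, 1.0]]
    # Build reachable targets from a known command so the answer can be checked.
    true_du = [0.4, -0.1]
    targets = [
        [p_r + sum(row[i] * true_du[i] for i in range(2)) for p_r, row in zip(p, jac)]
        for jac, p in zip(jacobians, points)
    ]
    print(command_for_targets(jacobians, points, targets))  # close to [0.4, -0.1]
```

In a closed-loop setting, a controller like this would typically be re-run as each new camera frame updates the point positions, so errors in the linear prediction get corrected over time.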

The team tested the new training and control paradigm by benchmarking it against traditional camera-based control methods. The Jacobian field approach was more accurate than those existing 2D control systems, especially when the team introduced visual occlusion, which caused the older methods to fail. Machines using the team’s method, by contrast, still created navigable 3D maps when scenes were partially occluded by random clutter.

After developing the framework, the scientists applied it to robots with widely varying architectures. The end result was a control program that needs no further human intervention to train and operate robots using only a single video camera.

Tristan is a U.S.-based science and technology journalist. He covers artificial intelligence (AI), theoretical physics, and cutting-edge technology stories.

His work has been published in numerous outlets including Mother Jones, The Stack, The Next Web, and Undark Magazine.

Prior to journalism, Tristan served in the U.S. Navy for 10 years as a programmer and engineer. When he isn’t writing, he enjoys gaming with his wife and studying military history.
