The human brain may be able to hold as much information in its memory as is contained on the entire Internet, new research suggests.

Researchers discovered that, unlike a classical computer that codes information as 0s and 1s, a brain cell uses 26 different ways to code its "bits." They calculated that the brain could store 1 petabyte (or a quadrillion bytes) of information.

"This is a real bombshell in the field of neuroscience," Terry Sejnowski, a biologist at the Salk Institute in La Jolla, California, said in a statement. "Our new measurements of the brain’s memory capacity increase conservative estimates by a factor of 10."

Amazing computer

What's more, the human brain can store this mind-boggling amount of information while sipping just enough power to run a dim light bulb.

By contrast, a computer with the same memory and processing power would require 1 gigawatt of power, or "basically a whole nuclear power station to run one computer that does what our 'computer' does with 20 watts," said study co-author Tom Bartol, a neuroscientist at the Salk Institute.

For the new study, the team wanted to take a closer look at the hippocampus, a brain region that plays a key role in learning and short-term memory.

To untangle the mysteries of the mind, the research team took a teensy slice of a rat's hippocampus, placed it in embalming fluid, then sliced it thinly with an extremely sharp diamond knife, a process akin to "slicing an orange," Bartol said. (Though a rat's brain is not identical to a human brain, the basic anatomical features and function of synapses are very similar across all mammals.) The team then embedded the thin tissue into plastic, looked at it under a microscope and created digital images.

Next, researchers spent one year tracing, with pen and paper, every type of cell they saw. After all that effort, the team had traced all the cells in the sample, a staggeringly tiny volume of tissue.

"You could fit 20 of these samples across the width of a single human hair," Bartol told Live Science.

Size distribution

Next, the team counted up all the complete neurons, or brain cells, in the tissue, which totaled 450. Of that number, 287 had the complete structures the researchers were interested in.

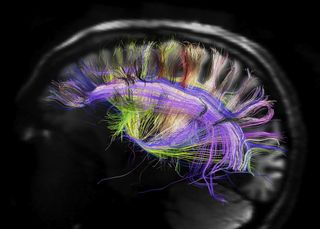

Neurons look a bit like swollen, misshapen balloons, with long tendrils called axons and dendrites snaking out from the cell body. Axons act as the brain cell's output wire, sending out a flurry of molecules called neurotransmitters, while tiny spines on dendrites receive the chemical messages sent by the axon across a narrow gap, called the synapse. (The specific spot on the dendrite at which these chemical messages are transmitted across the synapse is called the dendritic spine.) The receiving brain cell can then fire out its own cache of neurotransmitters to relay that message to other neurons, though most often, it does nothing in response.

Past work had shown that the biggest synapses dwarf the smallest ones by a factor of 60. That size difference reflects the strength of the underlying connection — while the average neuron relays incoming signals about 20 percent of the time, that percentage can increase over time. The more a brain circuit gets a workout (that is, the more one network of neurons is activated), the higher the odds are that one neuron in that circuit will fire when another sends it a signal. The process of strengthening these neural networks seems to enlarge the physical point of contact at the synapses, increasing the amount of neurotransmitters they can release, Bartol said.

If neurons are essentially chattering to each other across a synapse, then a brain cell communicating across a bigger synapse has a louder voice than one communicating across a smaller synapse, Bartol said.

But scientists haven't understood much about how many sizes of synapses there are and how they change in response to signals.

Then Bartol, Sejnowski and their colleagues noticed something funny in their hippocampal slice. About 10 percent of the time, a single axon snaked out and connected to the same dendrite at two different dendritic spines. These oddball axons were sending exactly the same input to each of the spots on the dendrite, yet the sizes of the synapses, where axons "talk" to dendrites, varied by an average of 8 percent. In other words, two synapses that received identical messages still differed in size by about 8 percent, which gave the team a measure of the natural variation in synapse size.

So the team then asked: If synapses can differ in size by a factor of 60, and the size of a synapse varies by about 8 percent due to pure chance, how many different types of synaptic sizes could fit within that size range and be detected as different by the brain?

By combining that data with signal-detection theory, which dictates how different two signals must be before the brain can detect a difference between them, the researchers found that synapses could come in 26 different size ranges. This, in essence, revealed how many different volumes of "voices" neurons use to chatter with each other. Previously, researchers thought that synapses came in just a few sizes.

From there, they could calculate how much information could be transmitted between any two neurons. Computers store data as bits, which can have two potential values: 0 or 1. But that binary message from a neuron (to fire or not) can produce 26 different sizes of synapses. So they used basic information theory to calculate just how many bits of data each synapse can hold.

"To convert the number 26 into units of bits we simply say 2 raised to the n power equals 26 and solve for n. In this case n equals 4.7 bits," Bartol said.

That storage capacity translates to about 10 times what was previously believed, the researchers reported online in the journal eLife.
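The conversion Bartol describes, solving 2 to the n power equals 26 for n, is a base-2 logarithm. As a minimal sketch (the function name `bits_for_states` is ours, chosen for illustration, and is not taken from the study), the arithmetic looks like this:

```python
import math


def bits_for_states(num_states: int) -> float:
    """Return n such that 2**n == num_states, i.e. the number of bits
    needed to label one of num_states equally likely states."""
    return math.log2(num_states)


# 26 distinguishable synapse sizes, as reported in the article.
bits_per_synapse = bits_for_states(26)
print(f"{bits_per_synapse:.2f} bits")  # 4.70 bits
```

For comparison, a switch with only two states gives `bits_for_states(2)`, which is exactly 1 bit.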

Incredibly efficient

The new findings also shed light on how the brain stores information while remaining fairly active. Most neurons don't fire in response to incoming signals, yet the body is highly precise in translating those signals into physical structures. That combination explains in part why the brain is more efficient than a computer: Most of its heavy lifters are not doing anything most of the time.

However, even if the average brain cell is inactive 80 percent of the time, that still doesn't explain why a computer requires 50 million times more energy to do the same tasks as a human brain.
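The 50-million figure follows from the two power numbers quoted earlier: 1 gigawatt for the hypothetical computer and 20 watts for the brain. The sketch below reproduces that ratio. It then makes one simplifying assumption of our own, not a claim from the study: that a cell idle 80 percent of the time saves at most a factor of five in energy. The variable names are illustrative.

```python
COMPUTER_POWER_WATTS = 1e9   # 1 gigawatt, as quoted by Bartol
BRAIN_POWER_WATTS = 20.0     # roughly a dim light bulb


def power_ratio(computer_watts: float, brain_watts: float) -> float:
    """How many times more power the computer draws than the brain."""
    return computer_watts / brain_watts


ratio = power_ratio(COMPUTER_POWER_WATTS, BRAIN_POWER_WATTS)
print(f"{ratio:,.0f}")  # 50,000,000

# Assumption for illustration only: being active 20 percent of the time
# could account for, at best, a 1 / 0.20 = 5x saving.
ACTIVE_FRACTION = 0.20
idle_saving = 1 / ACTIVE_FRACTION
unexplained_factor = ratio / idle_saving
print(f"{unexplained_factor:,.0f}")
```

Under that rough assumption, idleness covers only a sliver of the gap, which is why the researchers look to other explanations for the remainder.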

"The other part of the story might have to do with how biochemistry works compared to how electrons work in a computer. Computers are using electrons to do the calculations and electrons flowing in a wire make a lot of heat, and that heat is wasted energy," Bartol said. Biochemical pathways may simply be much more efficient, he added.

Follow Tia Ghose on Twitter and Google+. Follow Live Science @livescience, Facebook & Google+. Original article on Live Science.

Tia is the managing editor and was previously a senior writer for Live Science. Her work has appeared in Scientific American, Wired.com and other outlets. She holds a master's degree in bioengineering from the University of Washington, a graduate certificate in science writing from UC Santa Cruz and a bachelor's degree in mechanical engineering from the University of Texas at Austin. Tia was part of a team at the Milwaukee Journal Sentinel that published the Empty Cradles series on preterm births, which won multiple awards, including the 2012 Casey Medal for Meritorious Journalism.
