IBM will build monster 10,000-qubit quantum computer by 2029 after 'solving science' behind fault tolerance — the biggest bottleneck to scaling up

The quantum computer, called Starling, will use 200 logical qubits — and IBM plans to follow this up with a 2,000-logical-qubit machine in 2033

IBM scientists say they have solved the biggest bottleneck in quantum computing and plan to launch the world's first large-scale, fault-tolerant machine by 2029.

The research describes error-correction techniques that the scientists say will lead to a system 20,000 times more powerful than any quantum computer in existence today.

In two new studies uploaded June 2 and June 3 to the arXiv preprint server, the researchers revealed error-mitigation and error-correction techniques that, they say, handle qubit errors well enough to allow hardware to scale nine times more efficiently than previously possible.

The new system, called "Starling," will use 200 logical qubits — made up of roughly 10,000 physical qubits. This will be followed by a machine called "Blue Jay," which will use 2,000 logical qubits, in 2033.

The new research, which has not yet been peer-reviewed, describes IBM's quantum low-density parity-check (LDPC) codes, a novel fault-tolerance paradigm that researchers say will allow quantum computer hardware to scale beyond previous limitations.

"The science has been solved" for expanded fault-tolerant quantum computing, Jay Gambetta, IBM vice president of quantum operations, told Live Science. This means that scaling up quantum computers is now just an engineering challenge, rather than a scientific hurdle, Gambetta added.


While quantum computers exist today, they're only capable of outpacing classical computer systems (those using binary calculations) on bespoke problems that are designed only to test their potential.

One of the largest hurdles to quantum supremacy, or quantum advantage, has been in scaling up quantum processing units (QPUs).

As scientists add more qubits to processors, the errors in calculations performed by QPUs add up. This is because qubits are inherently "noisy," and errors occur more frequently than in classical bits. For this reason, research in the field has largely centered on quantum error correction (QEC).

The road to fault tolerance

Error correction is a foundational challenge for all computing systems. In classical computers, binary bits can accidentally flip from a one to a zero and vice versa. These errors can compound and render calculations incomplete or cause them to fail entirely.

The qubits used to conduct quantum calculations are far more susceptible to errors than their classical counterparts due to the added complexity of quantum mechanics. Unlike binary bits, qubits carry extra "phase information."

While this enables them to perform computations using quantum information, it also makes the task of error correction much more difficult.
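To make the idea of "phase information" concrete, here is a minimal sketch in Python with NumPy. It is a textbook single-qubit illustration, not anything taken from IBM's studies, and the variable names are chosen here for illustration. It shows a phase-flip error that leaves the odds of reading out 0 or 1 unchanged yet turns the qubit into a completely different state, which is the kind of error a classical bit cannot have.

```python
import numpy as np

# Pauli Z acts as a phase flip: it changes the sign of the |1> amplitude.
Z = np.array([[1, 0], [0, -1]], dtype=complex)

# The |+> state: an equal superposition of |0> and |1>.
plus = np.array([1, 1], dtype=complex) / np.sqrt(2)

# Apply a phase-flip error to the state.
after_phase_flip = Z @ plus

# Probabilities of reading 0 or 1 before and after the error.
probs_before = np.abs(plus) ** 2
probs_after = np.abs(after_phase_flip) ** 2

# Overlap between the original and the corrupted state (1 = same, 0 = orthogonal).
overlap = abs(np.vdot(plus, after_phase_flip)) ** 2

print("readout probabilities before:", probs_before)
print("readout probabilities after: ", probs_after)
print("overlap with original state: ", overlap)
```

The readout probabilities match, so simply measuring the qubit would not reveal the error, while the overlap shows the state has changed entirely. An error-correction scheme for qubits has to catch both this kind of error and ordinary bit flips.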

Until now, scientists were unsure exactly how to scale quantum computers from the few hundred qubits used by today's models to the hundreds of millions they theoretically need to make them generally useful.

But the development of LDPC codes and their successful application across existing systems is the catalyst for change, Gambetta said.

LDPC codes use a set of parity checks to detect and correct errors. The checks are sparse ("low-density"): each qubit is involved in only a few checks, and each check involves only a few qubits, compared with previous paradigms.
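The idea of sparse checks can be sketched with a small classical toy example in Python. This is not IBM's quantum code: the ring layout, the function names and the six-bit size are all assumptions made here purely for illustration. Each check compares two neighboring bits, so every check touches two bits and every bit sits in two checks.

```python
import numpy as np

def ring_parity_checks(n):
    """Build an n x n parity-check matrix; check i compares bits i and (i + 1) % n."""
    H = np.zeros((n, n), dtype=int)
    for i in range(n):
        H[i, i] = 1
        H[i, (i + 1) % n] = 1
    return H

def syndrome(H, word):
    """Return which checks fire (1) for the given word, using arithmetic mod 2."""
    return (H @ word) % 2

def locate_single_flip(H, s):
    """Find the bit whose column of H matches the syndrome, assuming one flip at most."""
    for bit in range(H.shape[1]):
        if np.array_equal(H[:, bit], s):
            return bit
    return None  # no single-bit flip explains this syndrome

H = ring_parity_checks(6)

# Start from the all-zeros codeword and flip bit 2 to simulate an error.
received = np.zeros(6, dtype=int)
received[2] ^= 1

s = syndrome(H, received)
flipped_bit = locate_single_flip(H, s)

print("bits per check:", H.sum(axis=1))
print("checks per bit:", H.sum(axis=0))
print("syndrome:", s)
print("located flip at bit:", flipped_bit)
```

Only the two checks that touch the flipped bit fire, and that pattern points back to the faulty bit. Quantum LDPC codes apply the same principle with checks on qubits and must handle phase errors as well as bit flips, which this toy does not attempt.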

The key advantage of this approach is a significantly improved "encoding rate," which is the ratio of logical qubits to the physical qubits needed to protect them. By using LDPC codes, IBM aims to dramatically reduce the number of physical qubits required to scale up systems.
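As a rough worked example, the ratio can be computed from the figures quoted for Starling (200 logical qubits built from roughly 10,000 physical qubits). The function name below is an assumption for illustration, and the result is a machine-level ratio based on the article's round numbers, not the formal rate of any specific code in IBM's papers.

```python
def encoding_rate(logical_qubits, physical_qubits):
    """Ratio of logical qubits to the physical qubits used to protect them."""
    return logical_qubits / physical_qubits

# Figures quoted for Starling; the physical-qubit count is approximate.
starling_logical = 200
starling_physical = 10_000

starling_rate = encoding_rate(starling_logical, starling_physical)
physical_per_logical = starling_physical / starling_logical

print(f"encoding rate: {starling_rate:.2%}")
print(f"physical qubits per logical qubit: {physical_per_logical:.0f}")
```

On these numbers, each logical qubit costs about 50 physical qubits. No physical-qubit count is given for Blue Jay, so the same calculation cannot be repeated for that machine.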

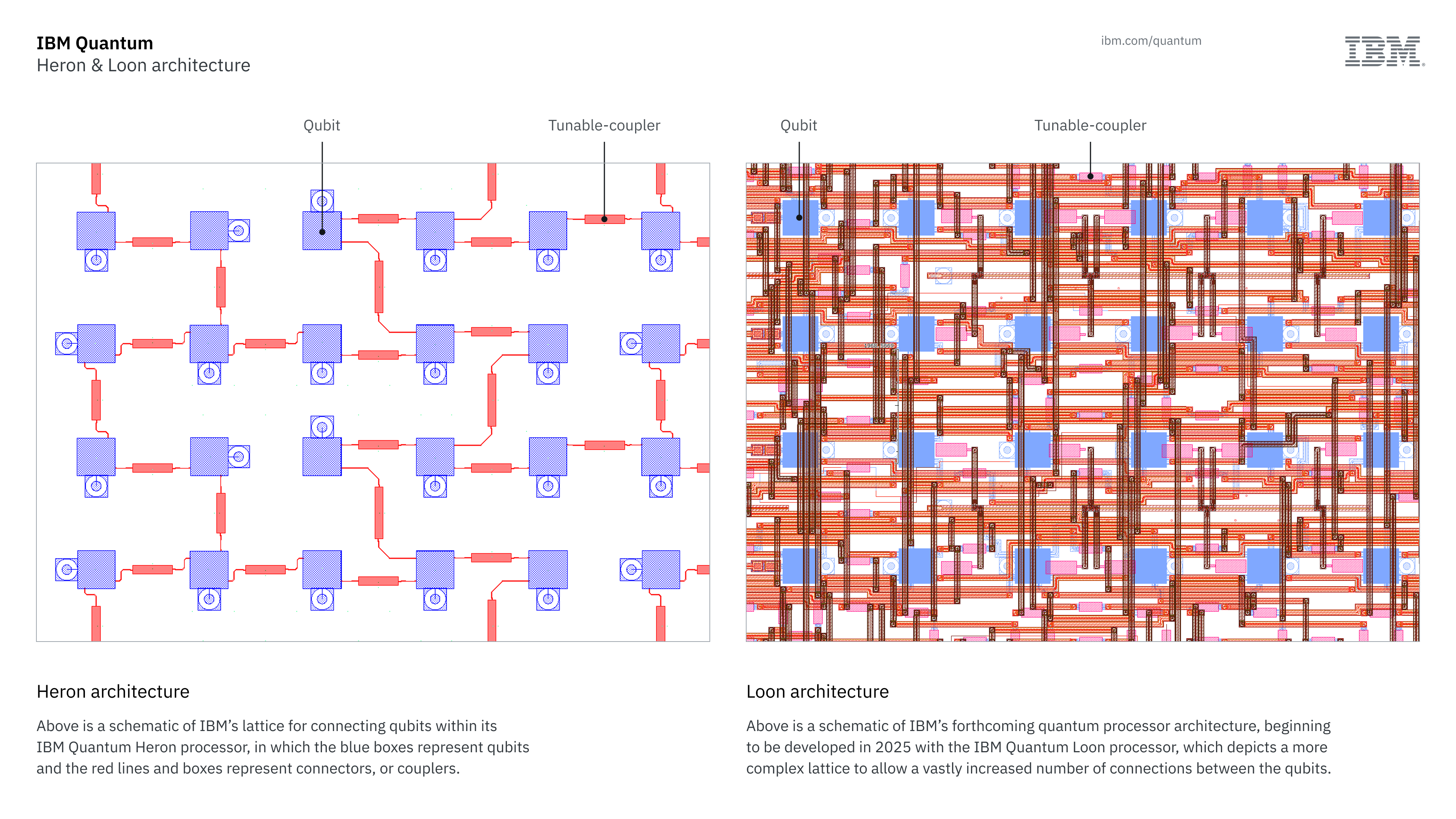

The new method is about 90% faster at conducting error mitigation than all previous techniques, according to IBM research. IBM will incorporate this technology into its Loon QPU architecture, which is the successor to the Heron architecture used by its current quantum computers.

Moving from error mitigation to error correction

Starling is expected to be capable of 100 million quantum operations using 200 logical qubits. IBM representatives said this was roughly equivalent to 10,000 physical qubits. Blue Jay will theoretically be capable of 1 billion quantum operations using its 2,000 logical qubits.

Current models can run about 5,000 gates (analogous to 5,000 quantum operations) using 156 physical qubits. The leap from 5,000 operations to 100 million will only be possible through technologies like LDPC, IBM representatives said in a statement. Other technologies, including those used by companies like Google, will not scale to the larger sizes needed to reach fault tolerance, they added.
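The size of that leap is simple arithmetic on the operation counts quoted above. The short sketch below uses variable names chosen here for illustration and only the article's round figures.

```python
# Operation counts quoted in the article (approximate, round figures).
current_operations = 5_000
starling_operations = 100_000_000
blue_jay_operations = 1_000_000_000

# How many times more operations each planned machine is expected to run.
starling_factor = starling_operations // current_operations
blue_jay_factor = blue_jay_operations // current_operations

print(f"Starling vs. current models: {starling_factor:,}x")
print(f"Blue Jay vs. current models: {blue_jay_factor:,}x")
```

The Starling ratio works out to 20,000, which appears consistent with the "20,000 times more powerful" figure quoted earlier in the article, although the article does not state how IBM derived that number.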

To take full advantage of Starling in 2029 and Blue Jay in 2033, IBM needs algorithms and programs built for quantum computers, Gambetta said. To help researchers prepare for future systems, IBM recently launched Qiskit 2.0, an open-source development kit for running quantum circuits using IBM's hardware.

"The goal is to move from error mitigation to error correction," Blake Johnson, IBM's quantum engine lead, told Live Science, adding that "quantum computing has grown from a field where researchers are exploring a playground of quantum hardware to a place where we have these utility-scale quantum computing tools available."

Tristan is a U.S.-based science and technology journalist. He covers artificial intelligence (AI), theoretical physics, and cutting-edge technology stories.

His work has been published in numerous outlets including Mother Jones, The Stack, The Next Web, and Undark Magazine.

Prior to journalism, Tristan served in the US Navy for 10 years as a programmer and engineer. When he isn’t writing, he enjoys gaming with his wife and studying military history.
