Beyond Terminator: Robots Deserve Ethical Treatment Too (Op-Ed)

This article was originally published at The Conversation. The publication contributed the article to LiveScience's Expert Voices: Op-Ed & Insights.

Dear robots: You have an image problem.

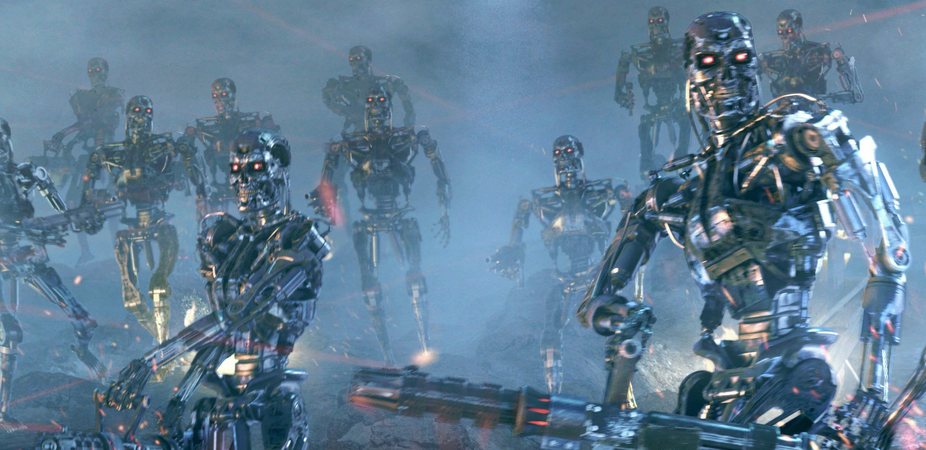

Typically cast as vicious killing machines, cutesy pets or house slaves, robots are usually depicted in Western media as either something to be feared or something to be used.

More and more of our lives involve the use of intelligent systems in both industrial and domestic environments. We have robot vacuums, robots making pancakes, robot cars, and drones.

So what is our relationship to robots and how do our attitudes toward them – whether that’s a pervading fear of the singularity or a fond affection – affect the sort of robots we create?

In my digital writing residency at The Cube, the Queensland University of Technology's (QUT) digital interactive learning and display space, I’ve imagined a scenario in which artificial systems are another species, like an animal or a human. We therefore have a responsibility to facilitate ethical and mutually beneficial relations between humans and robots. What is stopping us from doing that?

The digital installation – which involves 3D robots on large screens we can interact with – aims to reveal people's biases against robots and provoke conversation about what that means.


Robots have been a colourful feature of fiction since (arguably) 1818, when Mary Shelley's Frankenstein was first published, so the relationship between robots and fiction is a long-standing one.

I’m using fiction – in this case, an interactive installation – to change people's views of robots, or at least to reflect their own views back to them.

But human-robot interaction researchers have found other uses for fiction. In one recent study, researchers from the Ars Electronica Futurelab, University of Linz and Osaka University found that narrative persuasion – in other words, storytelling techniques – plays a significant role in the acceptance of new robotic agents.

They introduced a robot in three different ways: with a short story about the robot’s imagined past, with a non-narrative description of it, and with no description at all.

Participants who were introduced to the robot through a story showed significantly higher intent to adopt it, and rated it significantly more useful, than those given either of the two non-narrative introductions. The researchers therefore argued that narrative persuasion can aid robot acceptance.

Do robots need to be accepted? In a narrative-based study into children’s expectations of robots and learning called Robots@School, the LEGO Learning Institute, Latitude and Project Synthesis found that children don’t have negative views of robots.

They asked children to imagine robots were a fixture in their school or home, and to write a story about that and create a picture to go with it. The children imagined robots that did their homework with them, encouraged them to learn, and played sport with them.

The study found that kids tend to think of technology as fundamentally human, as opposed to many adults who think about technology as separate from humanness.

While kids’ attitudes towards robots are undeniably positive, they rest on the assumption that the robot will fulfil the child’s every desire. If the robot didn’t like football, for instance, or maths, then it wouldn’t fulfil its function as a tool or a caregiver.

QUT Robotics Lab researcher Dr Feras Dayoub has argued that robots should be feared. It is risky, he says, to trust robots. They are dangerous tools that can do harm.

Similarly, in her thesis The Quiet Professional: An Investigation of U.S. Military Explosive Ordnance Disposal Personnel Interactions With Everyday Field Robots, Julie Carpenter of the University of Washington investigated how soldiers interact with bomb-disposal robots.

She found the soldiers became attached to the robots, for instance holding funerals for them when they were destroyed. But while she found no evidence that this emotional attachment had interfered with their work, she argues that robots should be designed to discourage emotional attachment.

There is an underlying narrative I see emerging: affection for robots will corrupt how we design and interact with them. If you care for something or like something, then your work will suffer. You will be slow to see when harm is being done, you won’t put the robot in harm’s way even if that’s its job, or you won’t design it properly.

As a writer and designer, I think the opposite. I have a strong affection for my characters, but I still put them in harm's way in every story. I feel terrible about it, but I do it anyway. In my adult life more generally, too, the narrative is the complete opposite of how I operate. Affection for something or someone doesn’t destroy my sensibilities.

After a public debate I was involved in recently, I asked the room who would like to see robots become self-aware or independent. The majority did not, for three reasons: robots would then experience the pain that humans do, robots could inflict harm upon humans, and humans have enough trouble dealing with each other.

One argument made for robot awareness was that creating sentient technology would represent our evolution as humans: it is progress, and it is where we need to go.

All of these discussions starkly reveal how we as humans view ourselves. “A dystopian robot future in which metal destroys flesh” is the narrative of people who don't see much hope for humans, with or without robots. “A utopian robot future where robots live harmoniously alongside humans” is the narrative of people who hope to create a better kind of humanity.

Either way, these are narratives of what something outside of ourselves means. This "other" either represents the worst of us or will be better than us. It is never us as we are now, because that would be a terrible waste of creation.

Christy Dena has received grants from the Australia Council for the Arts and QUT The Cube for the "Robot University" installation.

The views expressed are those of the author and do not necessarily reflect the views of the publisher. This version of the article was originally published on LiveScience.