Human-like robot tricks people into thinking it has a mind of its own

It was actually being controlled by researchers.

An uncannily human-like robot that had been programmed to interact socially with human companions tricked people into thinking that the mindless machine was self-aware, according to a new study.

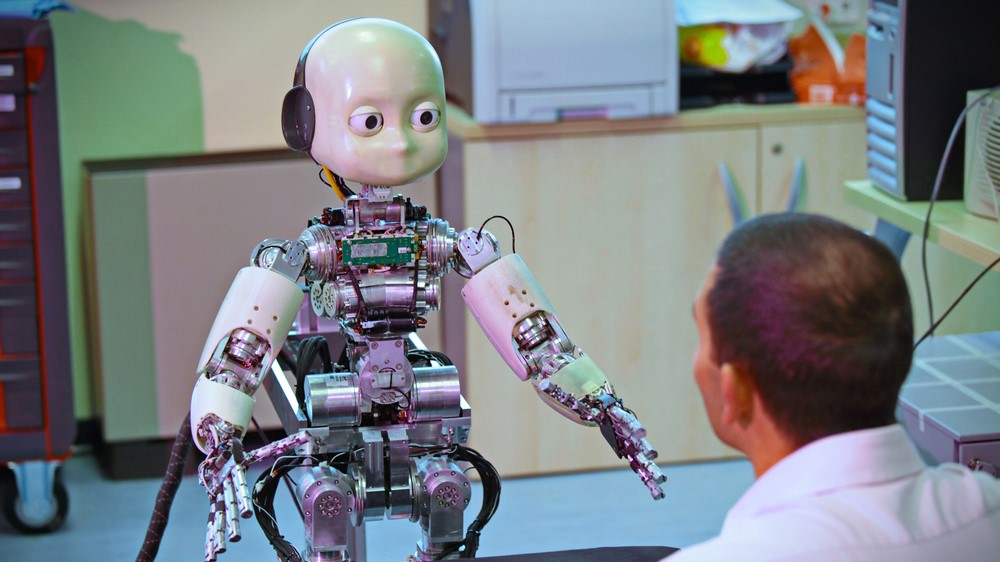

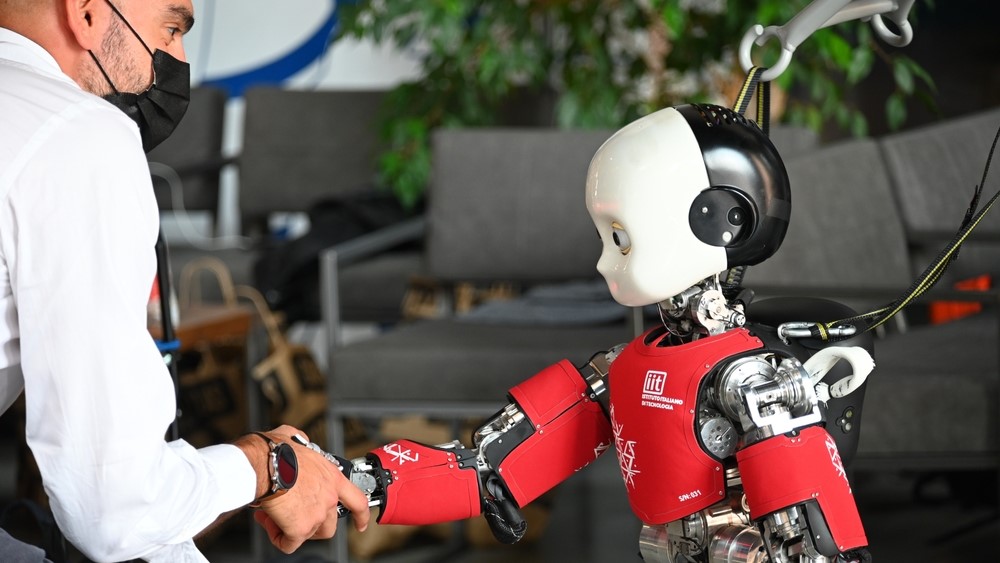

The digital deceiver, called iCub, is a child-size humanoid robot created by the Italian Institute of Technology (IIT) in Genoa to study social interactions between humans and robots. This advanced android stands 3.6 feet (1.1 meters) tall and has a human-like face, camera eyes that can maintain eye contact with people and 53 degrees of freedom that allow it to complete complex tasks and mimic human behaviors. Researchers can program iCub to act remarkably human-like, as demonstrated in its 2016 appearance on Italy's Got Talent, when the robot performed Tai Chi moves and wowed the judges with its clever conversational skills.

In the new study, researchers programmed iCub to interact with human participants as they watched a series of short videos. During some of the experiments, iCub was programmed to behave in a human-like manner: greeting participants as they entered the room, and reacting to videos with vocalizations of joy, surprise and awe. But in other trials, the robot's programming directed it to behave more like a machine, ignoring nearby humans and making stereotypically robotic beeping sounds.

The researchers found that people who were exposed to the more human-like version of iCub were more inclined to view it with a perspective known as "the intentional stance," meaning they believed that the robot had its own thoughts and desires, while those who were exposed to the less human version of the robot did not. The researchers had expected this result but were "very surprised" by how well it worked, lead study author Serena Marchesi, a researcher with the Social Cognition in Human-Robot Interaction unit at IIT, and study senior author Agnieszka Wykowska, the head of that unit, told Live Science in a joint email.

The iCub robot does have a limited capacity to "learn" like a neural network (a type of artificial intelligence, or AI, loosely modeled on the way neurons in the human brain process information), but it is far from being self-aware, the researchers said.

Altering behaviors

In each of the experiments, a single human participant sat in a room with iCub and watched three two-minute video clips of animals. The research team chose video-watching as the shared task because it is a common activity among friends and family, and they used footage that featured animals and "did not include a human or a robot character" to avoid any biases, the researchers said.

In the first set of experiments, iCub had been programmed to greet the human participants, introducing itself and asking for their names as they entered. During these interactions, iCub also moved its camera "eyes" to maintain eye contact with the human subjects. Throughout the video-watching activity, it continued to act in a human-like way, vocalizing responsively as people do. "It laughed when there was a funny scene in the movie or behaved as if it was in awe with a beautiful visual scene," the researchers said.

In the second set of experiments, iCub did not interact with participants, and while watching the videos its only reaction to the scenes was to make machine-like noises, including "beeping sounds like a car sensor would do when approaching an obstacle," the researchers said. During these experiments, the cameras in iCub's eyes were also disabled, so the robot could not maintain eye contact.

Intentional vs mechanistic

Before and after the experiments, the researchers had participants complete the InStance Test (IST). Designed by the research team in 2019, this survey gauges whether people attribute mental states to the robot.

Using the IST, the study authors assessed participants' reactions to 34 different scenarios. "Each scenario consists of a series of three pictures depicting the robot in daily activities," the researchers said. "Participants then choose between two sentences describing the scenario." One sentence used intentional language that implied a mental state (for example, "iCub wants"), and the other used mechanistic language that focused on actions ("iCub does"). In one scenario, participants were shown a series of pictures in which iCub selects one of several tools from a table, and then chose between statements saying that the robot "grasped the closest object" (mechanistic) or "was fascinated by tool use" (intentional).

The team found that participants who were exposed to iCub's human-like behaviors in the experiments were more likely to switch from a mechanistic stance to an intentional stance in their survey responses, hinting that iCub's human-like behavior had changed the way they perceived the robot. By comparison, participants who interacted with the more robotic version of iCub firmly maintained a mechanistic stance in the second survey. This suggests that people need to see relatable behavior from a robot before they perceive it as human-like, the researchers said.

Next steps

These findings suggest that humans can form social connections with robots, according to the study. This could have implications for the use of robots in healthcare, especially for elderly patients, the researchers said. However, there is still much to learn about human-robot interactions and social bonding, the scientists cautioned.

One of the big questions the team wants to answer is whether people can bond with robots that do not look human but still display human-like behaviors. "It is difficult to foresee how a robot with a less human-like appearance would elicit the same level of like-me experience," the researchers said. In the future, they hope to repeat the study's experiments with robots of different shapes and sizes, they added.

The researchers also argue that in order for humans to form lasting social bonds with robots, people must let go of preconceived notions about sentient machines that are popular fear-mongering fodder in science fiction.

"Humans have a tendency to be afraid of the unknown," the researchers said. "But robots are just machines and they are far less capable than their fictional depictions in popular culture." To help people overcome this bias, scientists can better educate the public on what robots can do — and what they can't. After that, "the machines will become immediately less scary," they said.

The study was published online July 7 in the journal Technology, Mind and Behavior.

Originally published on Live Science.

Harry is a U.K.-based senior staff writer at Live Science. He studied marine biology at the University of Exeter before training to become a journalist. He covers a wide range of topics including space exploration, planetary science, space weather, climate change, animal behavior and paleontology. His recent work on the solar maximum won "best space submission" at the 2024 Aerospace Media Awards and was shortlisted in the "top scoop" category at the NCTJ Awards for Excellence in 2023. He also writes Live Science's weekly Earth from space series.