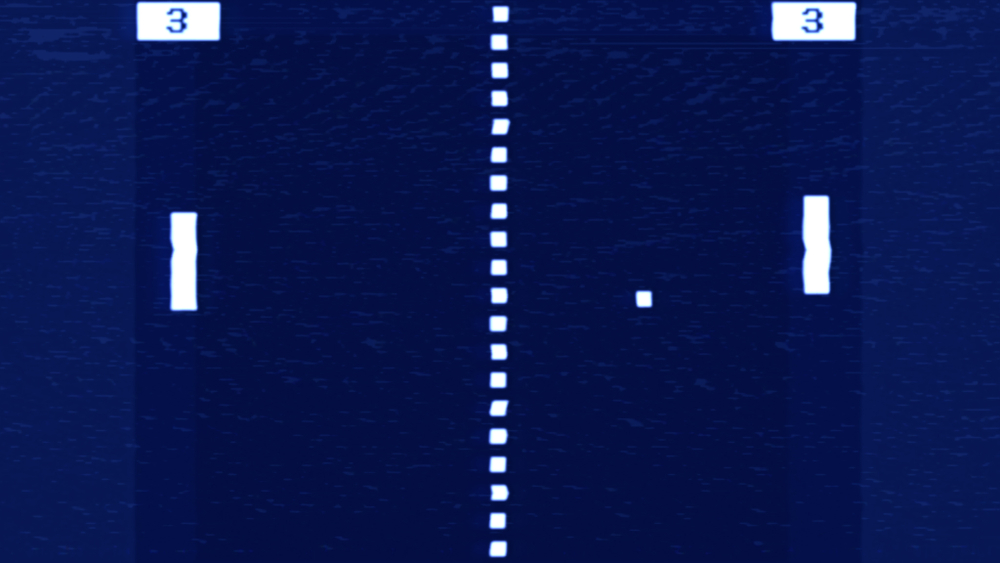

Minibrains grown from human and mouse neurons learn to play Pong

Researchers taught a synthetic neuron network to play a version of the retro arcade game "Pong" by integrating the brain cells into an electrode array controlled by a computer program.

A synthetic minibrain made out of human and mouse neurons has successfully learned to play the video game "Pong" after researchers hooked it up to a computer-controlled electrode array. It is the first time that brain cells isolated from an organism have completed a task like this, suggesting that such learning ability is not limited to fully intact brains locked inside animals' skulls.

In the new study, researchers grew a synthetic neuron network on top of rows of electrodes housed inside a tiny container, which they called DishBrain. A computer program sent electrical signals that activated specific regions of neurons. These signals told the neurons to "play" the retro video game "Pong," which involves hitting a moving dot, or "ball," with a small line, or "paddle," in 2D. The researchers' computer program then channeled performance data back to the neurons via electrical signals, which informed the cells of whether they had hit or missed the ball.

The researchers found that, within just five minutes, the neurons had already started altering the way they moved the paddle to increase how often they hit the ball. This is the first time that a man-made biological neural network has been taught to independently complete a goal-oriented task, the researchers wrote in a new paper published Oct. 12 in the journal Neuron.

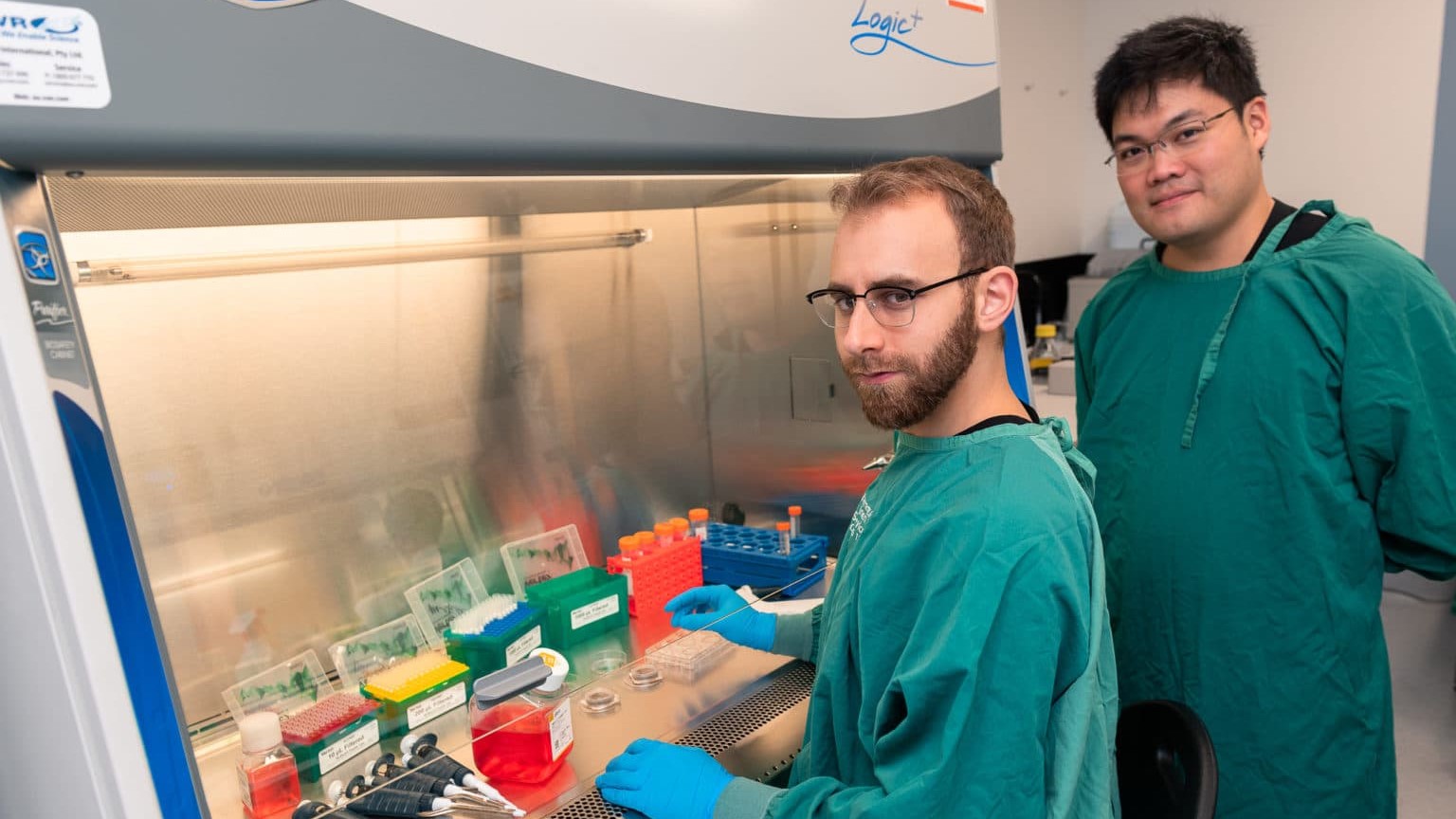

The new study is the first to "explicitly seek, create, test and leverage synthetic biological intelligence," study lead author Brett Kagan, chief scientific officer at Cortical Labs, a private company in Melbourne, Australia, told Live Science. The researchers hope their work could be the springboard for a whole new area of research.

Minibrains

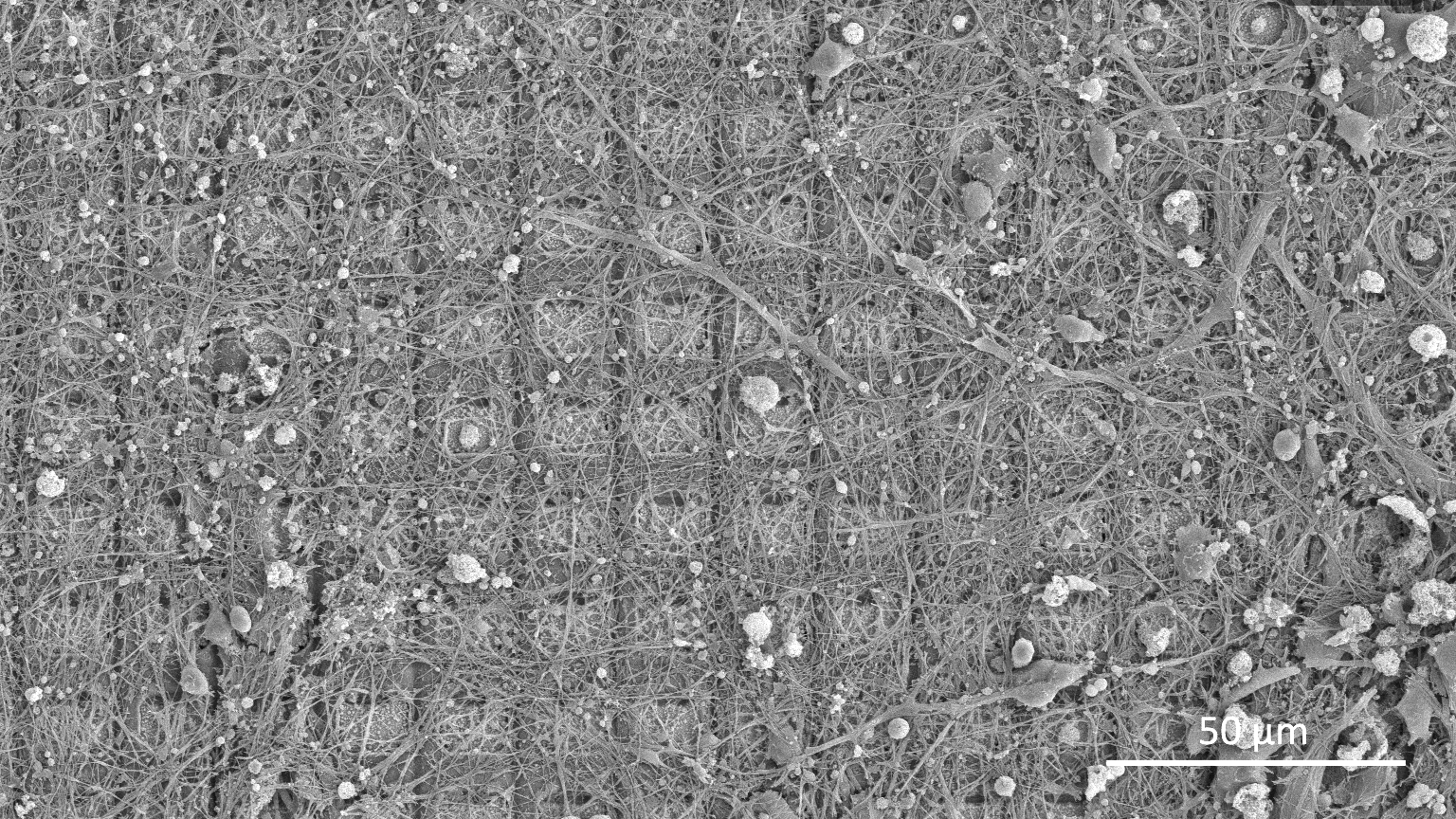

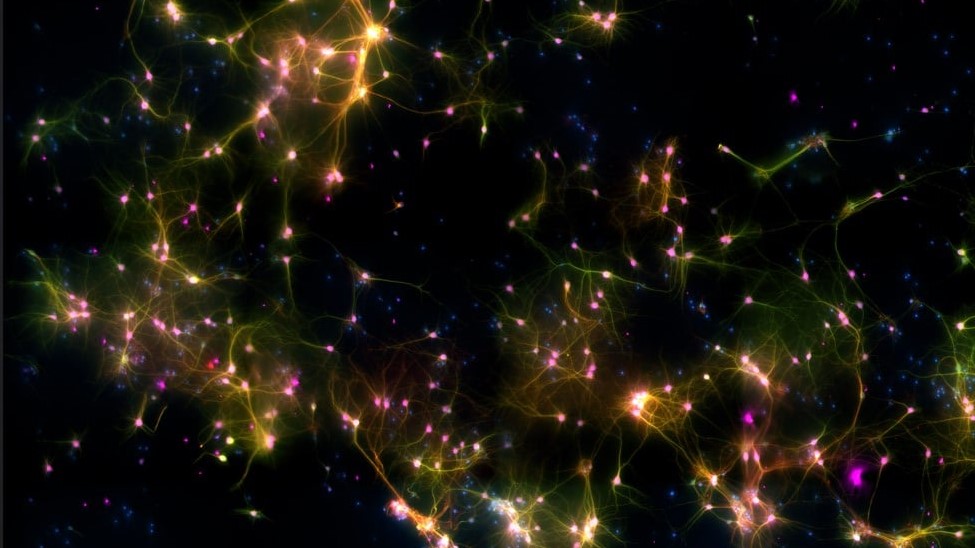

The DishBrain hardware, which was developed by Cortical Labs, consists of a small circular container, around 2 inches (5 centimeters) wide, that is lined with an array containing 1,024 active electrodes that can both send and receive electrical signals. Researchers introduced a mix of human and mouse neurons on top of these electrodes. The neurons were coaxed by researchers into growing new connections and pathways until they transformed into a complex web of brain cells that completely covered the electrodes.

The mouse cells were grown in culture from tiny neurons extracted from developing embryos. The human neurons were created using pluripotent stem cells — blank cells capable of turning into any other cell type — that were derived from blood and skin cells donated by volunteers.

In total, the neural network contained around 800,000 neurons, Kagan said. For context, this is around the same number of neurons as there are in a bee's brain, he added. Although the synthetic neural network was similar in size to the brains of small invertebrates, its simple 2D structure is much more basic than that of a living brain and therefore has slightly reduced computing power by comparison, Kagan said.

Playing the game

During the experiments, researchers used a novel computer program, known as DishServer, combined with the electrodes inside DishBrain to create a "virtual game world" in which the neurons could play "Pong," Kagan said. This may sound high-tech, but in reality, it isn't much different from playing a video game on a TV.

Using this analogy, the electrode array can be thought of like the TV screen, with each individual electrode representing a pixel on the screen; the computer program can be thought of like the game disk that provides the code to play the game; the neuron-electrode interface within DishBrain can be thought of like the game console and controllers that facilitate the game; and the neurons can be thought of as the person playing the game.

When the computer program activates a particular electrode, that electrode generates an electrical signal that the neurons can interpret, similar to how a pixel on a screen lights up and becomes visible to a person playing a game. By activating multiple electrodes in a pattern, the program can create a shape, in this case a ball, that moves across the array or "TV screen."

A separate section of the array monitors the electrical signals given off by the neurons in response to the "ball" signals. These neuron signals can then be interpreted by the computer program and used to maneuver the paddle in the virtual game world. This region of the neuron-electrode interface can be thought of as the game controller.

If the neuronal signals mirror those that move the ball, then the paddle will hit the ball. But if the signals do not match up, it will miss. The computer program then issues a second feedback signal to the controlling neurons to tell them whether they have hit the ball.
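For readers who think in code, the loop described above can be sketched roughly as follows. This is a toy illustration only, not the DishServer software: the 8-by-8 grid, the function names and the idea of comparing activity from an "up" region and a "down" region are all assumptions made for the example.

```python
# Toy sketch of the "screen" and "controller" roles described in the article.
# Everything here is hypothetical: the real array has 1,024 electrodes, and
# the article does not say how recorded activity is turned into paddle motion.

GRID_ROWS = 8
GRID_COLS = 8


def ball_to_electrode(ball_row, ball_col):
    """Pick the electrode (the 'pixel') to stimulate for a ball position."""
    return ball_row * GRID_COLS + ball_col


def decode_paddle_move(up_activity, down_activity):
    """Turn activity from two assumed 'controller' regions into a paddle step."""
    if up_activity > down_activity:
        return -1  # move toward row 0
    if down_activity > up_activity:
        return 1   # move toward the last row
    return 0       # equal activity: stay put


def step_paddle(paddle_row, move):
    """Apply the step while keeping the paddle on the grid."""
    return max(0, min(GRID_ROWS - 1, paddle_row + move))


def is_hit(paddle_row, ball_row):
    """The paddle hits only if it sits on the ball's row."""
    return paddle_row == ball_row
```

In this sketch, one pass of the game would stimulate the electrode returned by `ball_to_electrode`, read activity from the controller regions, move the paddle with `step_paddle`, and check `is_hit` to decide which feedback signal to send.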

Teaching neurons

The secondary feedback signal can be thought of as a reward system that the computer program uses to teach the neurons to get better at hitting the ball.

Without the reward system, it would be very hard to reinforce desirable behavior, such as hitting the ball, and discourage unfavorable behavior, like missing the ball. Left to their own devices, the neurons in DishBrain would randomly move the paddle without any consideration of where the ball is because it makes no difference to the neurons if they hit the ball or not.

To get around this problem, the researchers turned to a theory known as the free energy principle, "which proposes that cells at this level try to minimize the unpredictability in their environment," study co-author Karl Friston, a theoretical neuroscientist at University College London in the U.K., said in a statement. Friston was the first researcher to put forward the idea for the free energy principle in a 2009 paper published in the journal Trends in Cognitive Sciences.

In a sense, "the neurons are trying to create a predictable model of the world," Kagan told Live Science. This is where the secondary feedback signal, which tells the neurons whether they have hit or missed the ball, comes into play.

When the neurons have successfully hit the ball, the feedback signal is delivered at a similar voltage and location to the signals used by the computer to move the ball. But when the neurons have missed the ball, the feedback signal strikes at a random voltage and multiple locations. Per the free energy principle, the neurons want to minimize the amount of random signals they are receiving, so they start to change how they move the "paddle" in relation to the "ball."
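The contrast between the two kinds of feedback can be illustrated with a short sketch. It is a simplified picture under stated assumptions, not the researchers' method: the function name, the number of stimulation sites and the voltage range (arbitrary units) are all invented for the example.

```python
import random


def feedback_signal(hit, ball_site, ball_voltage, n_sites=8, rng=random):
    """Return a list of (site, voltage) pulses to deliver as feedback.

    Hypothetical helper: a hit produces one predictable pulse that matches
    the ball signal; a miss produces several pulses at random sites and
    random voltages (arbitrary units between 0 and 1).
    """
    if hit:
        return [(ball_site, ball_voltage)]
    n_pulses = rng.randint(2, n_sites)            # "multiple locations"
    sites = rng.sample(range(n_sites), n_pulses)  # distinct random sites
    return [(site, rng.uniform(0.0, 1.0)) for site in sites]
```

Calling `feedback_signal(True, 3, 0.5)` always returns the same single pulse, while `feedback_signal(False, 3, 0.5)` returns a different scatter of pulses each time, which is the unpredictability the neurons are thought to avoid.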

Within five minutes of receiving this feedback, the neurons were increasing how often they hit the ball. After 20 minutes, the neurons were able to string together short rallies where they continually hit the ball as it bounced off the "walls" in the game. You can see how quickly the neurons progressed in this online simulation.

Harry is a U.K.-based senior staff writer at Live Science. He studied marine biology at the University of Exeter before training to become a journalist. He covers a wide range of topics including space exploration, planetary science, space weather, climate change, animal behavior and paleontology. His recent work on the solar maximum won "best space submission" at the 2024 Aerospace Media Awards and was shortlisted in the "top scoop" category at the NCTJ Awards for Excellence in 2023. He also writes Live Science's weekly Earth from space series.
