In Images: Machines Trained to Read Minds

Machines that can read people's minds are getting closer to reality.

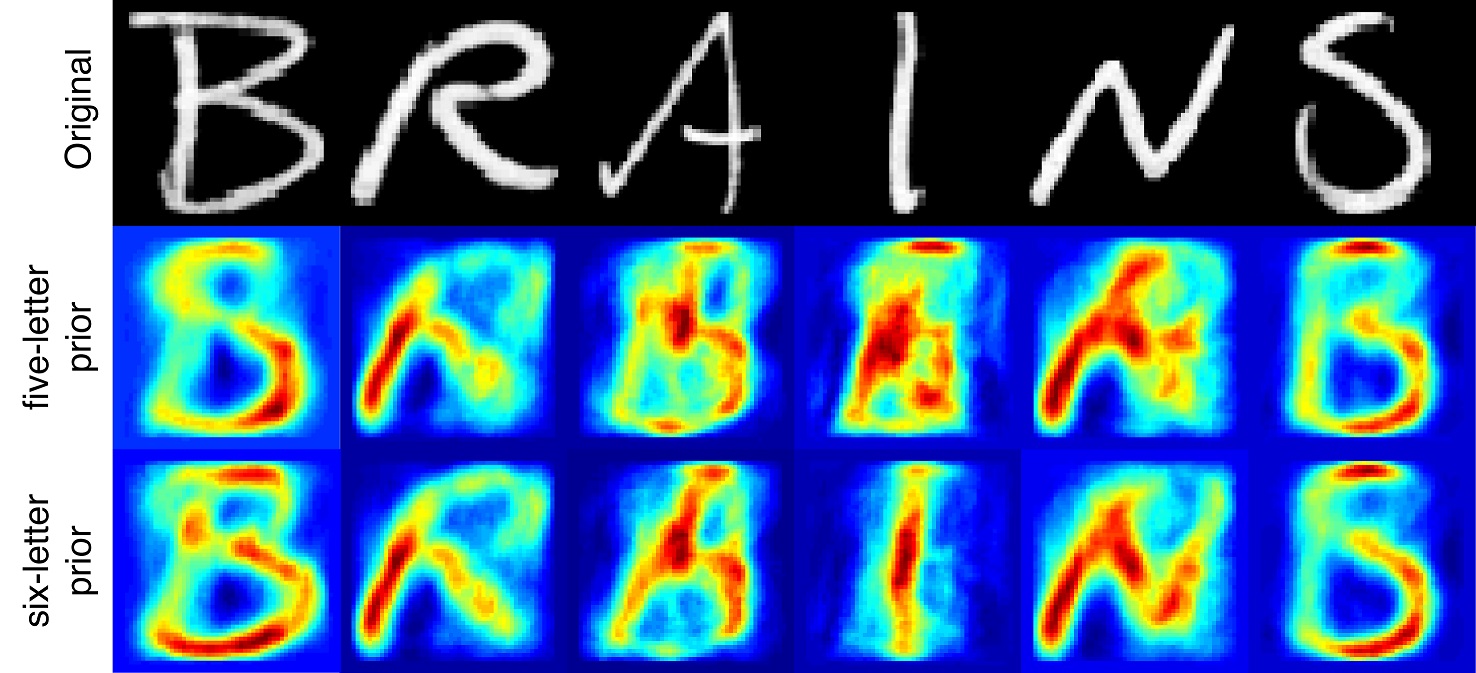

Scientists have revealed they can now use brain scans to read letters participants are viewing. In a study published July 22 in the journal NeuroImage, the researchers used functional magnetic resonance imaging (fMRI) to record the activity of the visual cortex, the brain region that processes visual information, while study participants were viewing a series of handwritten characters: B, R, A, I, N and S. By repeatedly feeding part of this data into mathematical models, the researchers were able to "teach" the machine which pattern of activity corresponded with which letter a person was viewing. The trained model was then tested on the rest of the data: it had to reconstruct the letters from the brain activity alone. Here are the results:

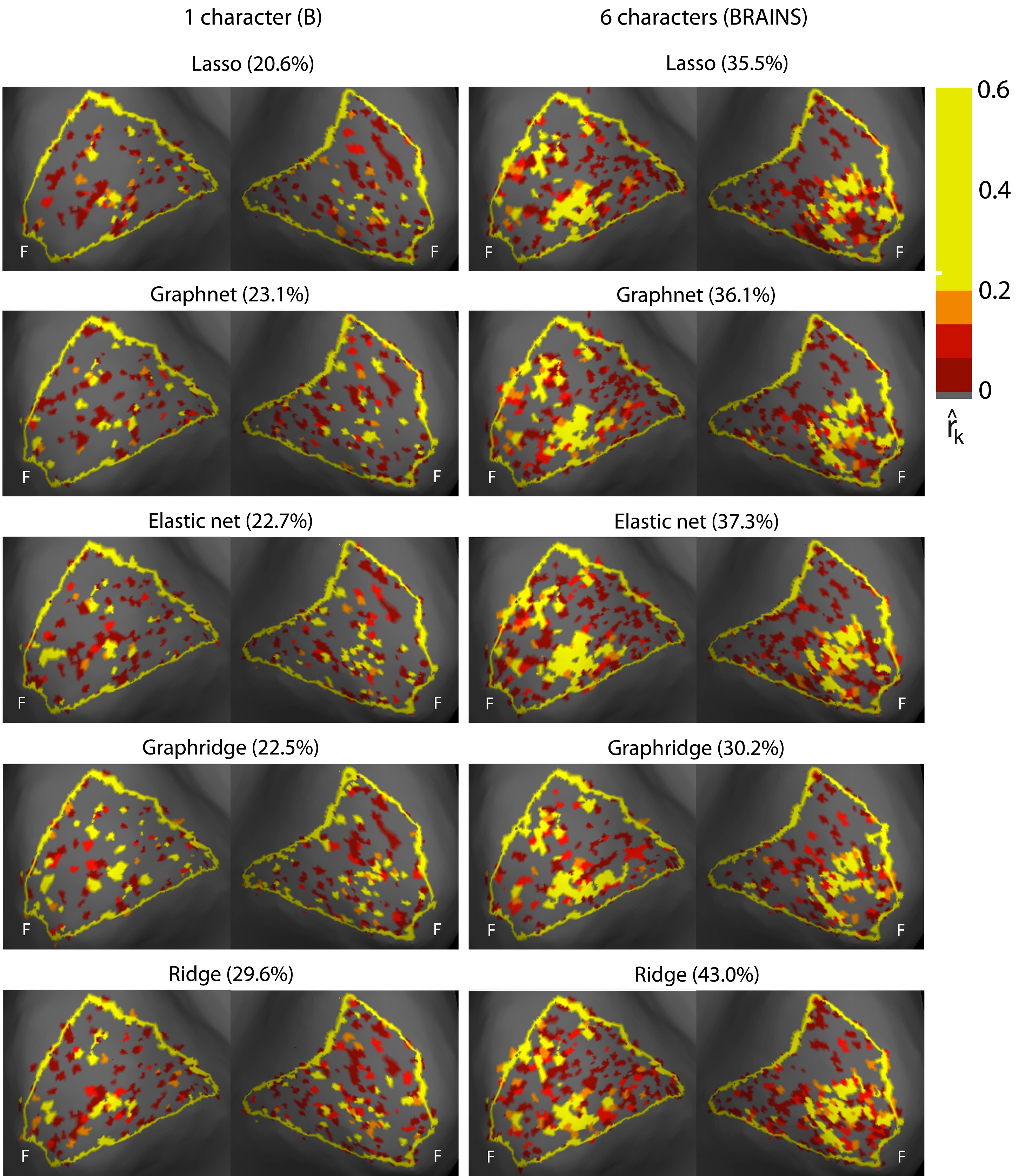

Brain activity when seeing images

The image shows the changes that occurred in the visual cortex of the brain when a participant viewed the letter B (left column) and all six letters (right column).

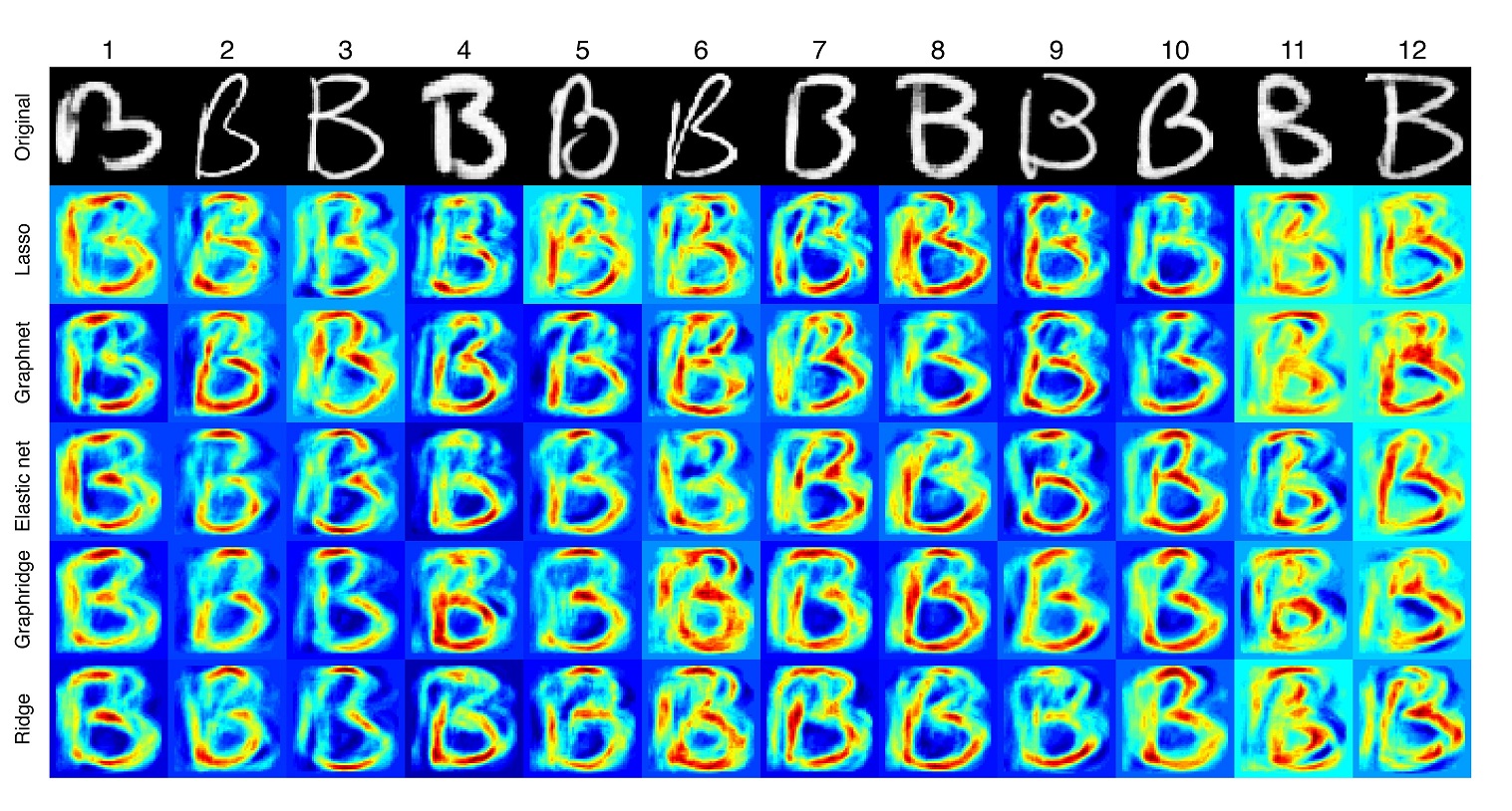

Multiple handwritten characters

The image shows reconstructions of several presentations of the character ‘B’ for one participant, produced by various algorithms.

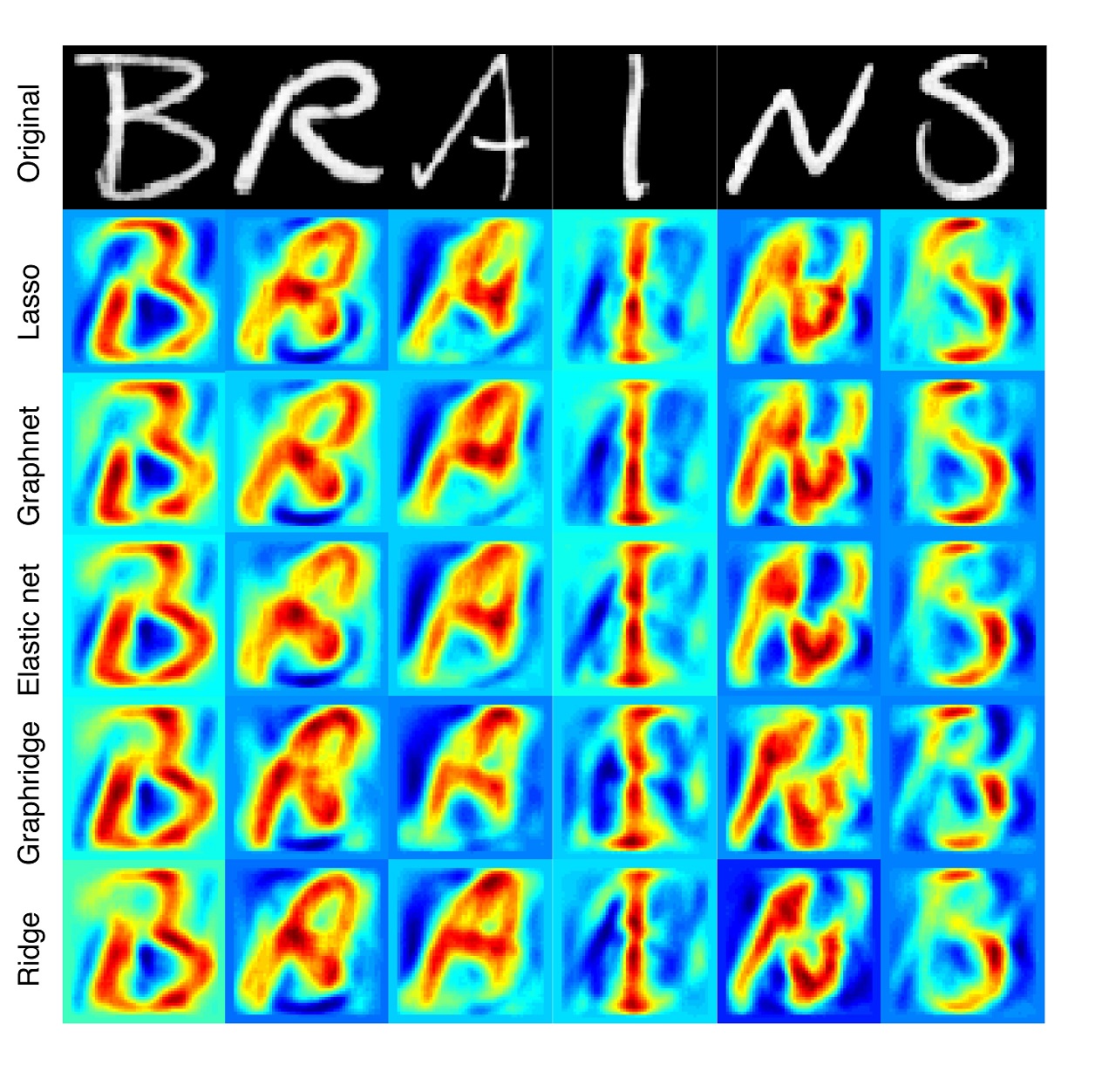

Trained models

The image shows reconstructions of different letters when the models were trained on all characters. Each algorithm (one per row) produced good reconstructions of the originals.

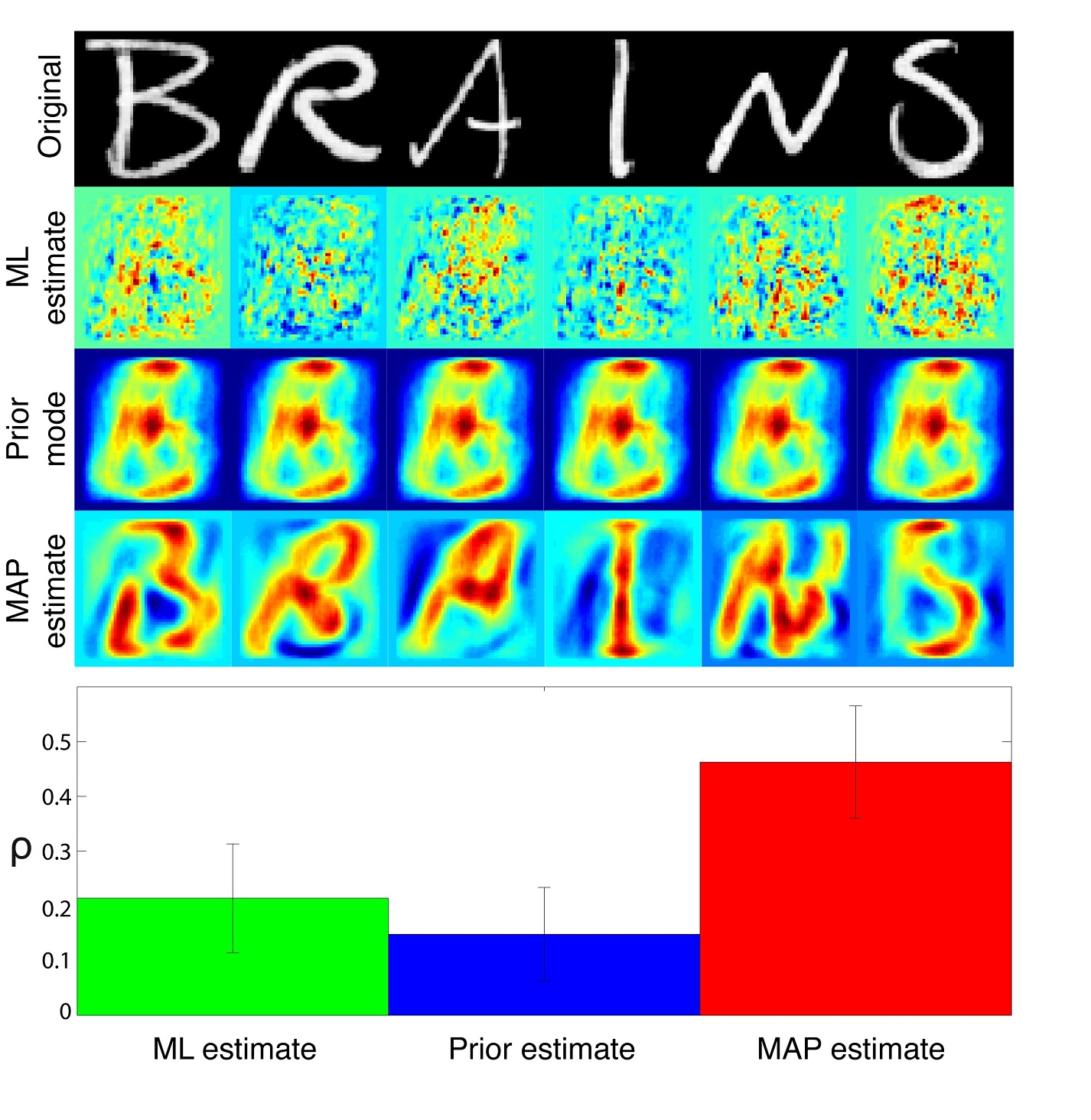

Prior knowledge

The high quality of the reconstructions (shown in the last row) was driven both by a good estimate of how the brain responds to visual information (shown in the second row) and by teaching the model what the letters look like (shown in the third row).
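To make that idea concrete, here is a minimal sketch of how a decoder might combine those two ingredients. It is not the study's actual pipeline: the data are synthetic, the two bar-shaped "letters", the ridge regression, the Gaussian prior and every name in the code are assumptions chosen for illustration. The sketch first estimates how simulated "voxels" respond to images, then reconstructs held-out images twice, once with a prior learned from the training images and once with an almost uninformative prior.

```python
import numpy as np

rng = np.random.default_rng(0)
n_pixels, n_voxels, n_train, n_test = 64, 30, 400, 50

# Two hypothetical 8x8 "letters": a vertical bar and a horizontal bar.
templates = np.zeros((2, 8, 8))
templates[0, :, 2:4] = 1.0
templates[1, 3:5, :] = 1.0
templates = templates.reshape(2, n_pixels)

def sample_images(n):
    """Noisy 'handwritten' variants of the two templates."""
    labels = rng.integers(0, 2, size=n)
    return templates[labels] + 0.2 * rng.standard_normal((n, n_pixels))

# Simulated brain: each voxel responds linearly to the pixels, plus noise.
true_W = rng.standard_normal((n_voxels, n_pixels))

def simulate_responses(images):
    noise = 0.5 * rng.standard_normal((len(images), n_voxels))
    return images @ true_W.T + noise

X_train, X_test = sample_images(n_train), sample_images(n_test)
Y_train, Y_test = simulate_responses(X_train), simulate_responses(X_test)

# Ingredient 1: estimate how the "brain" responds (ridge regression).
gram = X_train.T @ X_train + 1.0 * np.eye(n_pixels)
W_hat = np.linalg.solve(gram, X_train.T @ Y_train).T  # (voxels, pixels)
noise_var = np.mean((Y_train - X_train @ W_hat.T) ** 2)

# Ingredient 2: learn what the letters look like (Gaussian image prior).
prior_mean = X_train.mean(axis=0)
prior_cov = np.cov(X_train, rowvar=False) + 1e-3 * np.eye(n_pixels)

def decode(Y, mean, cov):
    """Posterior-mean image for a linear Gaussian model with a Gaussian prior."""
    cov_inv = np.linalg.inv(cov)
    A = cov_inv + W_hat.T @ W_hat / noise_var
    b = Y @ W_hat / noise_var + cov_inv @ mean
    return np.linalg.solve(A, b.T).T

recon_prior = decode(Y_test, prior_mean, prior_cov)
recon_flat = decode(Y_test, np.zeros(n_pixels), 1e3 * np.eye(n_pixels))

err_prior = np.mean((recon_prior - X_test) ** 2)
err_flat = np.mean((recon_flat - X_test) ** 2)
print(f"mean squared error with learned prior: {err_prior:.3f}")
print(f"mean squared error with flat prior:    {err_flat:.3f}")
```

In this toy setup there are fewer voxels than pixels, so the response model alone cannot pin down every pixel; the learned prior fills in the rest, which is the same division of labor the caption describes.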

New letters

The model’s performance remained good even when it had to reconstruct a letter that it hadn’t seen before. The reconstructions were better, however, if the model had been trained on the new letter class.
