World's largest computer chip WSE-3 will power massive AI supercomputer 8 times faster than the current record-holder

Cerebras' Wafer Scale Engine 3 (WSE-3) chip contains four trillion transistors and will power the 8-exaFLOP Condor Galaxy 3 supercomputer one day.

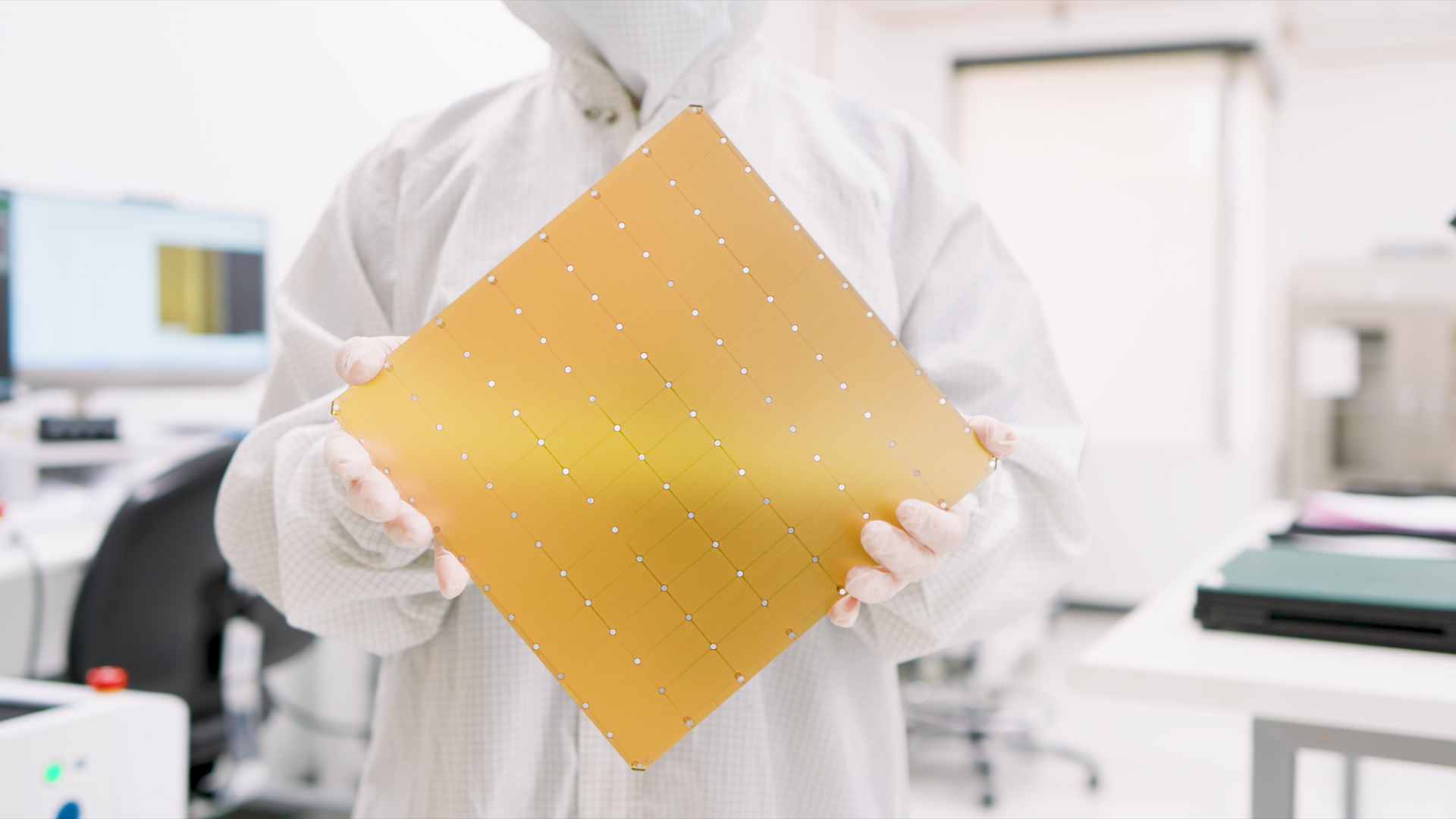

Scientists have built the world's largest computer chip — a behemoth packed with 4 trillion transistors. The massive chip will one day be used to run a monstrously powerful artificial intelligence (AI) supercomputer, its makers say.

The new Wafer Scale Engine 3 (WSE-3) is the third generation of the supercomputing company Cerebras' platform designed to power AI systems, such as OpenAI's GPT-4 and Anthropic's Claude 3 Opus.

The chip, which includes 900,000 AI cores, is composed of a silicon wafer measuring 8.5 by 8.5 inches (21.5 by 21.5 centimeters) — just like its 2021 predecessor the WSE-2.

The new chip uses the same amount of power as its predecessor but is twice as powerful, company representatives said in a press release. The previous chip, by contrast, included 2.6 trillion transistors and 850,000 AI cores — meaning the company has roughly adhered to Moore's Law, which states that the number of transistors in a computer chip roughly doubles every two years.

In comparison, one of the most powerful chips currently used to train AI models is the Nvidia H200 graphics processing unit (GPU). Yet Nvidia's monster GPU has a paltry 80 billion transistors, roughly 50 times fewer than the WSE-3's 4 trillion.
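As a quick sanity check on that comparison, the ratio can be worked out from the two transistor counts quoted in this article. This is a minimal sketch; the variable names are illustrative.

```python
# Transistor counts as quoted in the article.
WSE3_TRANSISTORS = 4_000_000_000_000   # 4 trillion (Cerebras WSE-3)
H200_TRANSISTORS = 80_000_000_000      # 80 billion (Nvidia H200)

# How many times more transistors the WSE-3 has than the H200.
ratio = WSE3_TRANSISTORS / H200_TRANSISTORS
print(f"WSE-3 has {ratio:.0f}x as many transistors as the H200")
```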

The WSE-3 chip will one day be used to power the Condor Galaxy 3 supercomputer, which will be based in Dallas, Texas, company representatives said in a separate statement released March 13.

The Condor Galaxy 3 supercomputer, which is under construction, will be made up of 64 Cerebras CS-3 AI system "building blocks" that are powered by the WSE-3 chip. When stitched together and activated, the entire system will produce 8 exaFLOPs of computing power.

Then, when combined with the Condor Galaxy 1 and Condor Galaxy 2 systems, the entire network will reach a total of 16 exaFLOPs.

(Floating-point operations per second, or FLOPs, measure a system's numerical computing performance; 1 exaFLOP is one quintillion (10^18) FLOPs.)

By contrast, the most powerful supercomputer in the world right now is Oak Ridge National Laboratory's Frontier supercomputer, which delivers roughly 1 exaFLOP of computing power.
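The headline figures above can be broken down with some simple arithmetic. The sketch below takes the quoted numbers at face value and derives three values that the article does not state directly: the throughput per CS-3 system, the combined share of Condor Galaxy 1 and 2, and the ratio to Frontier. The variable names are illustrative.

```python
EXA = 10**18   # 1 exaFLOP = 10^18 floating-point operations per second
PETA = 10**15  # 1 petaFLOP = 10^15 floating-point operations per second

cg3_total = 8 * EXA        # Condor Galaxy 3, as quoted
cs3_systems = 64           # CS-3 "building blocks" in Condor Galaxy 3
network_total = 16 * EXA   # Condor Galaxy 1 + 2 + 3 combined, as quoted
frontier = 1 * EXA         # Frontier, roughly, as quoted

per_cs3 = cg3_total / cs3_systems          # derived: throughput of one CS-3
cg1_plus_cg2 = network_total - cg3_total   # derived: share of the two older systems
vs_frontier = cg3_total / frontier         # derived: ratio behind "8 times faster"

print(f"Per CS-3 system: {per_cs3 / PETA:.0f} petaFLOPs")
print(f"Condor Galaxy 1 + 2 combined: {cg1_plus_cg2 / EXA:.0f} exaFLOPs")
print(f"Condor Galaxy 3 vs. Frontier: {vs_frontier:.0f}x")
```

On these figures, each CS-3 contributes about 125 petaFLOPs, and the two earlier Condor Galaxy systems together account for the remaining 8 exaFLOPs of the 16-exaFLOP network.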

The Condor Galaxy 3 supercomputer will be used to train future AI systems that are up to 10 times bigger than GPT-4 or Google's Gemini, company representatives said. GPT-4, for instance, is reported to have around 1.76 trillion parameters (the variables a model learns during training), according to a rumored leak; the Condor Galaxy 3 could handle AI systems with around 24 trillion parameters.
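To give a rough sense of scale, the sketch below estimates how much storage the weights alone would need at those parameter counts. It assumes 2 bytes per parameter (16-bit weights), which is an assumption for illustration and not a figure from Cerebras or this article; the function name `weight_storage_tb` is likewise hypothetical. Training needs considerably more memory than the weights alone.

```python
BYTES_PER_PARAM = 2  # ASSUMPTION: 16-bit weights; not stated in the article


def weight_storage_tb(num_params: float, bytes_per_param: int = BYTES_PER_PARAM) -> float:
    """Return the storage needed for the weights alone, in terabytes (10^12 bytes)."""
    return num_params * bytes_per_param / 1e12


gpt4_params = 1.76e12  # rumored GPT-4 figure quoted in the article
cg3_params = 24e12     # model size quoted for Condor Galaxy 3

print(f"GPT-4 (rumored): {weight_storage_tb(gpt4_params):.2f} TB")
print(f"24-trillion-parameter model: {weight_storage_tb(cg3_params):.0f} TB")
```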

Keumars is the technology editor at Live Science. He has written for a variety of publications including ITPro, The Week Digital, ComputerActive, The Independent, The Observer, Metro and TechRadar Pro. He has worked as a technology journalist for more than five years, having previously held the role of features editor with ITPro. He is an NCTJ-qualified journalist and has a degree in biomedical sciences from Queen Mary, University of London. He's also registered as a foundational chartered manager with the Chartered Management Institute (CMI), having qualified as a Level 3 Team leader with distinction in 2023.
