Physicists Gear Up For Huge Data Flow

This Behind the Scenes article was provided to LiveScience in partnership with the National Science Foundation.

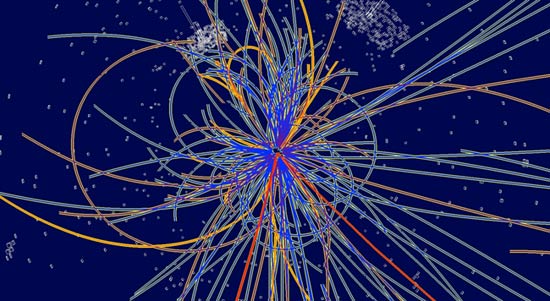

University of Nebraska-Lincoln particle physicists Ken Bloom and Aaron Dominguez have teamed up with computer scientist David Swanson to build a computing center for the benefit of scientists at their university and across the country. The center’s goal is to manage the flood of information that will pour, starting in mid-2008, from the world’s next-generation particle accelerator, the Large Hadron Collider (LHC). The LHC is an underground ring 27 kilometers in circumference at the European Organization for Nuclear Research (CERN) near Geneva, Switzerland.

Detectors stationed around the LHC ring will produce 15 trillion gigabytes of data every year, data that will be farmed out to computing centers worldwide. The Nebraska center will allow physicists from many universities to analyze data from the Compact Muon Solenoid (CMS) experiment, a detector weighing more than 12,000 tons that will record tracks created by hundreds of particles emerging from each collision in the accelerator. The center will also provide computing power for researchers in other scientific fields.

As CMS began looking for computing centers to host its data, professors Bloom and Dominguez considered whether such a project would suit the University of Nebraska. "After talking with David Swanson, who directs our Research Computing Facility, we realized that this was an ideal project for us to collaborate on," said Bloom.

In the LHC computing model, data from the experiments will flow through tiers. The Tier 0 center at CERN takes the data directly from the experiments, stores a copy and sends it to Tier 1 sites. CMS has seven Tier 1 sites in seven nations. Each Tier 1 site partitions its portion of the data based on the types of particles detected and sends these sub-samples to Tier 2 sites. At the Tier 2 sites, researchers and students finally get their hands on the data. CMS has thirty such sites, seven of them in the U.S., and one of these is at the University of Nebraska.

Physicists will use the data stored at these sites to search for never-before-seen particles or extra dimensions of space by submitting specialized programs to run on the data. With forty U.S. institutions involved in the CMS collaboration, the seven U.S. centers will see a lot of traffic.

One of the challenges of building a Tier 2 site is preparing it for heavy data flow. Already, the Nebraska group has achieved the fastest transfer rates in the national network that connects Fermi National Accelerator Laboratory (Fermilab), the U.S. Tier 1 site, with the U.S. Tier 2 sites. "We are really good at moving data from Fermilab to our Tier 2 center," said Bloom without pretension; the group has achieved the fastest rates for any Tier 1-to-Tier 2 connection worldwide. "We can manage a terabyte an hour easily, and a terabyte in half an hour is possible."
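
To put the quoted transfer figures in more familiar network units, the short Python sketch below converts "a terabyte an hour" and "a terabyte in half an hour" into sustained rates. It assumes the decimal terabyte (1,000 gigabytes, or 10^12 bytes) and a perfectly steady transfer; the function and variable names are illustrative and not part of any LHC software.

```python
# Assumption: decimal units, 1 TB = 1,000 GB = 10**12 bytes.
TERABYTE_BYTES = 1_000 * 10**9


def sustained_rate(bytes_moved, seconds):
    """Return the average rate as (megabytes per second, gigabits per second)."""
    megabytes_per_s = bytes_moved / seconds / 1e6
    gigabits_per_s = bytes_moved * 8 / seconds / 1e9
    return megabytes_per_s, gigabits_per_s


# The two scenarios mentioned in the quote, assuming a steady transfer.
for label, seconds in [("1 TB per hour", 3600), ("1 TB per half hour", 1800)]:
    mb_s, gbit_s = sustained_rate(TERABYTE_BYTES, seconds)
    print(f"{label}: {mb_s:.0f} MB/s, {gbit_s:.2f} Gbit/s")
```

Under these assumptions, a terabyte an hour works out to roughly 278 megabytes per second (about 2.2 gigabits per second), and a terabyte in half an hour doubles that.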

Because the LHC will produce so much data, the terabyte, or 1,000 gigabytes, is the standard unit of data among scientists involved with LHC computing.

Apart from building lightning-fast network connections, Bloom and Dominguez are creating a computing center whose benefits extend beyond particle physics research. According to Dominguez, "It’s a challenge to build a facility that could be effectively shared with a worldwide community and local researchers, to make something bigger, better, more useful to university research as a whole."

With this goal in mind, they designed a system that could support parallel computations. Programs that perform multiple, simultaneous calculations on different processors and bring the results together at the end are powerful tools for chemists and nanoscientists in particular. As the CMS Tier 2 site at the University of Nebraska is part of the U.S. Open Science Grid, researchers from many fields will analyze data using its computing power during times when CMS researchers aren’t keeping the site busy.
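
For readers curious what a parallel computation of this kind looks like, here is a minimal Python sketch of the general pattern: split the work, run the pieces at the same time on separate processors, and combine the partial results at the end. It is a toy example built on Python's standard `concurrent.futures` module, not the software used at the Nebraska center; the sum-of-squares task and all function names are assumptions chosen purely for illustration.

```python
from concurrent.futures import ProcessPoolExecutor


def chunked(values, n_chunks):
    """Split values into n_chunks contiguous slices of nearly equal size."""
    size, extra = divmod(len(values), n_chunks)
    chunks, start = [], 0
    for i in range(n_chunks):
        end = start + size + (1 if i < extra else 0)
        chunks.append(values[start:end])
        start = end
    return chunks


def partial_sum_of_squares(chunk):
    """The piece of work one worker does independently (illustrative task)."""
    return sum(x * x for x in chunk)


def parallel_sum_of_squares(values, workers=4, executor_cls=ProcessPoolExecutor):
    """Hand one chunk to each worker, then bring the partial results together."""
    with executor_cls(max_workers=workers) as pool:
        partials = list(pool.map(partial_sum_of_squares, chunked(values, workers)))
    return sum(partials)


if __name__ == "__main__":
    data = list(range(1000))
    print(parallel_sum_of_squares(data, workers=4))
```

Each worker process handles its own slice without waiting for the others, and only the final `sum` needs all of the partial answers. Real scientific codes follow the same outline at much larger scale, typically with dedicated libraries for passing data between machines.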

Editor's Note: This research was supported by the National Science Foundation (NSF), the federal agency charged with funding basic research and education across all fields of science and engineering. See the Behind the Scenes Archive.
