Movie Clips Reconstructed From Brain Waves

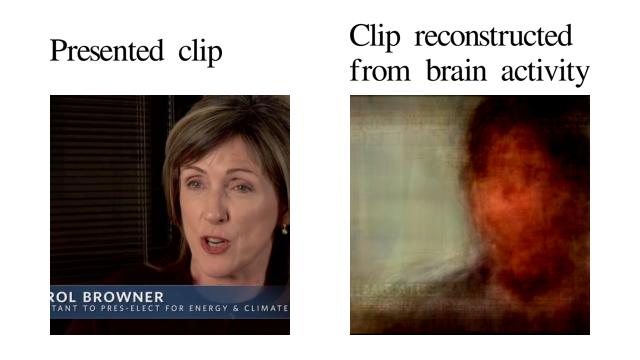

Welcome to the future: Scientists can now peer inside the brain and reconstruct videos of what a person has seen, based only on their brain activity.

The reconstructed videos could be seen as a primitive — and somewhat blurry — form of mind reading, though researchers are decades from being able to decode anything as personal as memories or thoughts, if such a thing is even possible. Currently, the mind-reading technique requires powerful magnets, hours of time and millions of seconds of YouTube videos.

But in the long term, similar methods could be used to communicate with stroke patients or coma patients living in a "locked-in" state, said study researcher Jack Gallant, a neuroscientist at the University of California, Berkeley.

"The idea is that they would be able to visualize a movie of what they want to talk about, and you would be able to decode that," Gallant told LiveScience.

Decoding the brain

Gallant's team has decoded the brain before. In 2008, the researchers reported that they'd developed a computer model that takes in brain activity data from functional magnetic resonance imaging (fMRI), compares it to a library of photos, and spits out the photo that the person was most likely looking at when the brain activity measurements were taken. That technique was accurate at picking the right photo nine out of 10 times.

But reconstructing video instead of still images is much tougher, Gallant said. That's because fMRI doesn't measure the activity of brain cells directly; it measures blood flow to active areas of the brain. This blood flow happens much more slowly than the zippy communication of the billions of neurons in the brain.

So Gallant and postdoctoral researcher Shinji Nishimoto built a two-part computer program to bridge that gap. One part was a model of thousands of virtual neurons. The other was a model of how the activity of neurons affects the blood flow to active regions of the brain. Using this virtual bridge, the researchers were able to translate information from the slow blood flow into the speedy language of neuron activity.
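For readers who want a feel for the blood-flow half of that bridge, here is a minimal Python sketch. It is not the researchers' model: the function name `predict_blood_flow`, the response curve `TOY_HRF` and all of the numbers are assumptions made up for illustration. The sketch only shows the general idea that a brief burst of neural activity produces a delayed, smeared-out blood-flow signal.

```python
def predict_blood_flow(neural_activity, response_curve):
    """Smear fast neural activity into a slow signal by convolving it
    with a response curve (one value per second in both lists)."""
    n = len(neural_activity)
    predicted = [0.0] * n
    for t in range(n):
        for lag, weight in enumerate(response_curve):
            if t - lag >= 0:
                predicted[t] += weight * neural_activity[t - lag]
    return predicted


# Hypothetical response curve (an assumption, not measured data):
# blood flow peaks about three seconds after the neurons fire.
TOY_HRF = [0.0, 0.1, 0.3, 0.4, 0.2]

# One second of neural activity at t = 1, silence otherwise.
burst = [0, 1, 0, 0, 0, 0, 0, 0]
signal = predict_blood_flow(burst, TOY_HRF)

for second, value in enumerate(signal):
    print(second, round(value, 2))
```

In this toy run, the neurons fire at second 1 but the simulated blood-flow signal is strongest at second 4, which is the kind of lag a decoder has to account for.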

Movie night … for science

Next came the fun part: Three volunteers, all neuroscientists on the project, watched hours of video clips while inside an fMRI machine. Outside volunteers weren't used because of the amount of time and effort involved, and because the neuroscientists were highly motivated to focus on the videos, ensuring better brain images.

Using the brain-imaging data, Gallant and his colleagues built a "dictionary" that linked brain activity patterns to individual video clips — much like their 2008 study did with pictures. This brain-movie translator was able to identify the movie that produced a given brain signal 95 percent of the time, plus or minus one second in the clip, when given 400 seconds of clips to choose from. Even when the computer model was given 1 million seconds of clips, it picked the right second more than 75 percent of the time.
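The matching step can be pictured with a short Python sketch. This is an illustration under stated assumptions rather than the study's code: the clip names, the numbers and the choice of a simple correlation score are all hypothetical. The idea is that a model predicts the brain signal each candidate clip should produce, and the program picks the clip whose prediction best matches the signal that was actually measured.

```python
import math


def correlation(a, b):
    """Pearson correlation between two equal-length lists of numbers."""
    mean_a = sum(a) / len(a)
    mean_b = sum(b) / len(b)
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    norm_a = math.sqrt(sum((x - mean_a) ** 2 for x in a))
    norm_b = math.sqrt(sum((y - mean_b) ** 2 for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # a flat signal carries no pattern to match
    return cov / (norm_a * norm_b)


def identify_clip(observed, predicted_by_clip):
    """Return the clip whose predicted signal best matches the observed one."""
    return max(
        predicted_by_clip,
        key=lambda clip: correlation(observed, predicted_by_clip[clip]),
    )


# Hypothetical predicted brain signals for three candidate clips.
predictions = {
    "clip_a": [0.1, 0.9, 0.2, 0.1],
    "clip_b": [0.8, 0.1, 0.1, 0.7],
    "clip_c": [0.3, 0.3, 0.9, 0.2],
}

# Hypothetical measured signal, a noisy version of clip_b's pattern.
observed = [0.7, 0.2, 0.0, 0.8]
best = identify_clip(observed, predictions)
print(best)
```

With only three candidates the task is easy. The figures reported above suggest it gets harder as the library grows, with accuracy dropping from 95 percent at 400 seconds of clips to just over 75 percent at 1 million seconds.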

With this accurate brain-to-movie-clip dictionary in hand, the researchers then introduced a new level of challenge. They gave the computer model 18 million seconds of new clips, all randomly downloaded from YouTube videos. None of the experiment participants had ever seen these clips.

The researchers then ran the participants' brain activity through the model, commanding it to pick the clips most likely to trigger each second of activity. The result was a from-scratch reconstruction of the person's visual experience of the movie. In other words, if the participants had seen a clip that showed Steve Martin sitting on the right side of the screen, the program could look at their brain activity and pick the YouTube clip that looked most like Martin sitting on the right side of the screen.

You can see the video clips here and here. In the first clip, the original video is on the left, while an average of the top 100 clips that were closest based on brain activity is on the right. (Averages were necessary, and also the reason for the blur, Gallant said, because even 18 million seconds of YouTube videos doesn’t come close to capturing all of the visual variety in the original clips.) The second segment of the video shows the original clip at the top and reconstructions below. The far-left column is average reconstructions, while the remaining columns are individual videos picked out by the program as being closest to the original.
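The averaging step, and the blur it causes, can be sketched in a few lines of Python. Everything here is a toy assumption: the three-pixel "frames", the match scores and the function name `reconstruct_frame` are invented for illustration, and the study averaged the top 100 clips rather than the top two.

```python
def reconstruct_frame(scores, frames, top_k):
    """Average the pixels of the top_k best-scoring clips."""
    ranked = sorted(scores, key=scores.get, reverse=True)[:top_k]
    n_pixels = len(frames[ranked[0]])
    return [
        sum(frames[name][i] for name in ranked) / len(ranked)
        for i in range(n_pixels)
    ]


# Hypothetical three-pixel frames (0.0 is dark, 1.0 is bright).
frames = {
    "clip_a": [0.0, 1.0, 1.0],
    "clip_b": [0.0, 1.0, 0.0],
    "clip_c": [1.0, 0.0, 0.0],
}

# Hypothetical scores for how well each clip matches the brain activity.
scores = {"clip_a": 0.9, "clip_b": 0.8, "clip_c": 0.1}

average = reconstruct_frame(scores, frames, top_k=2)
print(average)
```

Where the best-matching clips agree, the averaged pixel stays sharp. Where they disagree, as in the last pixel here, the average lands in between, which is one way to picture the ghostly blur in the reconstructions.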

Watching a mind movie

The average videos look like ghostly but recognizable facsimiles of the originals. The blurriness is largely because the YouTube library of clips is so limited, making exact matches tough, Gallant said.

"Eighteen million seconds is really a vanishingly small fraction of the things you could see in your life," he said.

The mind-reading method is limited to the basic visual areas of the brain, not the higher-functioning centers of thought and reason such as the frontal cortex. However, Gallant and his colleagues are working to build models that would mimic other brain areas. In the short term, these models could be used to understand how the brain works, much as environmental scientists use computer models of the atmosphere to understand weather and climate.

In the long term, the hope is that such technology could be used to build brain-machine interfaces that would allow people with brain damage to communicate by thinking and having those thoughts translated through a computer, Gallant said. Potentially, you could measure brain activity during dreams or hallucinations and then watch these fanciful states on the big screen.

If those predictions come true, Gallant said, there could be ethical issues involved. He and his colleagues are staunchly opposed to measuring anyone's brain activity without their knowledge and consent. Right now, though, secret brain wiretapping is far-fetched, considering that the technique requires a large, noisy fMRI machine and the full cooperation of the subject.

Not only that, but reading thoughts, memories and dreams may not be as simple as decoding simple visual experiences, Gallant said. The link between how our brain processes what we see and how it processes what we imagine isn't clear.

"This model will be a starting point for trying to decode visual imagery," Gallant said. "But how close to the ending point is hard to tell."

You can follow LiveScience senior writer Stephanie Pappas on Twitter @sipappas. Follow LiveScience for the latest in science news and discoveries on Twitter @livescience and on Facebook.

Stephanie Pappas is a contributing writer for Live Science, covering topics ranging from geoscience to archaeology to the human brain and behavior. She was previously a senior writer for Live Science but is now a freelancer based in Denver, Colorado, and regularly contributes to Scientific American and The Monitor, the monthly magazine of the American Psychological Association. Stephanie received a bachelor's degree in psychology from the University of South Carolina and a graduate certificate in science communication from the University of California, Santa Cruz.
