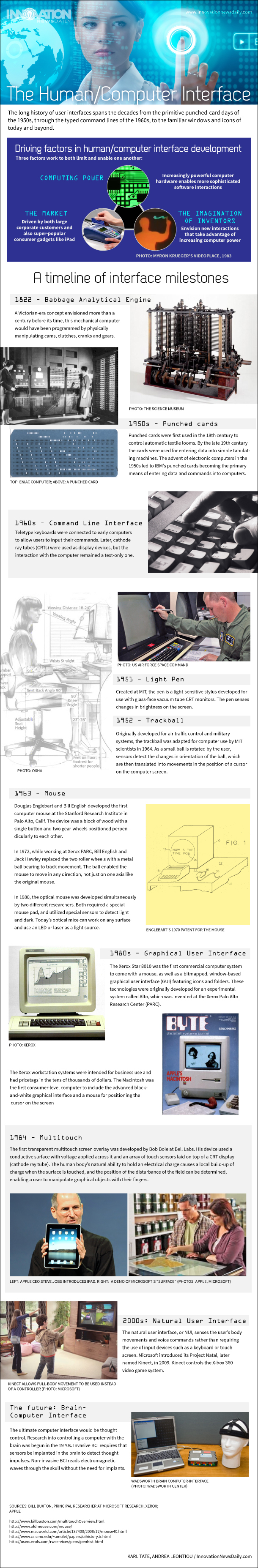

How the Human/Computer Interface Works (Infographics)

The long history of user interfaces spans the decades from the primitive punched-card days of the 1950s, through the typed command lines of the 1960s, to the familiar windows and icons of today and beyond.

Three factors work to both limit and enable human/computer interface development:

- Computing Power: Increasingly powerful computer hardware enables more sophisticated software interactions.

- The Imagination of Inventors: Software designers envision new interactions that take advantage of increasing computer power.

- The Market: Demand comes from both large corporate customers and hugely popular consumer gadgets like the iPad.

A timeline of computer interface milestones:

1822: The Babbage Analytical Engine was a Victorian-era concept envisioned more than a century before its time. This mechanical computer would have been programmed by physically manipulating cams, clutches, cranks and gears.


1950s: Punched cards were first used in the 18th century to control automatic textile looms. By the late 19th century the cards were used for entering data into simple tabulating machines. The advent of electronic computers in the 1950s led to IBM’s punched cards becoming the primary means of entering data and commands into computers.

1960s: The Command Line Interface (CLI). Teletype keyboards were connected to early computers so that users could type in their commands. Later, cathode ray tubes (CRTs) were used as display devices, but interaction with the computer remained text-only.

1951: The Light Pen. Created at MIT, the pen is a light-sensitive stylus developed for use with glass-face vacuum tube CRT monitors. The pen senses changes in brightness on the screen.

1952: The Trackball. Originally developed for air traffic control and military systems, the trackball was adapted for computer use by MIT scientists in 1964. As a small ball is rotated by the user, sensors detect the changes in orientation of the ball, which are then translated into movements in the position of a cursor on the computer screen.
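The translation from ball rotation to cursor movement can be sketched in a few lines. This is only an illustration, not how any particular trackball works: the function name `move_cursor`, the `sensitivity` factor and the idea of clamping the cursor to the screen edges are all assumptions made for the example.

```python
def move_cursor(position, delta, screen_size, sensitivity=1.0):
    """Return a new cursor position after the ball has rotated.

    position    -- current (x, y) cursor position in pixels
    delta       -- (dx, dy) rotation reported by the sensors (hypothetical units)
    screen_size -- (width, height) of the screen in pixels
    sensitivity -- assumed scale factor from sensor units to pixels
    """
    x, y = position
    dx, dy = delta
    width, height = screen_size

    # Scale the sensed rotation into pixels and add it to the old position.
    new_x = int(round(x + dx * sensitivity))
    new_y = int(round(y + dy * sensitivity))

    # Keep the cursor inside the visible screen area.
    new_x = min(max(new_x, 0), width - 1)
    new_y = min(max(new_y, 0), height - 1)
    return (new_x, new_y)


# Rolling the ball a little right and up from the middle of a 640x480 screen.
print(move_cursor((320, 240), (5, -3), (640, 480)))
```

The same idea applies to a mouse, which reports its own movement across the desk instead of the rotation of a stationary ball.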

1963: The Mouse. Douglas Engelbart and Bill English developed the first computer mouse at the Stanford Research Institute in Menlo Park, Calif. The device was a block of wood with a single button and two gear-wheels positioned perpendicularly to each other.

In 1972, while working at Xerox PARC, Bill English and Jack Hawley replaced the two roller wheels with a metal ball bearing to track movement. The ball enabled the mouse to track movement in any direction, rather than relying on two wheels that each sensed motion along a single axis.

In 1980, the optical mouse was developed simultaneously by two different researchers. Both required a special mouse pad, and utilized special sensors to detect light and dark. Today’s optical mice can work on any surface and use an LED or laser as a light source.

1980s: The Graphical User Interface. The Xerox Star 8010 was the first commercial computer system to come with a mouse, as well as a bitmapped, window-based graphical user interface (GUI) featuring icons and folders. These technologies were originally developed for an experimental system called Alto, which was invented at the Xerox Palo Alto Research Center (PARC).

The Xerox workstation systems were intended for business use and had price tags in the tens of thousands of dollars. The Apple Macintosh was the first consumer-level computer to include the advanced black-and-white graphical interface and a mouse for positioning the cursor on the screen.

1984: Multitouch. The first transparent multitouch screen overlay was developed by Bob Boie at Bell Labs. His device used a conductive surface with voltage applied across it and an array of touch sensors laid on top of a cathode ray tube (CRT) display. The human body’s natural ability to hold an electrical charge causes a local build-up of charge when the surface is touched. The position of this disturbance in the field can then be determined, enabling a user to manipulate graphical objects with their fingers.
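One simple way to turn a grid of sensor readings into a touch position is to take a weighted average of the cells that register a charge. The sketch below assumes this approach for a single finger. It is not a description of Boie's actual design, and the function name `touch_centroid`, the `threshold` parameter and the sample readings are made up for illustration.

```python
def touch_centroid(readings, threshold=0.0):
    """Estimate where a single finger touches a grid of sensors.

    readings  -- list of rows, each a list of charge readings (hypothetical units)
    threshold -- readings at or below this value are treated as noise
    Returns (row, column) as floats, or None if nothing is touched.
    """
    total = 0.0
    sum_row = 0.0
    sum_col = 0.0
    for r, row in enumerate(readings):
        for c, value in enumerate(row):
            if value > threshold:
                # Stronger readings pull the estimate toward their cell.
                total += value
                sum_row += r * value
                sum_col += c * value
    if total == 0:
        return None
    return (sum_row / total, sum_col / total)


# A finger resting between two neighboring sensors in the middle row.
grid = [
    [0, 0, 0],
    [0, 1, 1],
    [0, 0, 0],
]
print(touch_centroid(grid))
```

Tracking several fingers at once would additionally require splitting the grid into separate touched regions before averaging each one.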

2000s: Natural User Interface. The natural user interface, or NUI, senses the user’s body movements and voice commands rather than requiring the use of input devices such as a keyboard or touch screen. Microsoft introduced its Project Natal, later named Kinect, in 2009. Kinect controls the Xbox 360 video game system.

The future: Direct Brain-Computer Interface. The ultimate computer interface would be thought control. Research into controlling a computer with the brain began in the 1970s. Invasive BCI requires that sensors be implanted in the brain to detect thought impulses. Non-invasive BCI reads electromagnetic waves through the skull without the need for implants.

