Inside Your Mind, Scientists Can Eavesdrop on What You Hear

By analyzing brain activity, scientists can tell what words a person has just heard, new research reveals.

Such work could one day allow scientists to eavesdrop on the internal monologues that run through our minds, or hear the imagined speech of those unable to speak.

"This is huge for patients who have damage to their speech mechanisms because of a stroke or Lou Gehrig's disease and can't speak," said researcher Robert Knight at the University of California at Berkeley. "If you could eventually reconstruct imagined conversations from brain activity, thousands of people could benefit."

Recent studies have shown that, by carefully analyzing brain activity, scientists can tell what number a person has just seen, or how many dots a person was shown.

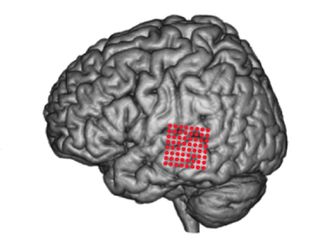

To see if they could do the same for sound, researchers focused on decoding electrical activity in a region of the human auditory system called the superior temporal gyrus, or STG. The 15 volunteers in the study were patients undergoing neurosurgery for epilepsy or brain tumors. As a result, researchers could directly access the STG with electrodes and record how it responded as the volunteers listened to words from normal conversation.

The scientists tested two methods for matching spoken sounds to the patterns of electrical activity they detected. Recorded words were played to the volunteers, and the researchers used two computational models to predict each word from the electrode recordings.

"Speech is full of rhythms, both fast and slow, and our models essentially looked at how the brain might encode these different rhythms," explained researcher Brian Pasley, a neuroscientist at the University of California at Berkeley. "One analogy is the AC or DC power in your home or car battery. In one case, the rhythms are coded by oscillating brain activity, AC mode, and in the other case, the rhythms are coded by changes in the overall level of brain activity, DC mode."

The better of the two methods turned out to involve looking at the overall level of brain activity. "We found both models worked well for relatively slow speech rhythms like syllable rate, but for the faster rhythms in speech, like rapid onsets of syllables, the DC mode worked better," Pasley said.

The researchers were able to reconstruct sounds close enough to the original words that the investigators could identify the words at better-than-chance rates. Future prosthetic devices "could either synthesize the actual sound a person is thinking, or just write out the words with a type of interface device," Pasley said.

"I didn't think it could possibly work, but Brian did it," Knight said. "His computational model can reproduce the sound the patient heard and you can actually recognize the word, although not at a perfect level."

To be clear, "we are only decoding sounds a person actually hears, not what they are imagining or thinking," Pasley told TechNewsDaily. "This research is not mind-reading or thought-reading — we can decode the sounds people are actually listening to but we cannot tell what they are thinking to themselves. Some evidence suggests that the same brain regions activate when we listen to sounds and when we imagine sounds, but we don't yet have a good understanding of how similar those two situations really are."

A major step "would be to extend this approach to internal verbalizations, but we don't yet know if that is possible," Pasley acknowledged. "If it were possible, the brain signals required for this are currently only accessible through invasive procedures."

Still, a great deal of work has gone into developing safe and practical brain prosthetic devices that could be used with these procedures. "As this technology improves, these devices will become practical for the severely disabled," Pasley said.

Pasley cautioned that this research could not currently be used "for interrogations or any other thought-reading, because at this stage we are only looking at someone's perceptual experience, not their internal verbalizations. If that becomes possible in the future, the procedure requires an invasive medical implant."

The scientists detailed their findings online Jan. 31 in the journal PLoS Biology.

This story was provided by InnovationNewsDaily, a sister site to LiveScience.